Capstone2: German credit Risk

Louis Mono

Abstract

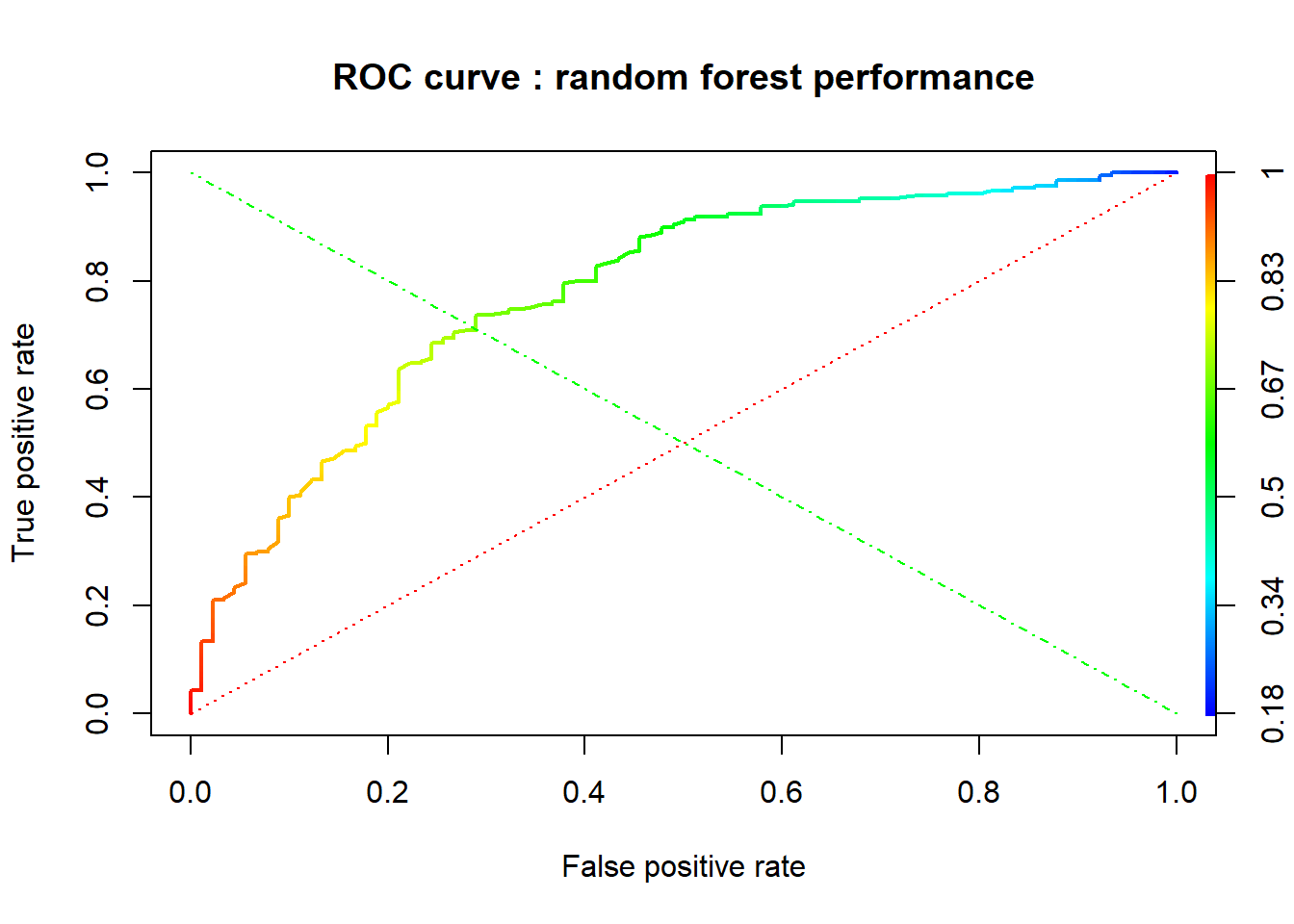

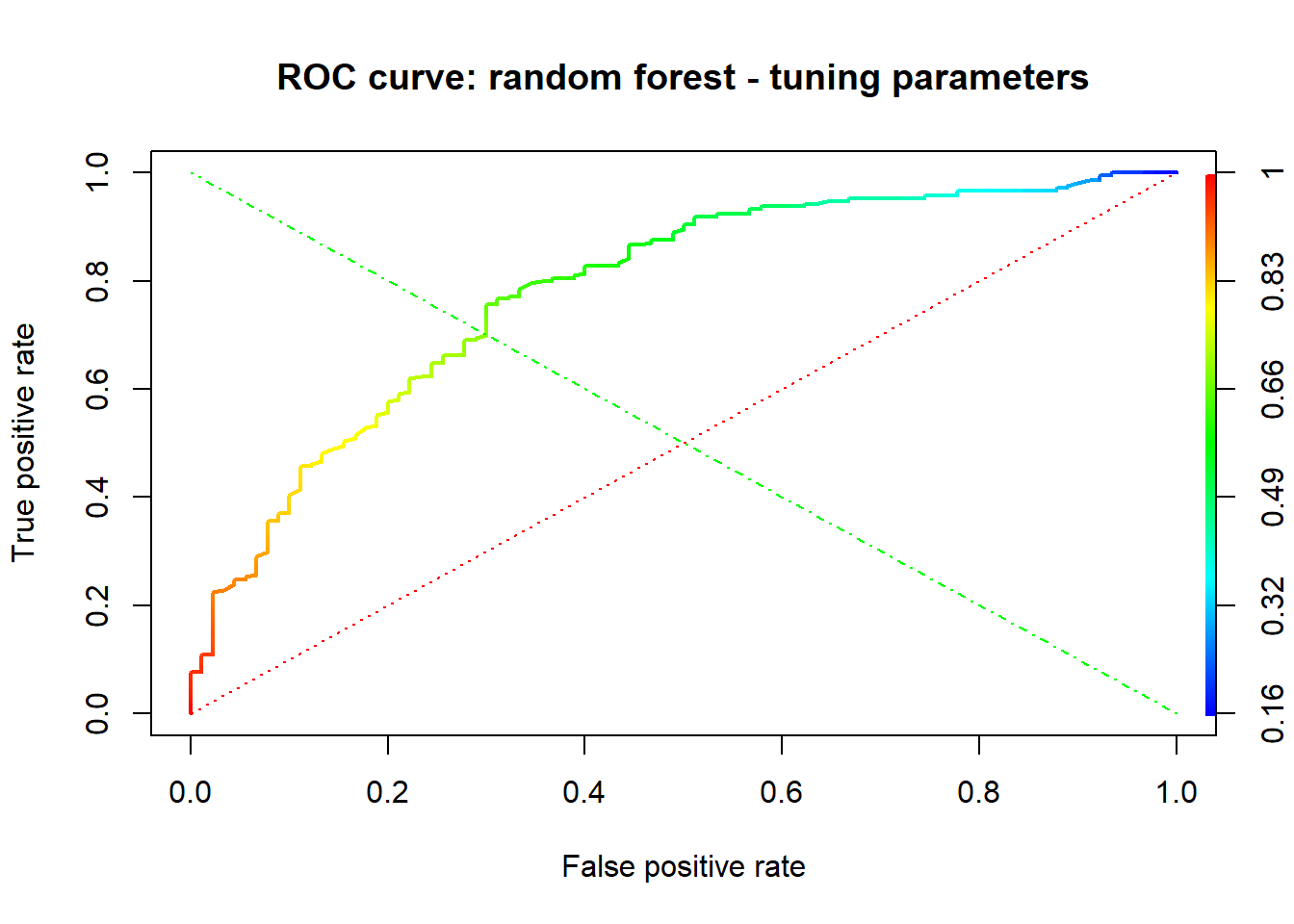

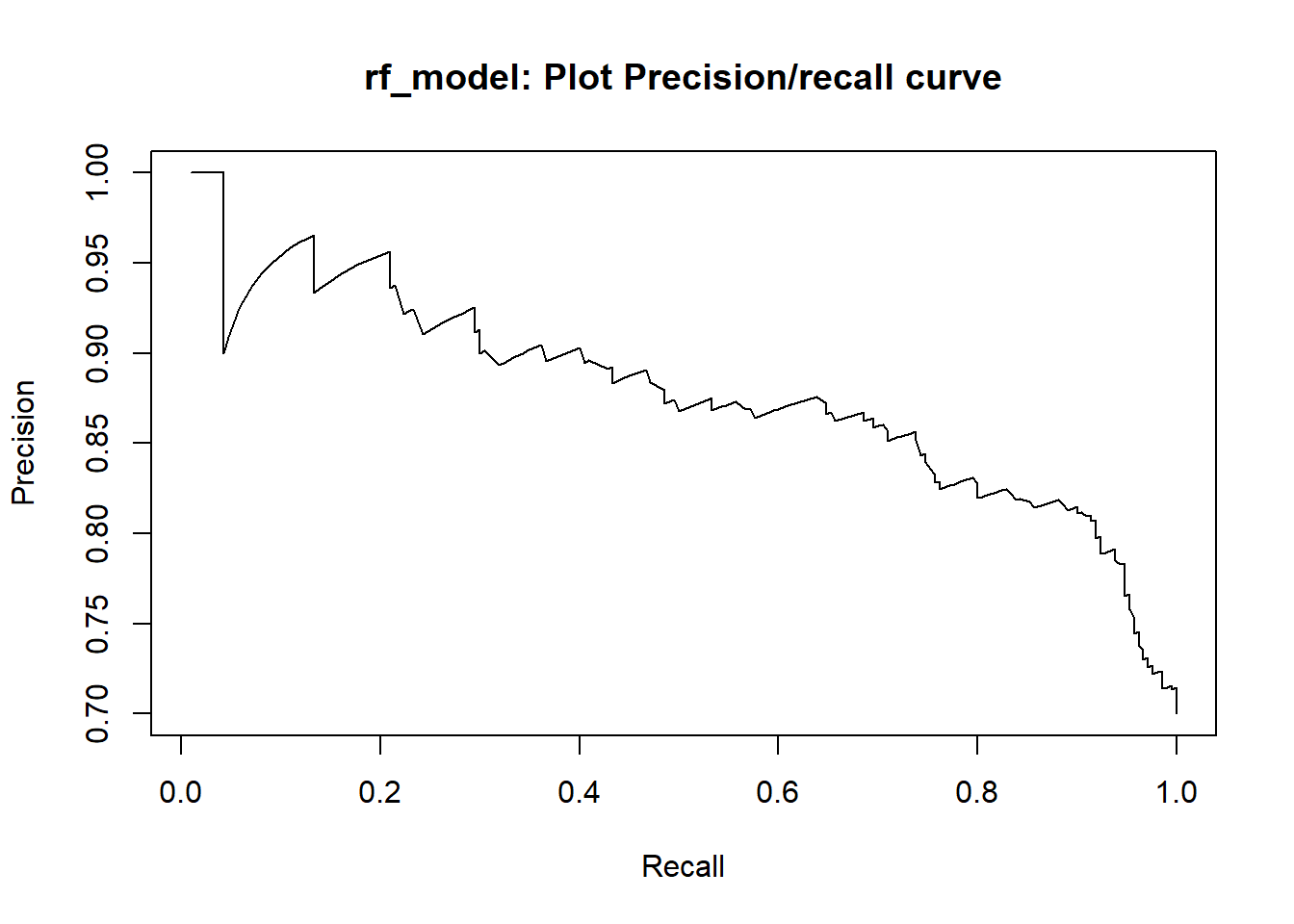

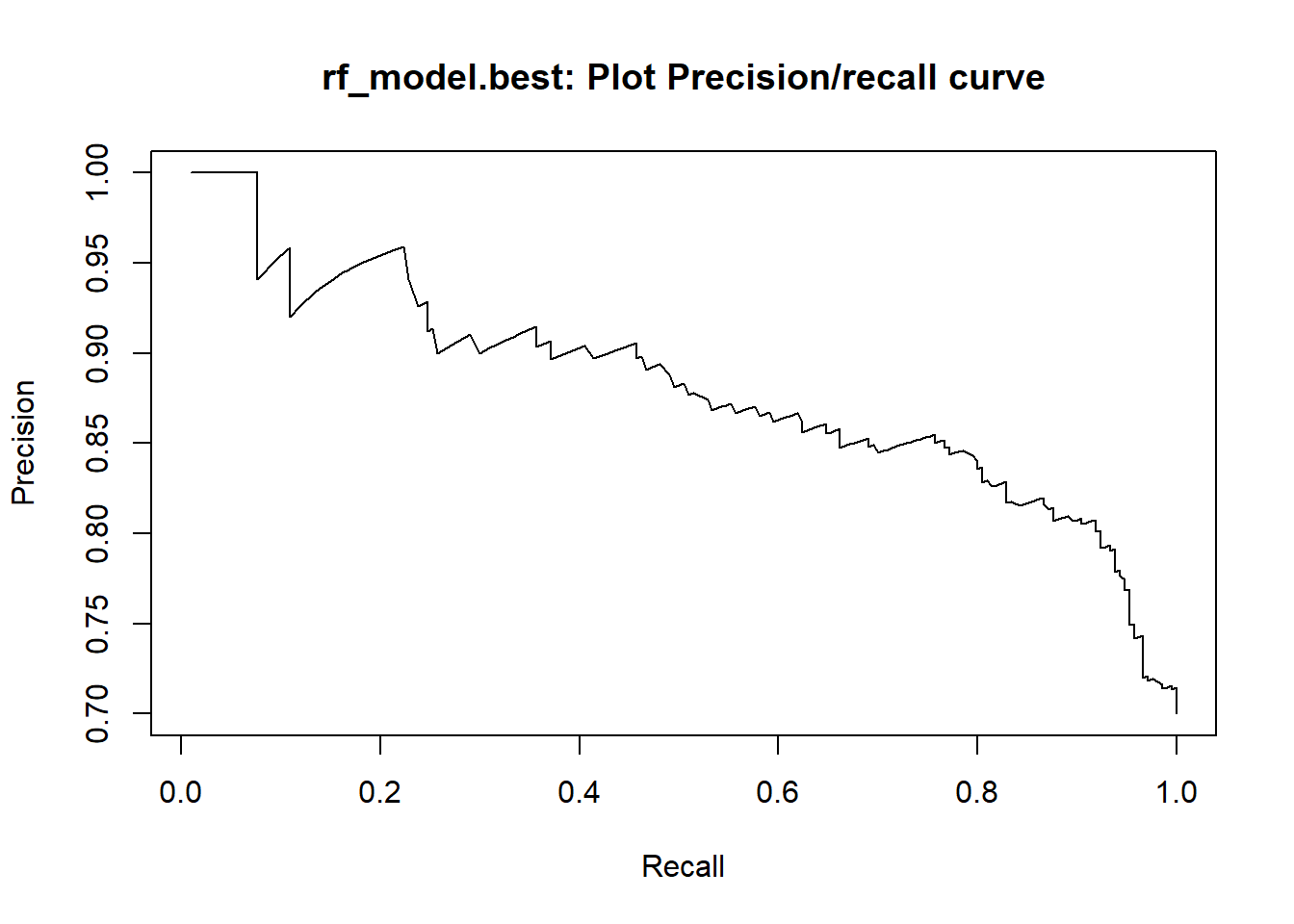

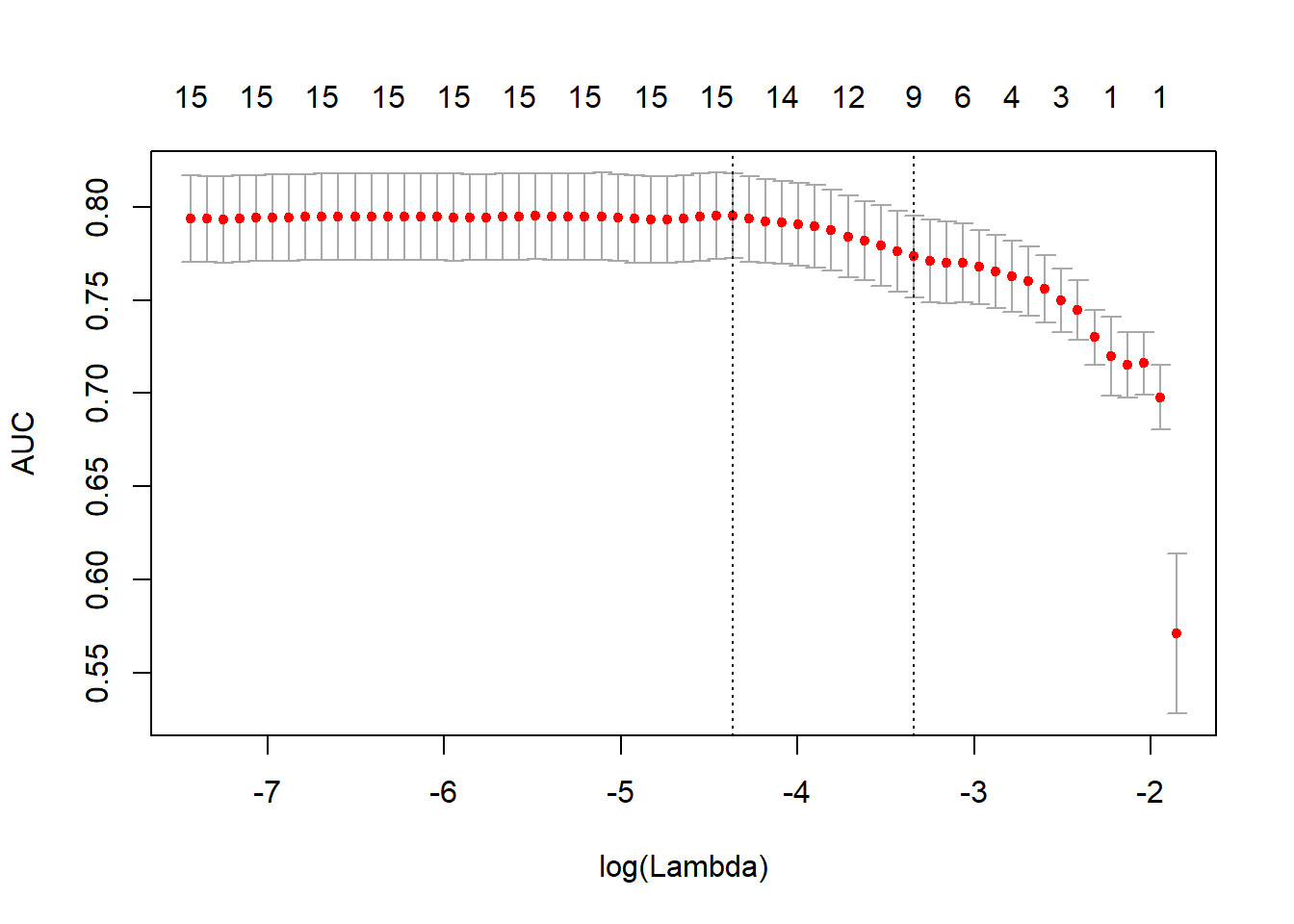

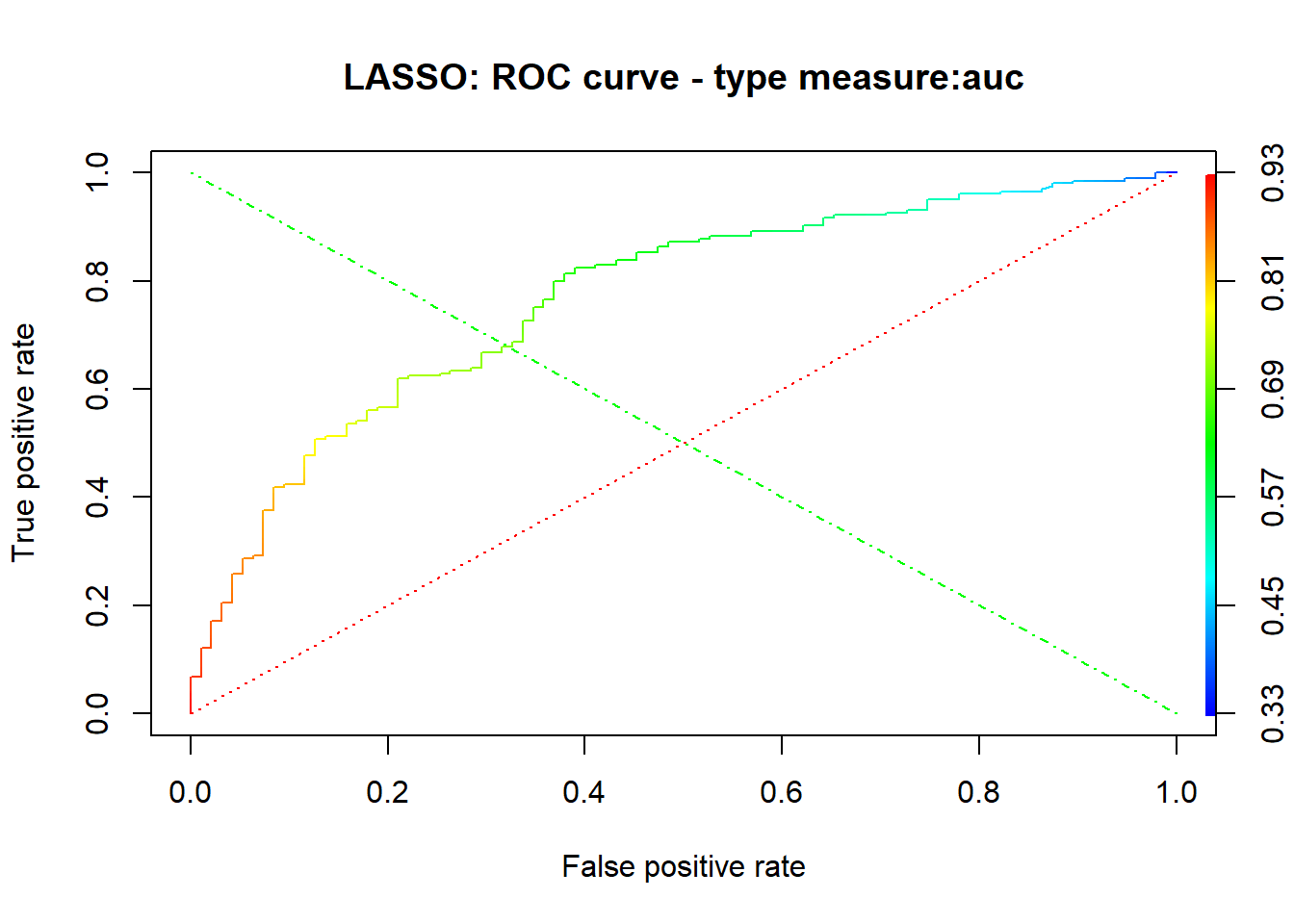

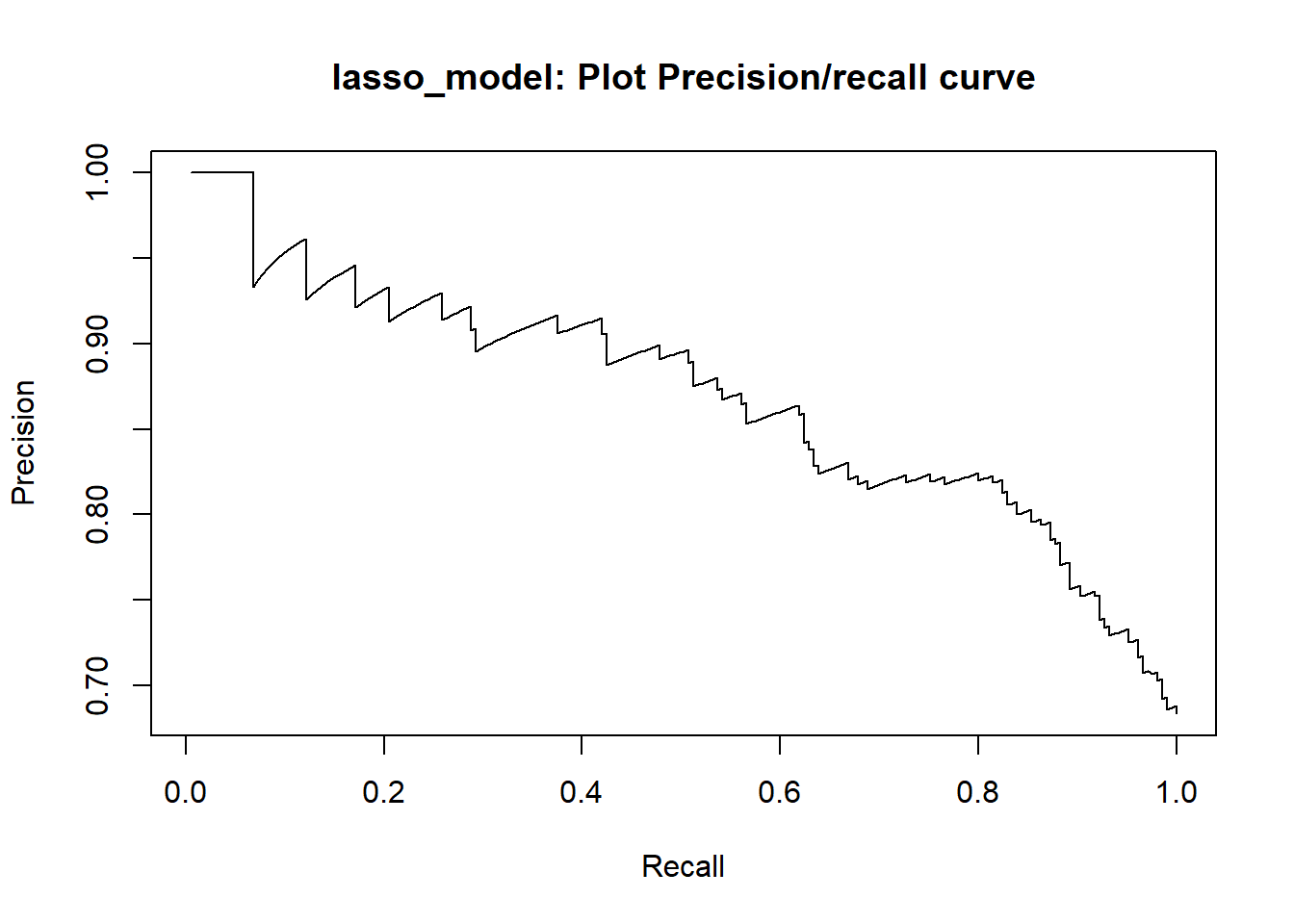

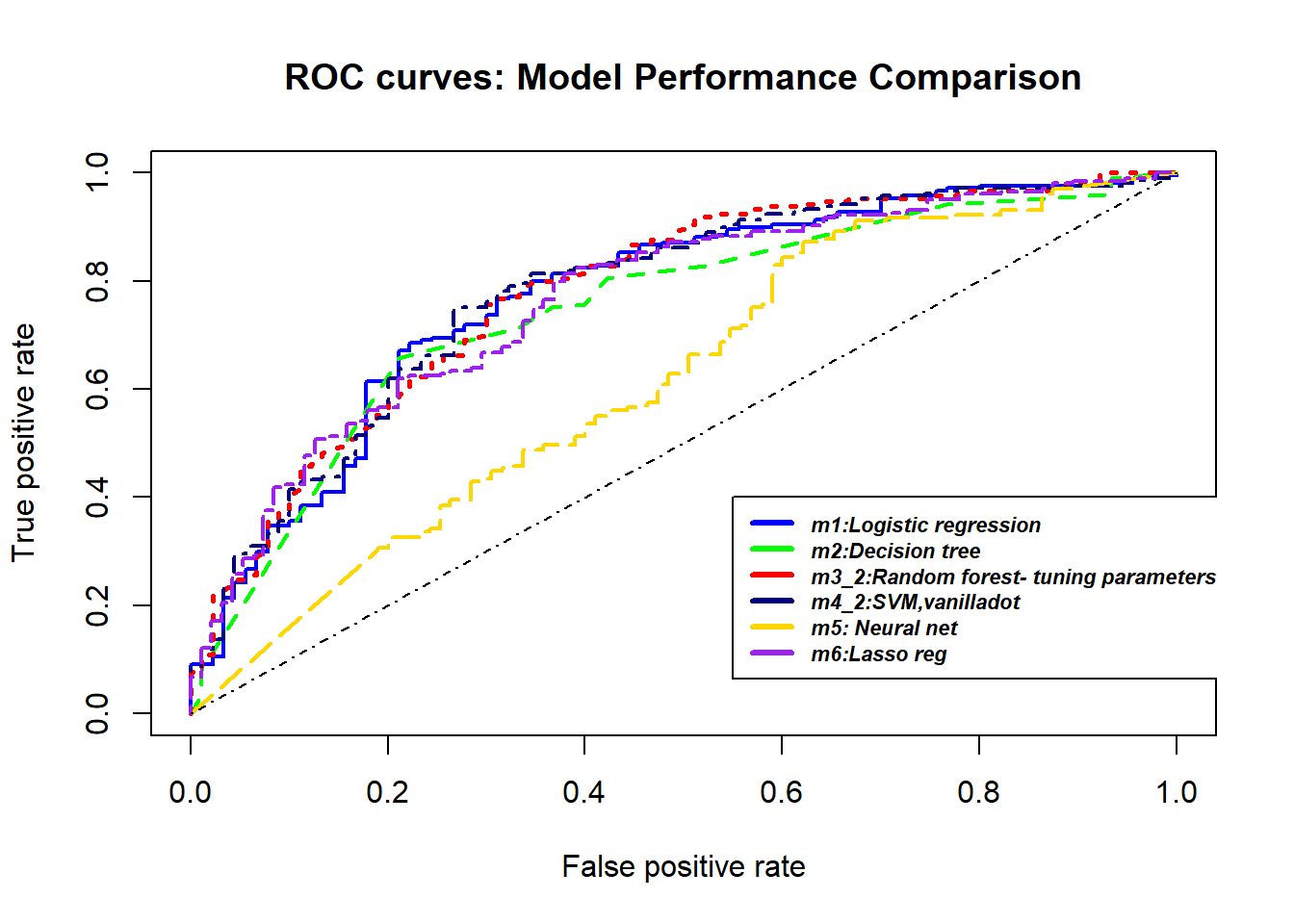

A credit score is a numerical expression based on a level analysis of a person’s credit files, to represent the creditworthiness of an individual. Lenders, such as banks and credit card companies, use credit scores to evaluate the potential risk posed by lending money to consumers and to mitigate losses due to bad debt. Generally, financial institutions utilize it as a method to support the decision-making about credit applications. Both Machine learning (ML) and Artificial intelligence (AI) techniques have been explored for credit scoring . Although there are no consistent conclusions on which ones are better, recent studies suggest combining multiple classifiers, i.e., ensemble learning, may have a better performance. In our study, using the german credit data available on the UCI Machine Learning Repository we assessed the performance of different Machine learning techniques based on their overall accuracy, but not only, since we supported our results with accuracy measures such as AUC, F1-score, KS and Gini . Our findings suggest that random forest model with tuning parameters , support vector machine based on vanilladot Linear kernel function and lasso regression, with a good overall accuracy values lead us to the most performant AUC values, 0.7833 , 0.7794 and 0.7675, respectively.

I. Introduction

The purpose of credit scoring is to classify the applicants into two types : applicants with good credit and applicants with bad credits. When a bank receives a loan application , applicants with good credit have great possibility to repay financial obligation. Applicants with bad credit have high possibility of defaulting. The accuracy of credit scoring is critical to financial institutions profitability . Even 1% improvement on the accuracy of credit scoring of applicants with bad credit will decreases a great loss for financial institutions (Hand & Henley, 1997).

This study aims at adressing this classification problem by using the the applicant’s demographic and socio-economic profiles of german credit data to examine the risk of lending loan to the customer. We assessed the performance of different Machine learning algorithms (Logistic regression model , Decision tree, random forests, Support vector machines ,neural networks, Lasso ) in terms of overall accuracy. For the model optimization, we conducted a comparative assessment of different models combining the effects of Gini, KS, F1-score, balanced accuracy and the area under the ROC curve (AUC) values.

The remainder of the report is organized as follow. In section 2, a general insight of the Dataset is presented, with a particular attention to the Data exploration. In section 3, we perform the Data Pre-processing. Section 4 explains the Methods and Analysis over different Machine learning techniques we used and presents the metrics for the models performance evaluation, while section 5 contains our main Results. Section 6 draws Conclusions and suggestions.

II. Dataset

2.1. Overview

Loading library and data

#library

library(tidyverse)

library(rchallenge)

library(caret)

library(RColorBrewer)

library(reshape2)

library(lattice)

library (rpart)

library(rpart.plot)

library(rattle)

library(ROCR)

library(ggpubr)

library(ggthemes)

library(randomForest)

library(Information)

library(VIM)

library(Boruta)

library(e1071)

library(kernlab)

library(gridExtra)

library(nnet)

library(NeuralNetTools)

library(lars)

library(glmnet)

library(kableExtra)

library(doSNOW)

library(doParallel)

library(rmdformats)

#data

data("german")

#get class/glimpse of data

class(german) "data.frame"glimpse(german)Observations: 1,000

Variables: 21

$ Duration <dbl> 6, 48, 12, 42, 24, 36, 24, 36, 12, 3...

$ Amount <dbl> 1169, 5951, 2096, 7882, 4870, 9055, ...

$ InstallmentRatePercentage <dbl> 4, 2, 2, 2, 3, 2, 3, 2, 2, 4, 3, 3, ...

$ ResidenceDuration <dbl> 4, 2, 3, 4, 4, 4, 4, 2, 4, 2, 1, 4, ...

$ Age <dbl> 67, 22, 49, 45, 53, 35, 53, 35, 61, ...

$ NumberExistingCredits <dbl> 2, 1, 1, 1, 2, 1, 1, 1, 1, 2, 1, 1, ...

$ NumberPeopleMaintenance <dbl> 1, 1, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, ...

$ Telephone <fct> none, yes, yes, yes, yes, none, yes,...

$ ForeignWorker <fct> yes, yes, yes, yes, yes, yes, yes, y...

$ CheckingAccountStatus <fct> lt.0, 0.to.200, none, lt.0, lt.0, no...

$ CreditHistory <fct> Critical, PaidDuly, Critical, PaidDu...

$ Purpose <fct> Radio.Television, Radio.Television, ...

$ SavingsAccountBonds <fct> Unknown, lt.100, lt.100, lt.100, lt....

$ EmploymentDuration <fct> gt.7, 1.to.4, 4.to.7, 4.to.7, 1.to.4...

$ Personal <fct> Male.Single, Female.NotSingle, Male....

$ OtherDebtorsGuarantors <fct> None, None, None, Guarantor, None, N...

$ Property <fct> RealEstate, RealEstate, RealEstate, ...

$ OtherInstallmentPlans <fct> None, None, None, None, None, None, ...

$ Housing <fct> Own, Own, Own, ForFree, ForFree, For...

$ Job <fct> SkilledEmployee, SkilledEmployee, Un...

$ Class <fct> Good, Bad, Good, Good, Bad, Good, Go...The German Credit data is a dataset provided by Dr. Hans Hofmann of the University of Hamburg. It’s a publically available from the UCI Machine Learning repository at the following link: https://archive.ics.uci.edu/ml/datasets/Statlog+(German+Credit+Data). However, this dataset is also built-in in some R packages, like caret , rchallenge or evtree. We decided to use directly the german data from the rchallenge package , which is a transformed version of the GermanCredit dataset with factors instead of dummy variables.

Getting a glimpse of german data, we observe it’s a data frame containing 21 variables for a total of 1,000 observations . The response variable (or outcome,y) in the dataset corresponds to the Class label, which is a binary variable indicating credit risk or credit worthiness with levels Good and Bad. Here, we are going to describe the 20 features and their characteristics:

quantitative features

-Duration : duration in months.

-Amount: credit Amount.

-InstallmentRatePercentage: installment rate in percentage of disposable income.

-ResidenceDuration: present residence since .

-Age : Client’s age.

-NumberExistingCredits: number of existing credits at this bank.

-NumberPeopleMaintenance: number of people being liable to provide maintenance.

qualitative features

-Telephone: binary variable indicating if customer has a registered telephone number

-ForeignWorker: binary variable indicating if the customer is a foreign worker

-CheckingAccountStatus: factor variable indicating the status of checking account

-CreditHistory: factor variable indicating credit history

-Purpose: factor variable indicating the credit’s purpose

-SavingsAccountBonds: factor variable indicating the savings account/bonds

-EmploymentDuration: ordered factor indicating the duration of the current employment

-Personal: factor variable indicating personal status and sex

-OtherDebtorsGuarantors: factor variable indicating Other debtors

-Property: factor variable indicating the client’s highest valued property

-OtherInstallmentPlans: factor variable indicating other installment plans

-Housing: factor variable indicating housing

-Job: factor indicating employment status

2.2. Data exploration

First,summary statistics of the 21 variables are presented , out of which 7 are numerical attributes. Also, we can identify the various levels for the 14 categorical attributes including the outcome.

summary(german) Duration Amount InstallmentRatePercentage

Min. : 4.0 Min. : 250 Min. :1.000

1st Qu.:12.0 1st Qu.: 1366 1st Qu.:2.000

Median :18.0 Median : 2320 Median :3.000

Mean :20.9 Mean : 3271 Mean :2.973

3rd Qu.:24.0 3rd Qu.: 3972 3rd Qu.:4.000

Max. :72.0 Max. :18424 Max. :4.000

ResidenceDuration Age NumberExistingCredits

Min. :1.000 Min. :19.00 Min. :1.000

1st Qu.:2.000 1st Qu.:27.00 1st Qu.:1.000

Median :3.000 Median :33.00 Median :1.000

Mean :2.845 Mean :35.55 Mean :1.407

3rd Qu.:4.000 3rd Qu.:42.00 3rd Qu.:2.000

Max. :4.000 Max. :75.00 Max. :4.000

NumberPeopleMaintenance Telephone ForeignWorker CheckingAccountStatus

Min. :1.000 none:404 no : 37 lt.0 :274

1st Qu.:1.000 yes :596 yes:963 0.to.200:269

Median :1.000 gt.200 : 63

Mean :1.155 none :394

3rd Qu.:1.000

Max. :2.000

CreditHistory Purpose SavingsAccountBonds

NoCredit.AllPaid: 40 Radio.Television :280 lt.100 :603

ThisBank.AllPaid: 49 NewCar :234 100.to.500 :103

PaidDuly :530 Furniture.Equipment:181 500.to.1000: 63

Delay : 88 UsedCar :103 gt.1000 : 48

Critical :293 Business : 97 Unknown :183

Education : 50

(Other) : 55

EmploymentDuration Personal OtherDebtorsGuarantors

lt.1 :172 Male.Divorced.Seperated: 50 None :907

1.to.4 :339 Female.NotSingle :310 CoApplicant: 41

4.to.7 :174 Male.Single :548 Guarantor : 52

gt.7 :253 Male.Married.Widowed : 92

Unemployed: 62 Female.Single : 0

Property OtherInstallmentPlans Housing

RealEstate:282 Bank :139 Rent :179

Insurance :232 Stores: 47 Own :713

CarOther :332 None :814 ForFree:108

Unknown :154

Job Class

UnemployedUnskilled : 22 Bad :300

UnskilledResident :200 Good:700

SkilledEmployee :630

Management.SelfEmp.HighlyQualified:148

outcome, y : Class

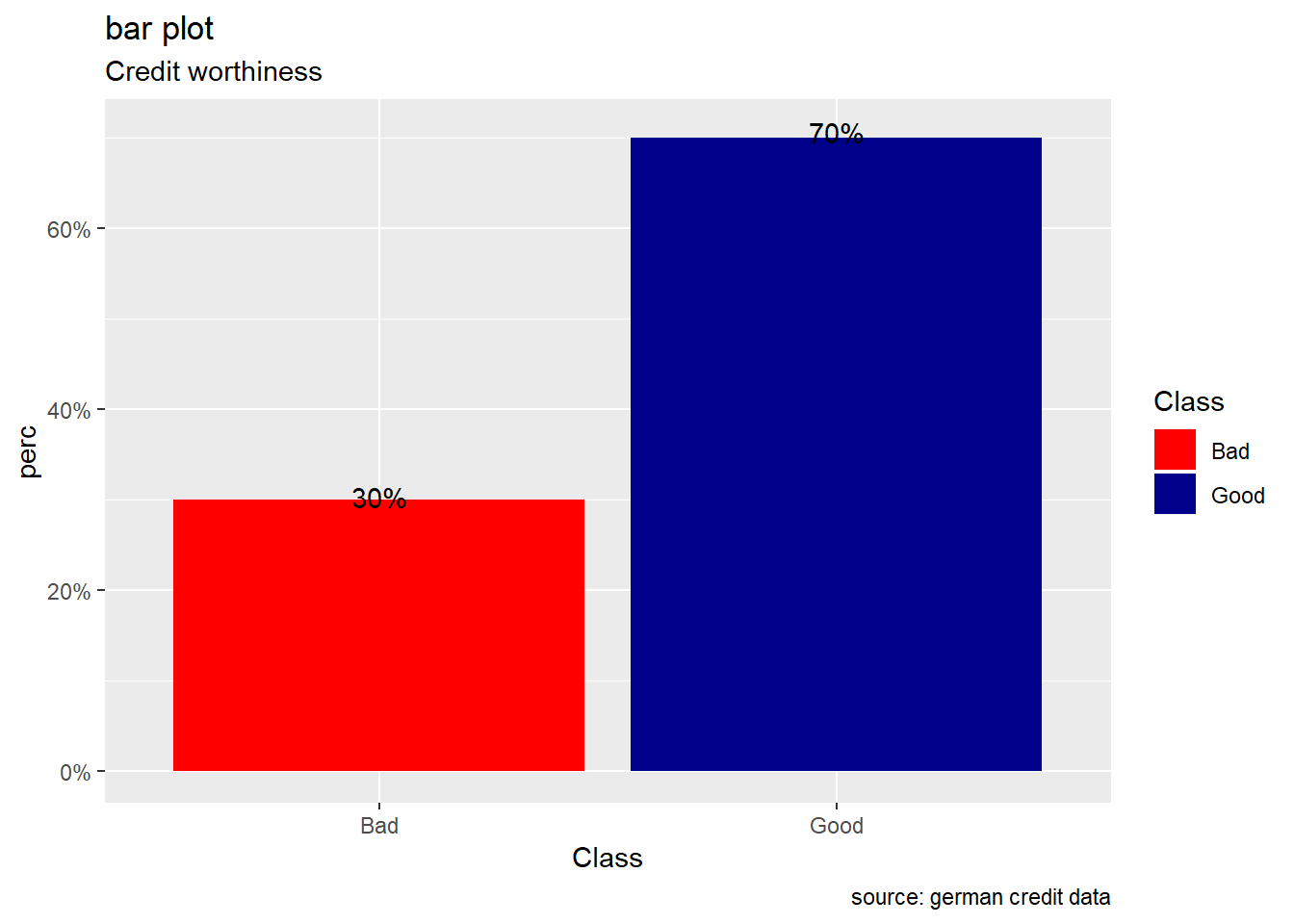

Visual exploration of the outcome, the credit worthiness, shows that there are more people with a good risk than a bad one. In fact, 70% of applicants have a good credit risk while about 30% have a bad credit risk .Then, class are unbalanced and for our subsequent analysis the data splitting in training set and test set should be done with a stratified random sampling method.

#i create Class.prop, an object of Class 'tbl_df', 'tbl' and 'data.frame'.It contains the calculated relative frequencies or proportions for the levels Bad/Good of the credit worthiness in the german credit data.

Class.prop <- german %>%

count(Class) %>%

mutate(perc = n / nrow(german)) # bar plot of credit worthiness

Class.prop %>%

ggplot(aes(x=Class,y= perc,fill=Class))+

geom_bar(stat="identity") +

labs(title="bar plot",

subtitle = "Credit worthiness",

caption=" source: german credit data") +

geom_text(aes(label=scales::percent(perc)), position = position_stack(vjust = 1.01))+

scale_y_continuous(labels = scales::percent)+

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

quantitative features.

Data exploration of numerical attributes disclosed the following insights:

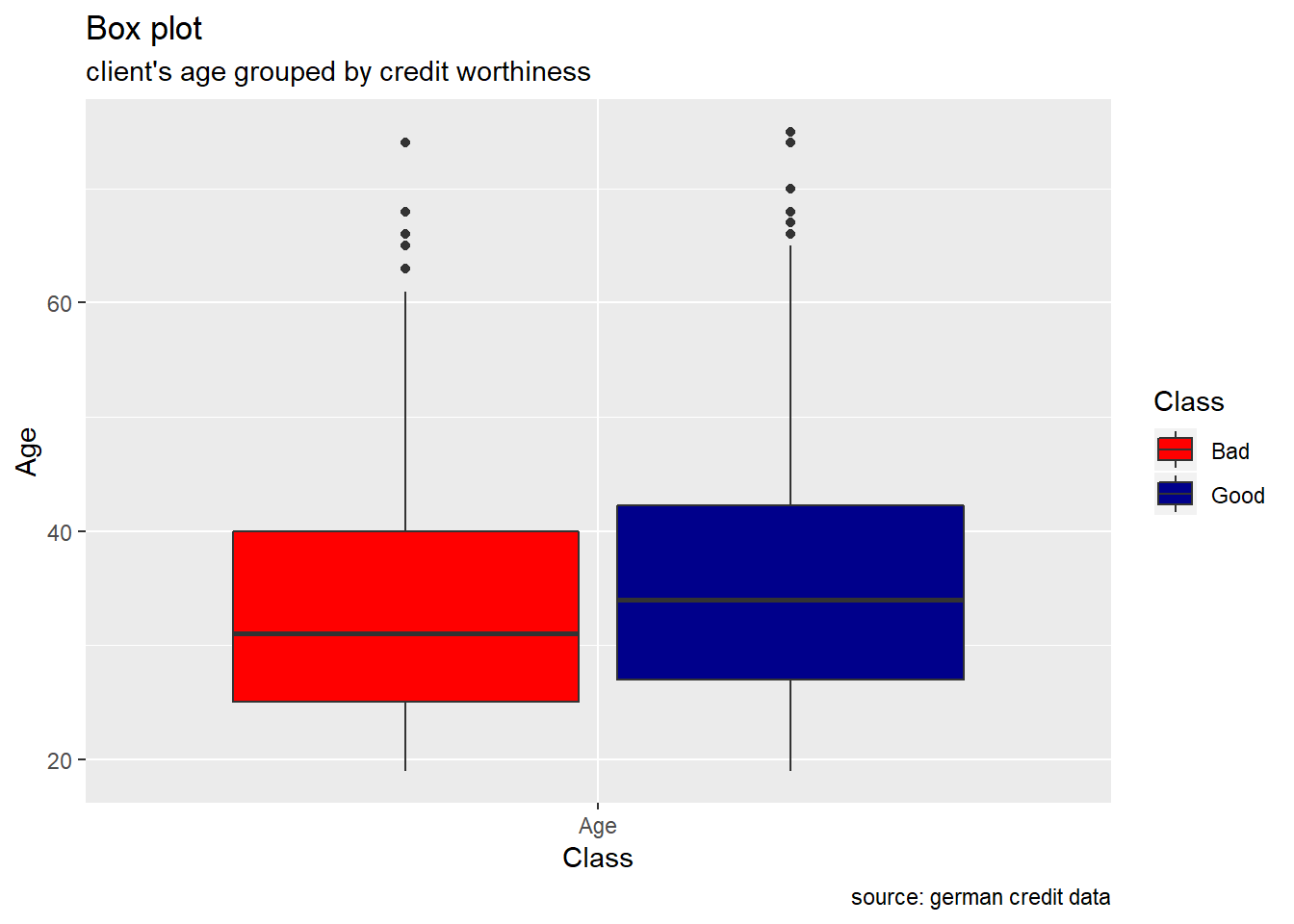

Age : From the age variable, we see that the median value for bad risk records is lesser than that of good records, it might be premature to say young people tend to have bad credit records, but we can safely assume it tends to be riskier.

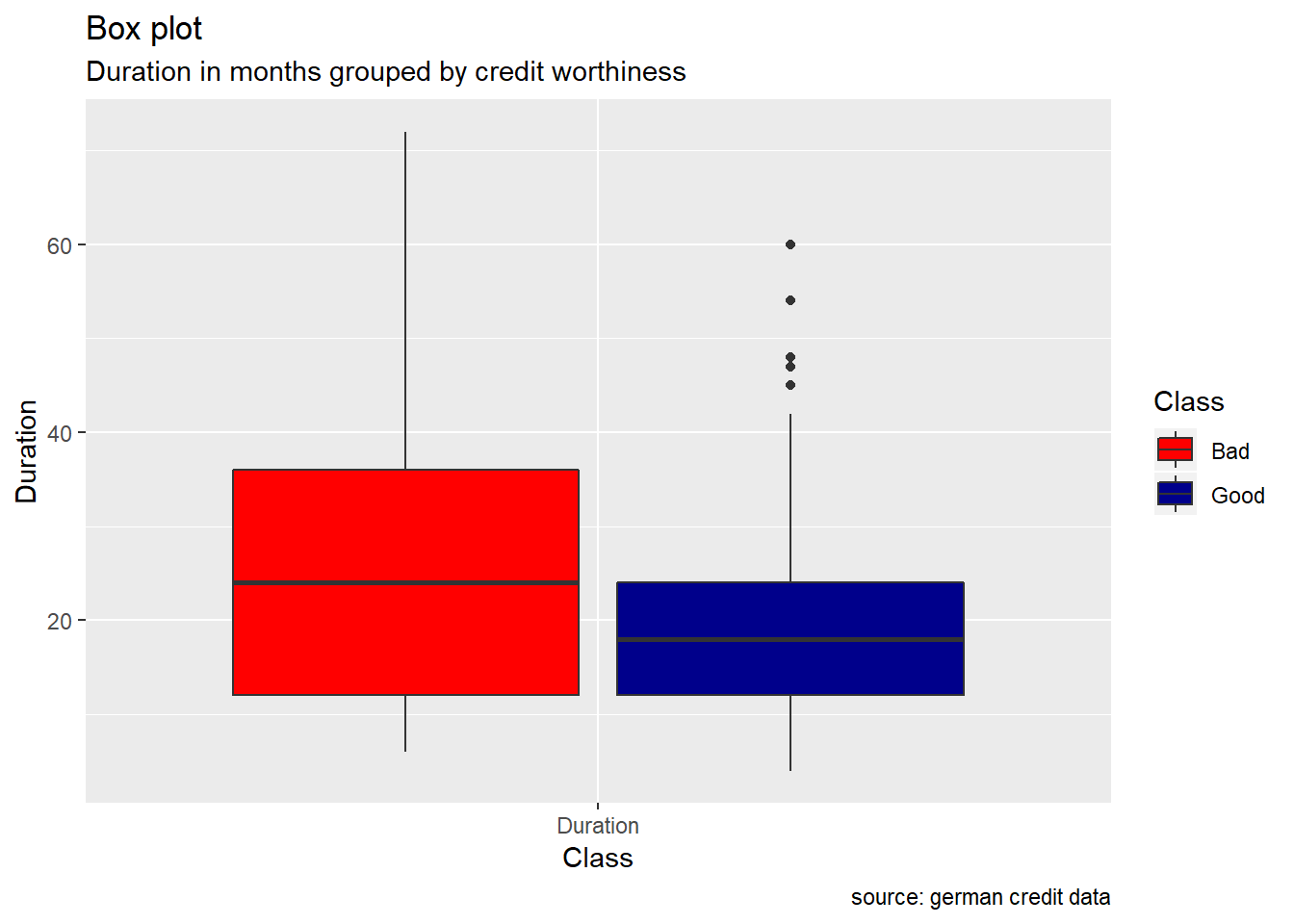

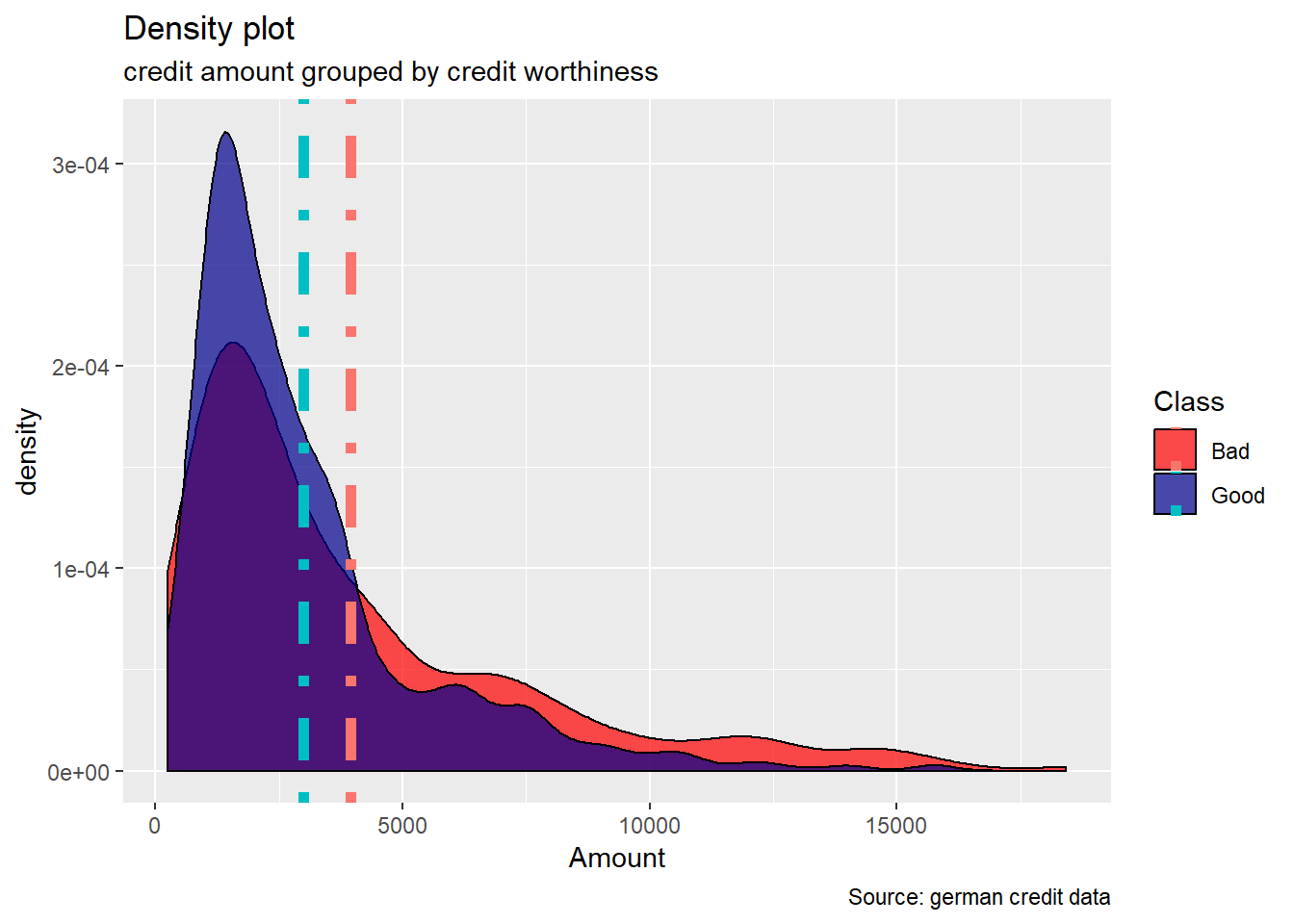

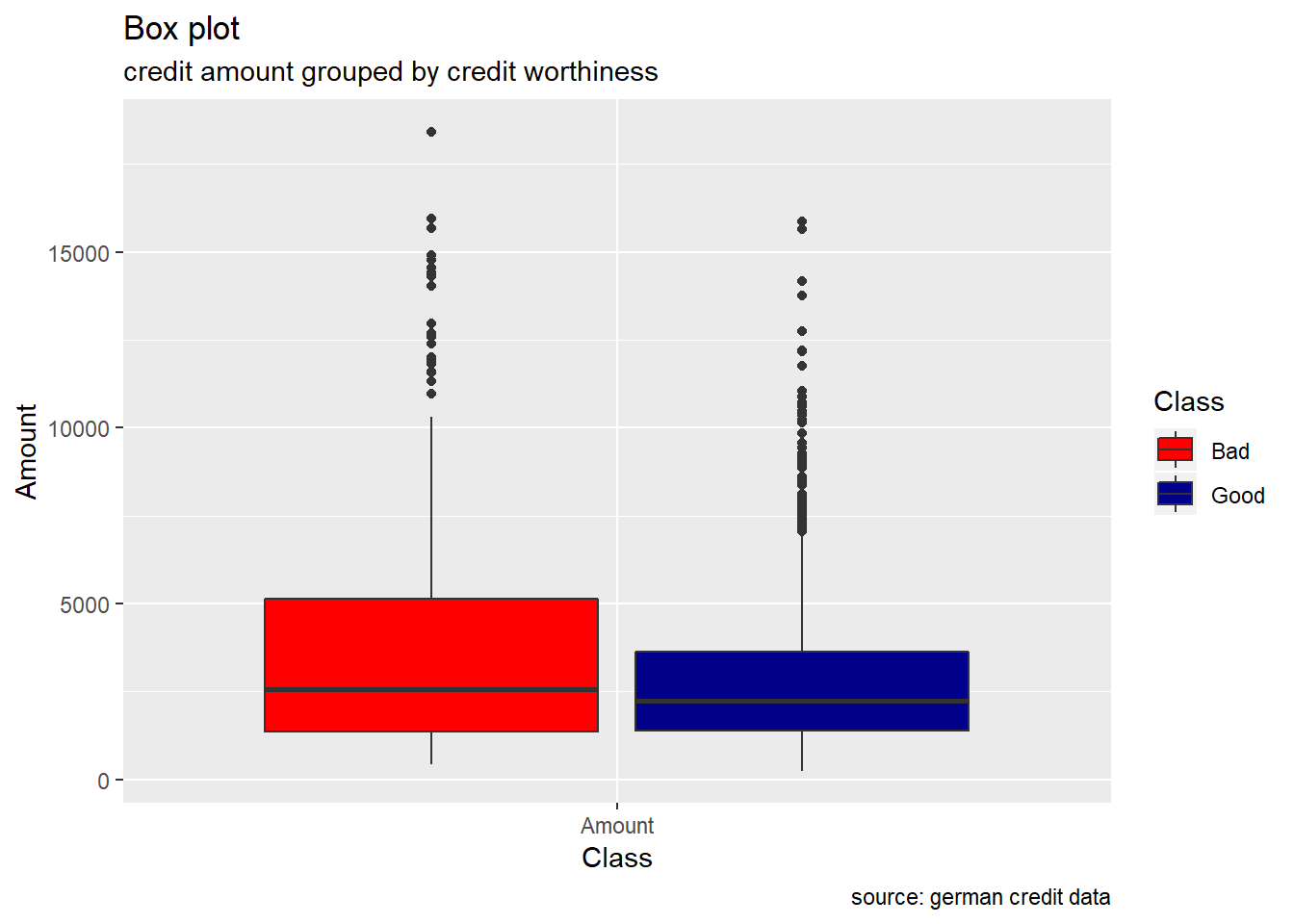

Duration, Amount : Duration in months as well as credit Amount , appears to show higher median value for bad risk with respect to good risk. Also, the range of these two variables, are more wide for the bad risk records than the good ones. When plotting their density curve along the vertical line for their mean value, we observe that neither Duration in months nor Amount credit is normally distributed. Data tends to show a right skewed distribution especially for the Amount credit variable.

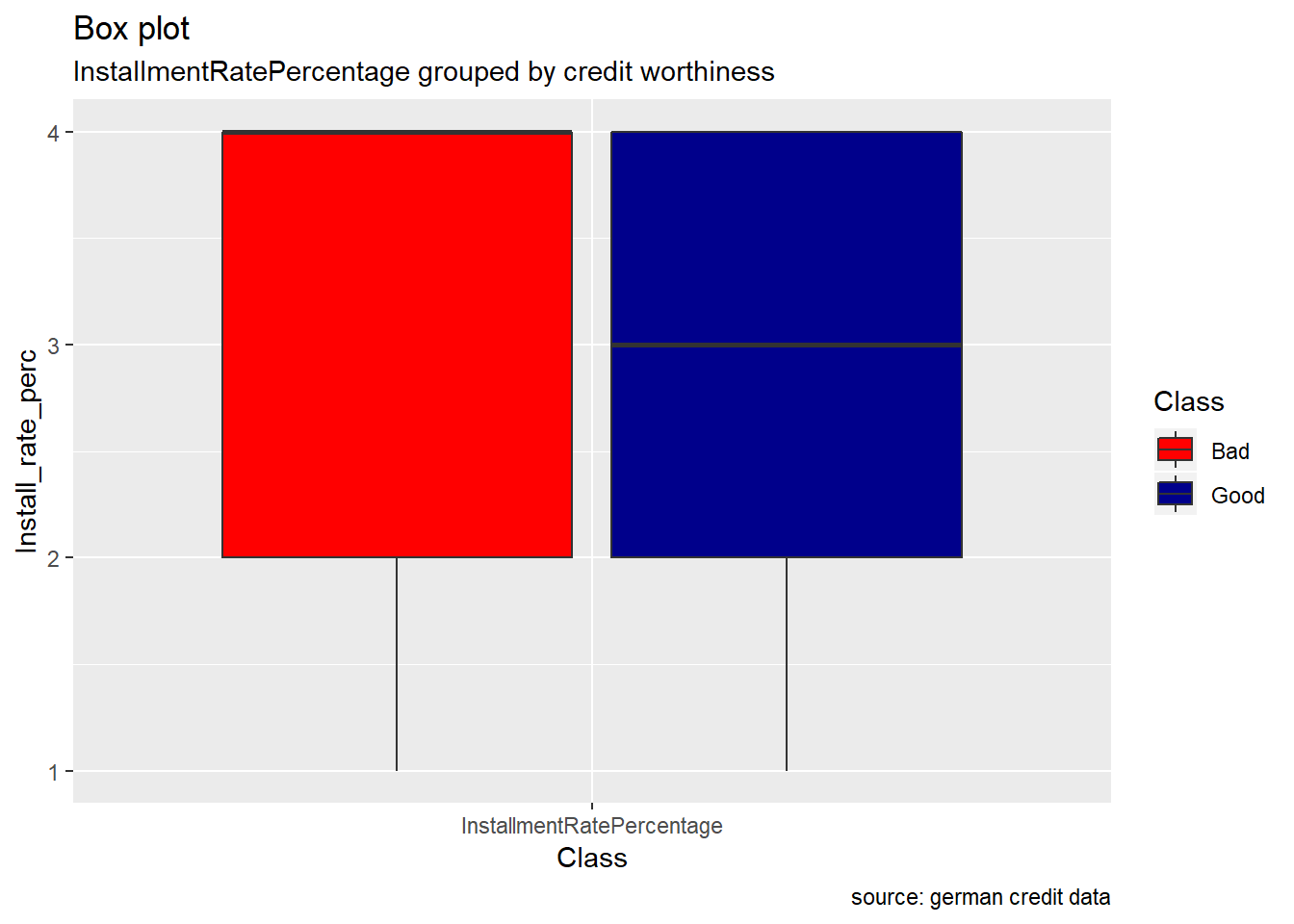

InstallmentRatePercentage : The bar plot of installment rate shows a significant difference between good and bad credits risk . The number of good records seem to be the double of bad records. When we look at the Box plot, it reveals that the median value for bad records is higher than the good ones even if the two Classes appear to have the same range.

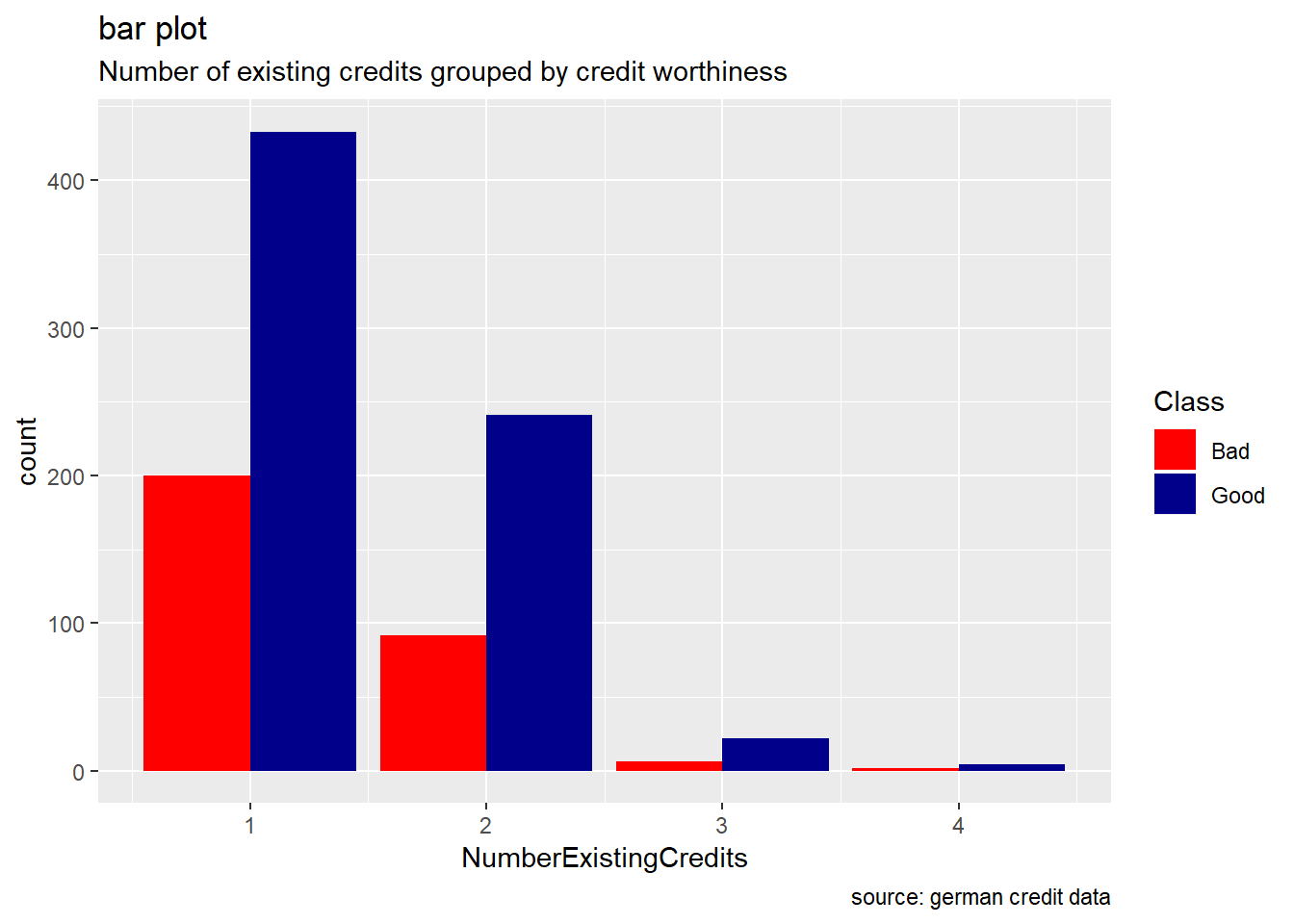

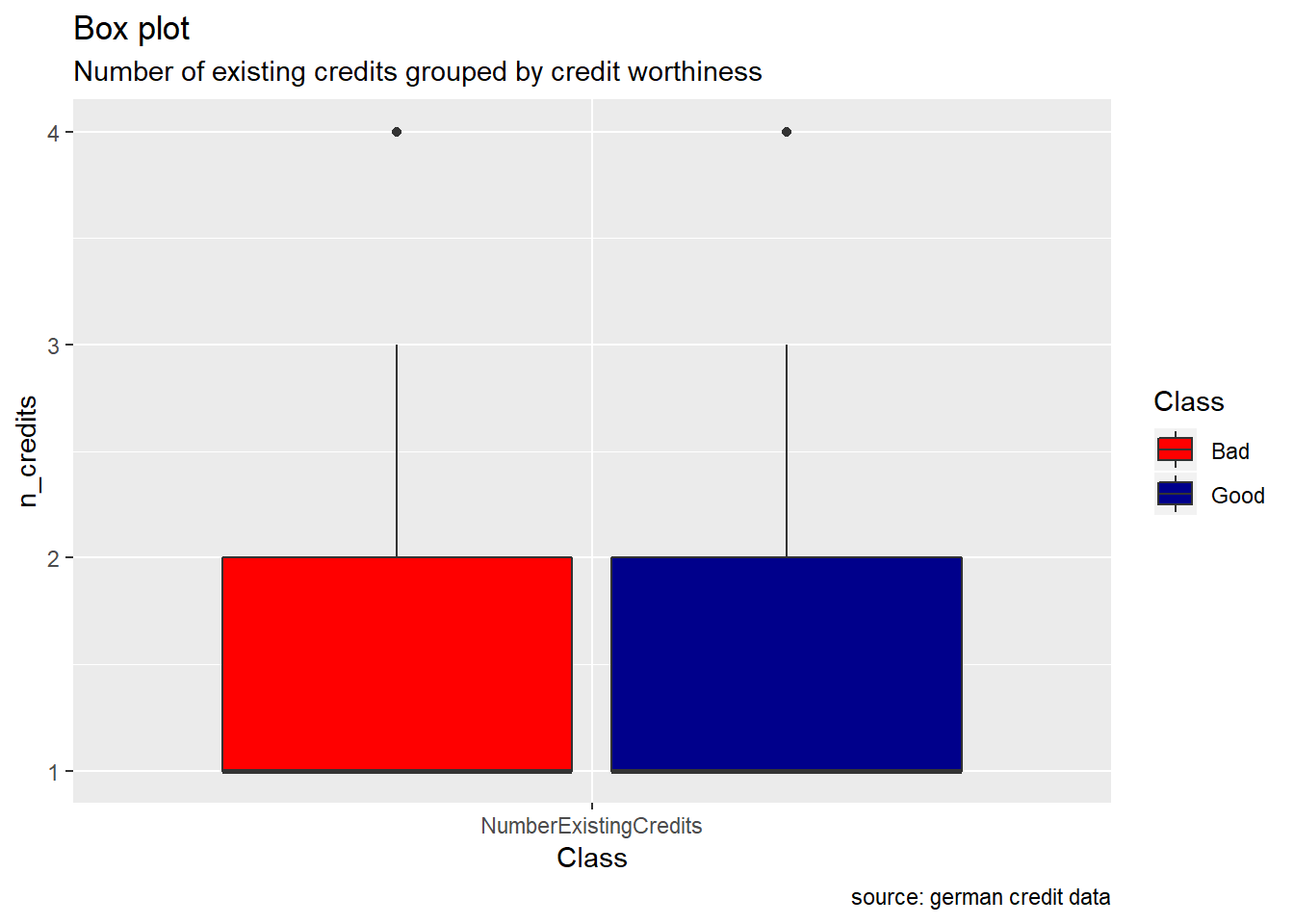

NumberExistingCredits, ResidenceDuration, NumberPeopleMaintenance : we almost bring up a similar observation for these attributes. While they have their two credit worthiness class “good/bad” which seem to show the same range( cf. boxplot) , good records are always higher than the bad ones(cf bar plot). When we compare some of descriptive statistics of two risks for each of these variable, we observe how mean, median, and sd are almost equal.

client’s Age

ggplot(melt(german[,c(5,21)]), aes(x = variable, y = value, fill = Class)) +

geom_boxplot() +

xlab("Class") +

ylab("Age") +

labs(title="Box plot", subtitle="client's age grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

Duration in months

avg.duration <- german %>%

select(Duration, Class) %>%

group_by(Class) %>%

summarise(m=mean(Duration))

german%>%

ggplot(aes(Duration))+

geom_density(aes(fill=Class),alpha=0.7) +

geom_vline(data=avg.duration,aes(xintercept= m , colour= Class), lty = 4 ,size=2)+

labs(title="Density plot",

subtitle="Duration in months grouped by credit worthiness",

caption="Source: german credit data",

x="Duration",

fill="Class") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

ggplot(reshape2::melt(german[,c(1,21)]), aes(x = variable, y = value, fill = Class)) +

geom_boxplot() +

xlab("Class") +

ylab("Duration") +

labs(title="Box plot", subtitle="Duration in months grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

credit Amount

avg.amount <- german %>%

select(Amount, Class) %>%

group_by(Class) %>%

summarise(m=mean(Amount))

german%>%

ggplot(aes(Amount))+

geom_density(aes(fill=Class),alpha=0.7) +

geom_vline(data=avg.amount,aes(xintercept= m , colour= Class), lty = 4 ,size=2)+

labs(title="Density plot",

subtitle="credit amount grouped by credit worthiness",

caption="Source: german credit data",

x="Amount",

fill="Class") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

ggplot(reshape2::melt(german[,c(2,21)]), aes(x = variable, y = value, fill = Class)) +

geom_boxplot() +

xlab("Class") +

ylab("Amount") +

labs(title="Box plot", subtitle="credit amount grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

Installment rate

german %>%

ggplot(aes(InstallmentRatePercentage, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge")+

labs(title="bar plot", subtitle="InstallmentRatePercentage grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

ggplot(reshape2::melt(german[,c(3,21)]), aes(x = variable, y = value, fill = Class)) +

geom_boxplot() +

xlab("Class") +

ylab("Install_rate_perc") +

labs(title="Box plot", subtitle="InstallmentRatePercentage grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

NumberExistingCredits

# we just report here barplot/boxplot for the attribute NumberExistingCredits,

# but we show descriptive statistics including also NumberPeopleMaintenance and #ResidenceDuration. To produce their barplot and boxplot , just repeat the code below and replace with their variable name.

german %>%

ggplot(aes(NumberExistingCredits, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge")+

labs(title="bar plot", subtitle="Number of existing credits grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

ggplot(reshape2::melt(german[,c(6,21)]), aes(x = variable, y = value, fill = Class)) +

geom_boxplot() +

xlab("Class") +

ylab("n_credits") +

labs(title="Box plot", subtitle="Number of existing credits grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

# Compare mean, median and sd of two Class for each of these attributes

german%>% group_by(Class) %>%

summarize(ncredits.mean=mean(NumberExistingCredits),

ncredits.median=median(NumberExistingCredits),

ncredits.sd = sd(NumberExistingCredits))# A tibble: 2 x 4

Class ncredits.mean ncredits.median ncredits.sd

<fct> <dbl> <dbl> <dbl>

1 Bad 1.37 1 0.560

2 Good 1.42 1 0.585german%>% group_by(Class) %>%

summarize(resid_dur.mean=mean(ResidenceDuration),

resid_dur.median=median(ResidenceDuration),

resid_dur.sd = sd(ResidenceDuration))# A tibble: 2 x 4

Class resid_dur.mean resid_dur.median resid_dur.sd

<fct> <dbl> <dbl> <dbl>

1 Bad 2.85 3 1.09

2 Good 2.84 3 1.11german%>% group_by(Class) %>%

summarize(people_maint.mean=mean(NumberPeopleMaintenance),

people_maint.median=median(NumberPeopleMaintenance),

people_maint.sd = sd(NumberPeopleMaintenance))# A tibble: 2 x 4

Class people_maint.mean people_maint.median people_maint.sd

<fct> <dbl> <dbl> <dbl>

1 Bad 1.15 1 0.361

2 Good 1.16 1 0.363

In the following DT table, we can interactively visualize all numerical attributes and our response variable, credit worthiness (Class) . We can search / filter any keywords or specific entries for each variable.

# i create a dataframe "get_num" which contains all numerical attributes plus the response variable. Then, with the function datatable of DT library , i create HTML widget to display get_num.

get_num <- select_if(german, is.numeric)

get_num <- get_num %>%

mutate(Class = german$Class)

DT::datatable(get_num,rownames = FALSE, filter ="top", options =list(pageLength=10,scrollx=T))

qualitative features.

Data exploration of categorical attributes disclosed the following insights:

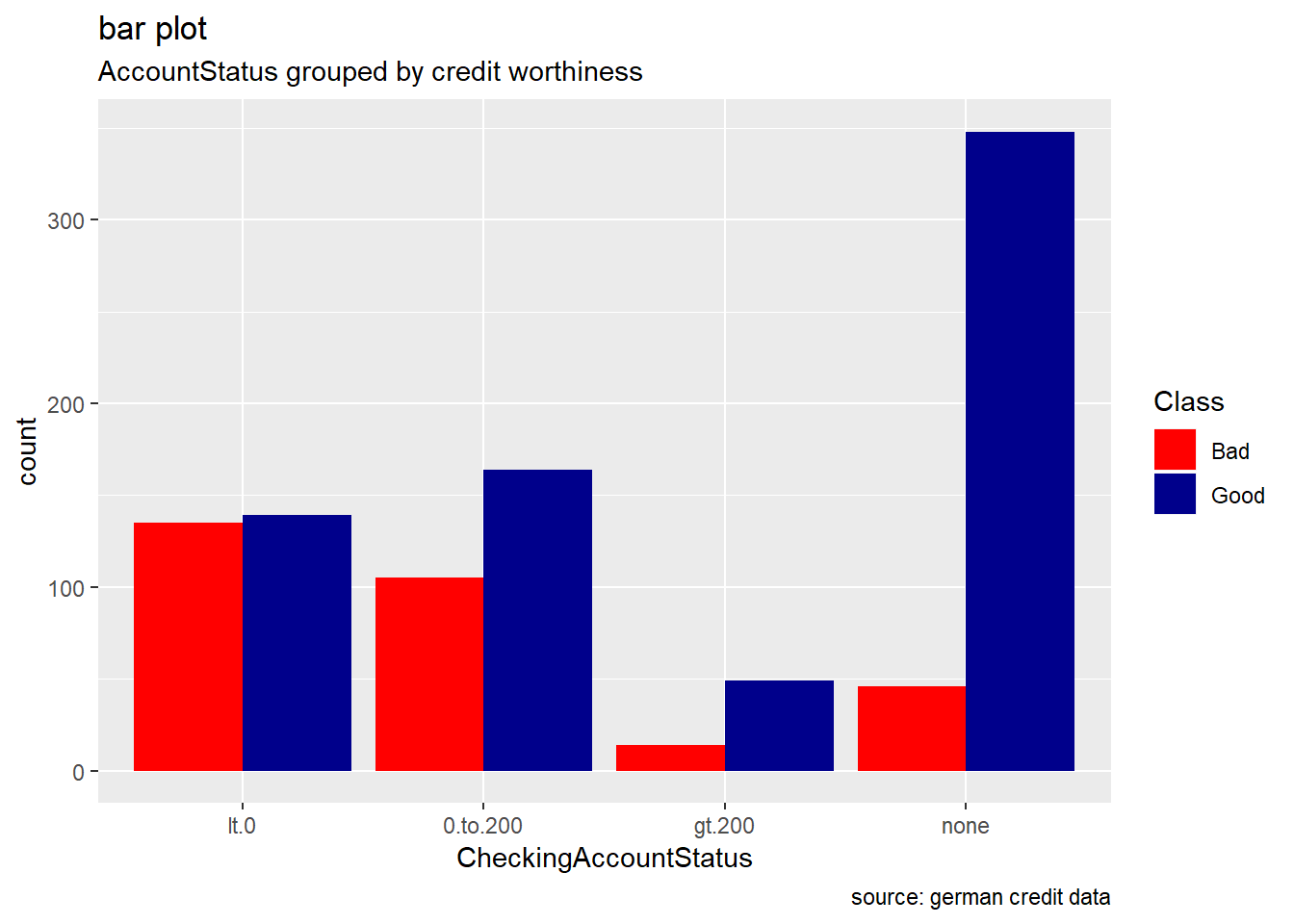

CheckingAccountStatus : the current status of the checking account matters as the frequency of the response variables is seen to differ from one sub category to another. Accounts with less than 0 DM houses more number of bad credit risk records while accounts with more than 200 DM the least.we see how applicants with no checking account house the highest number of good records. ( NB: DM -> Deutsche Mark)

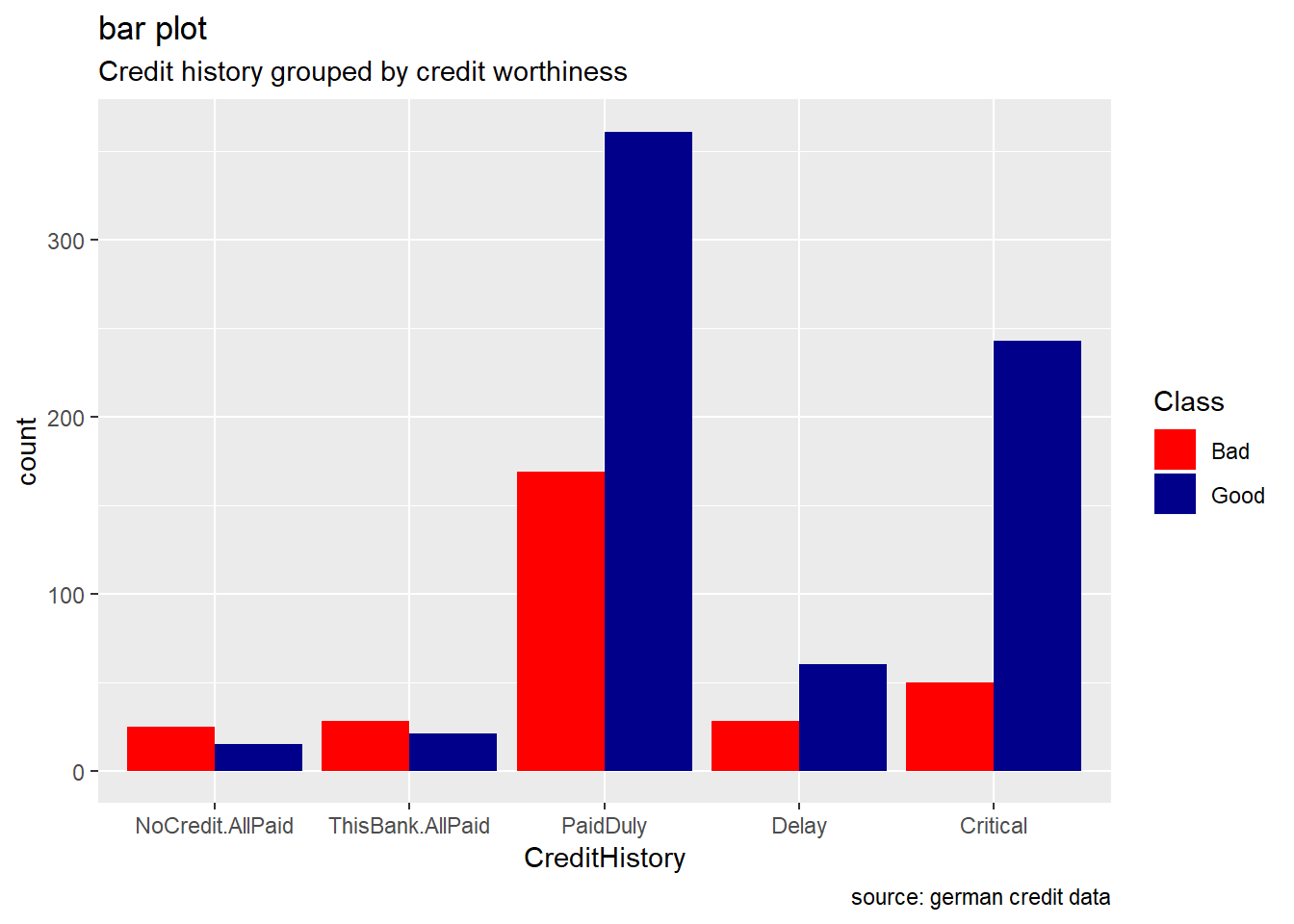

CreditHistory: Generally, credit history’s levels tend to show a higher number of good credit risk than bad credit risk. We observe that proportion of the response variable ,the credit worthiness, changes varies significantly. For categories “NoCredit.AllPaid” and “ThisBank.AllPaid”, we see how the number of bad credit records are greater.

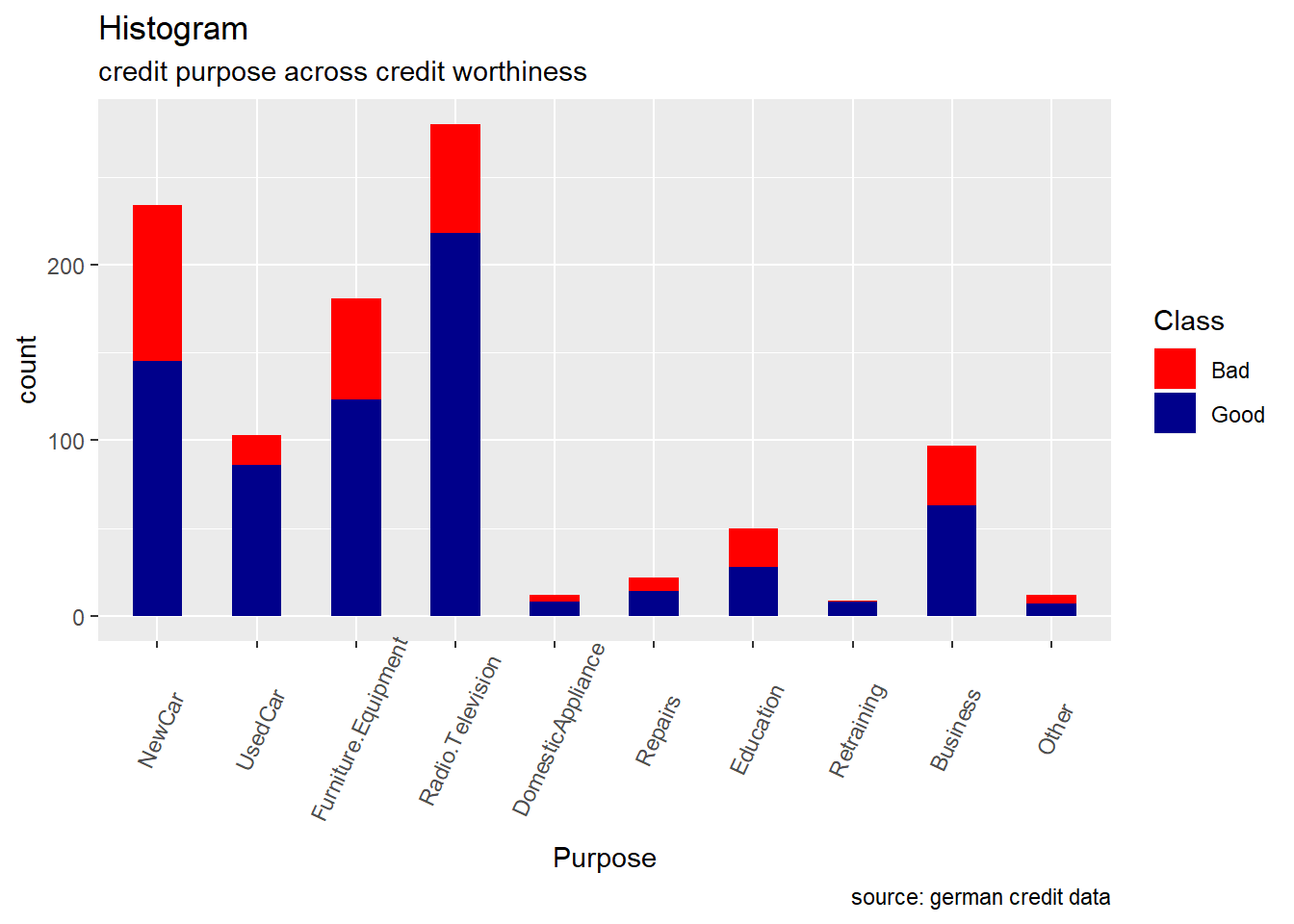

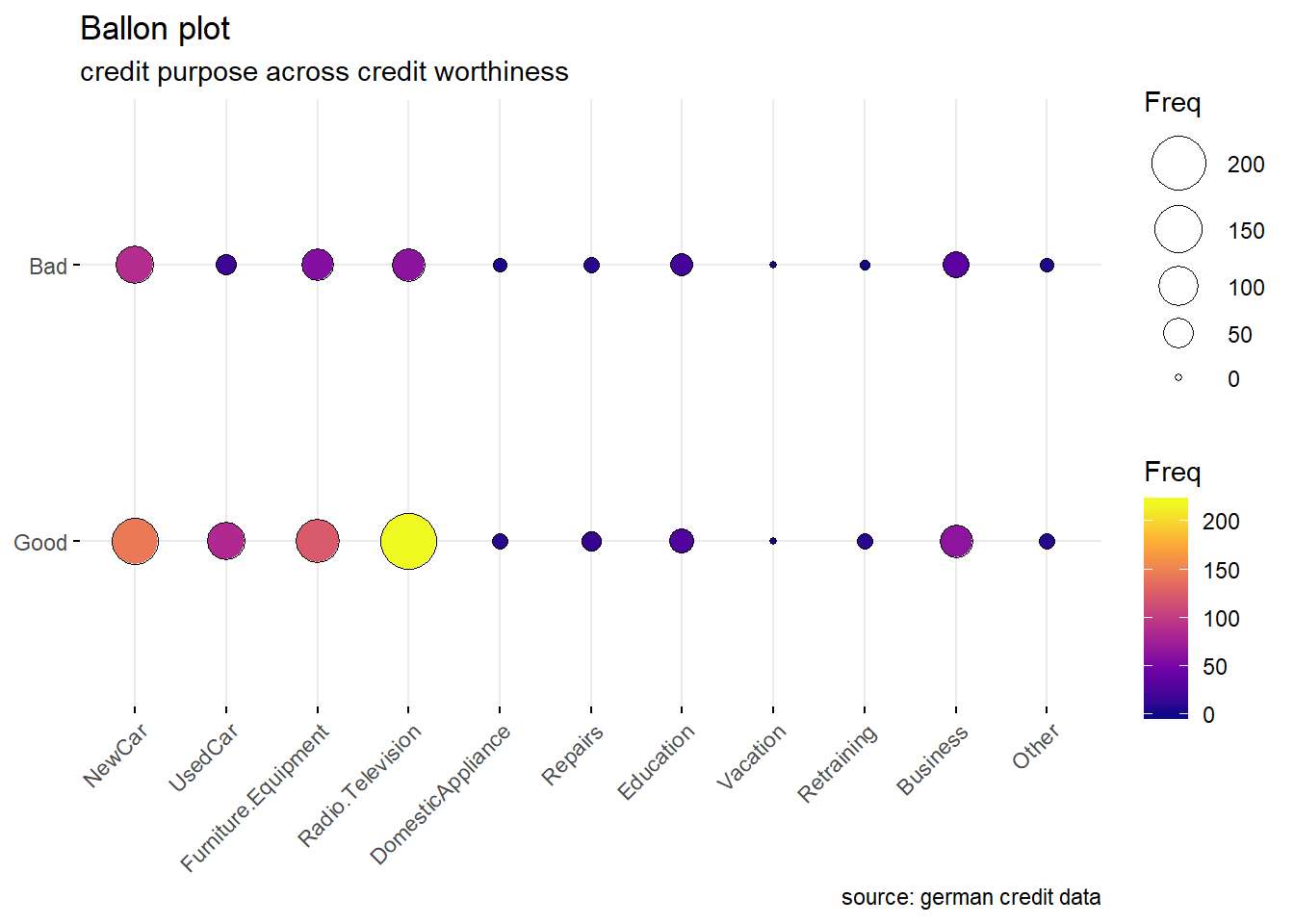

Purpose: For the purpose variable, we observe that the proportion of good and bad credit risk varies significantly across the different credit’s purposes. While the majority of categories show number of good risk records always greater than the bad ones, the categories as DomesticAppliance ,Education , Repairs and others seem to include more risky records. ( the difference between the number of bad and good records is really minimal) (see histogram and ballon plot)

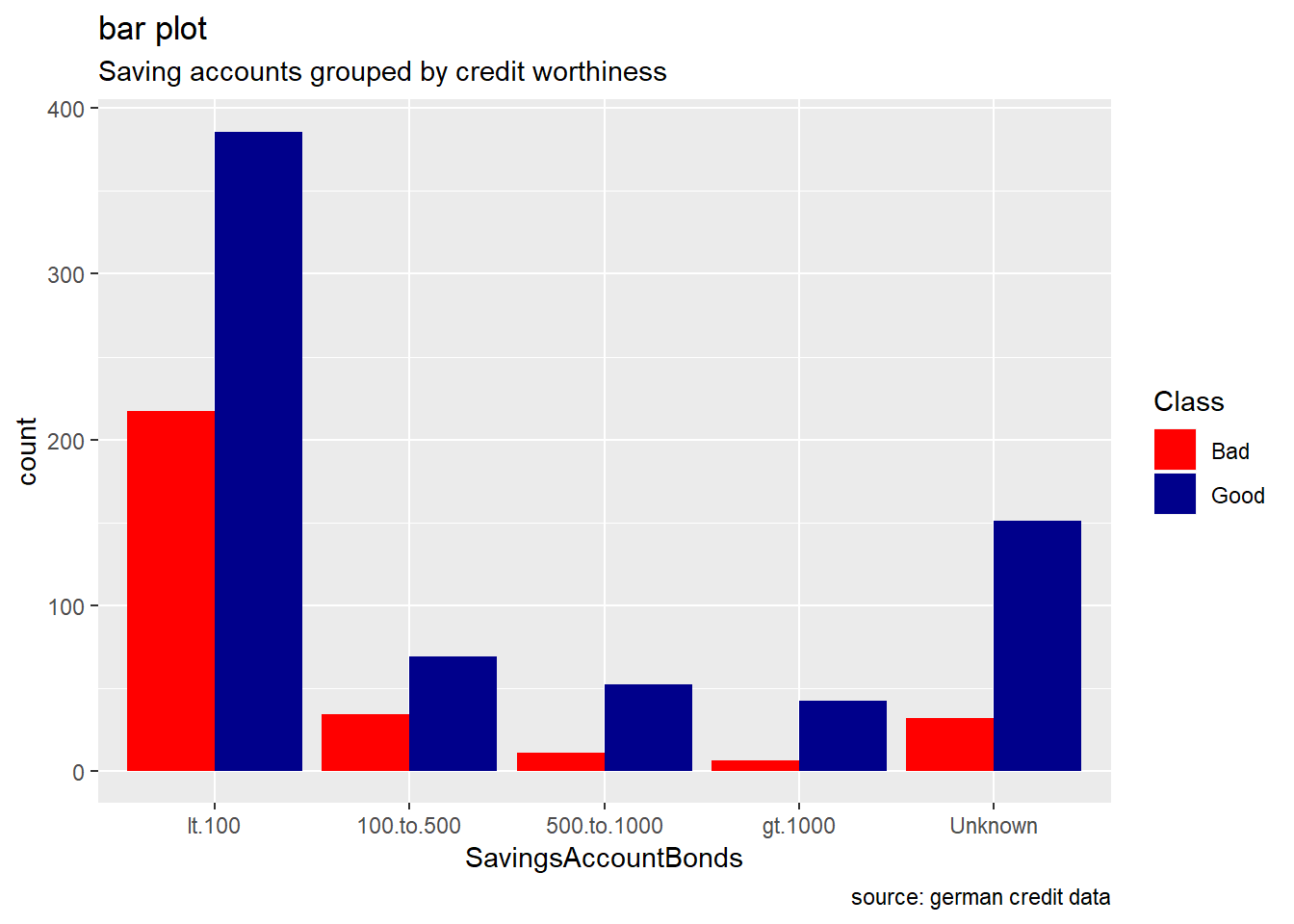

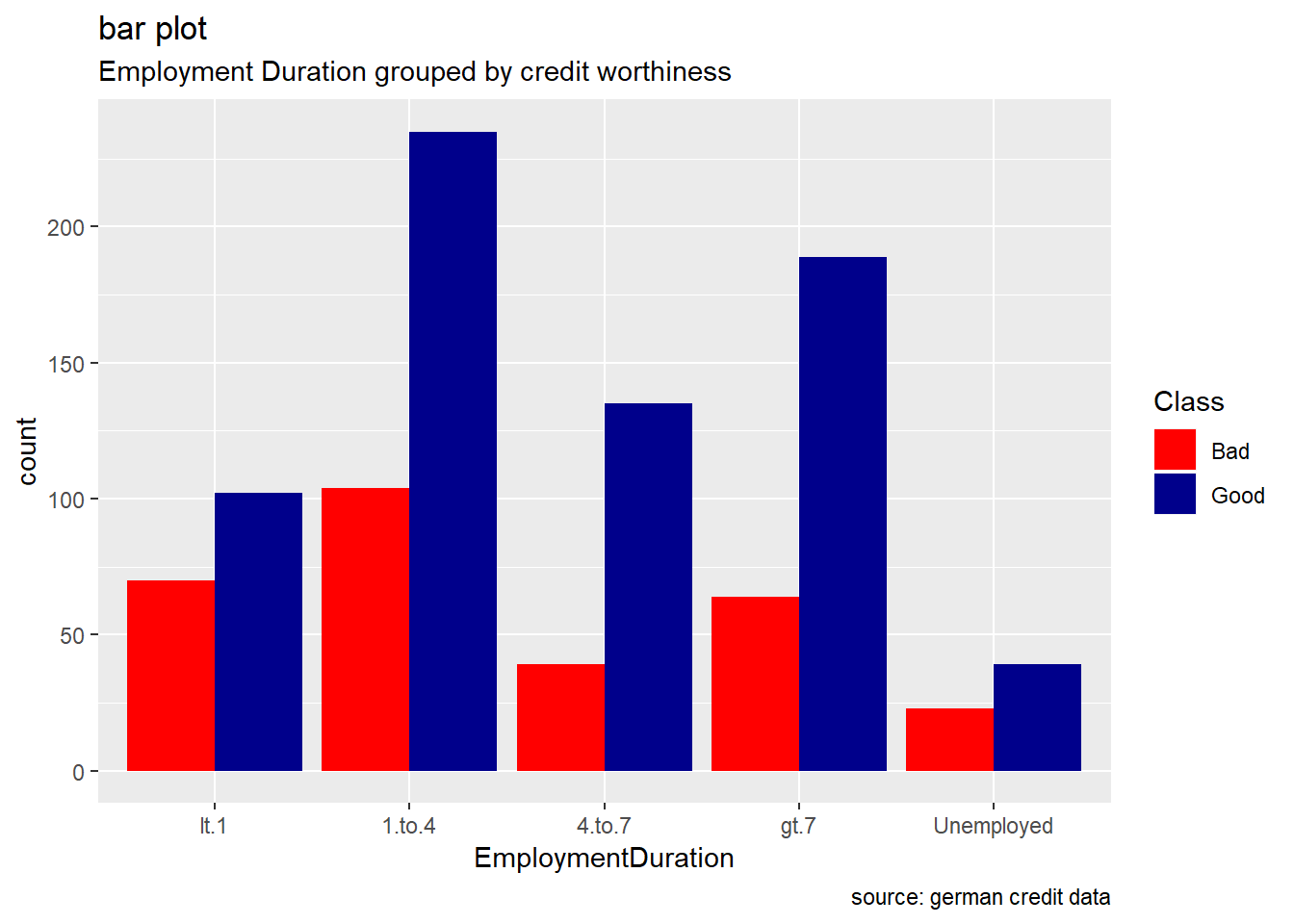

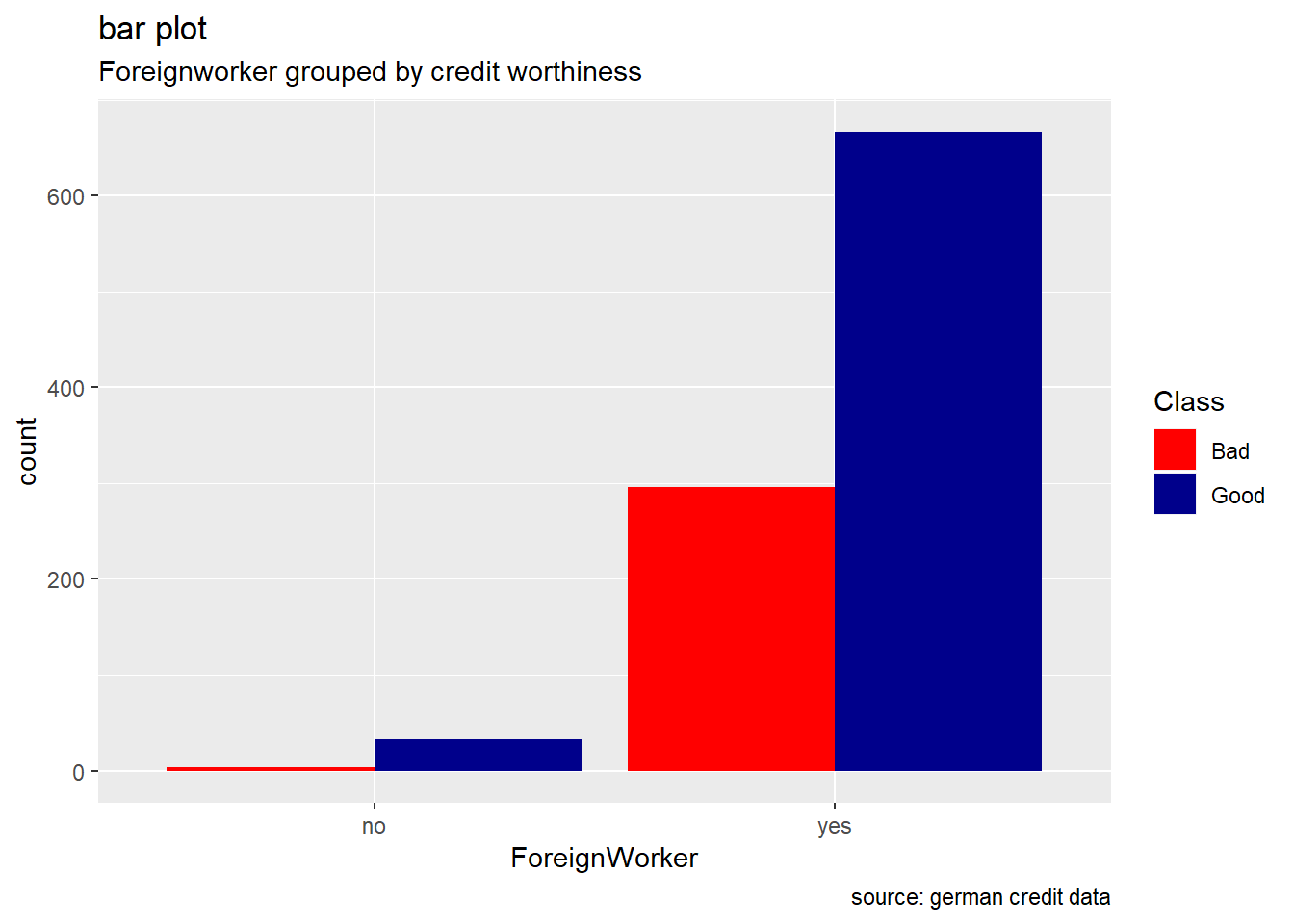

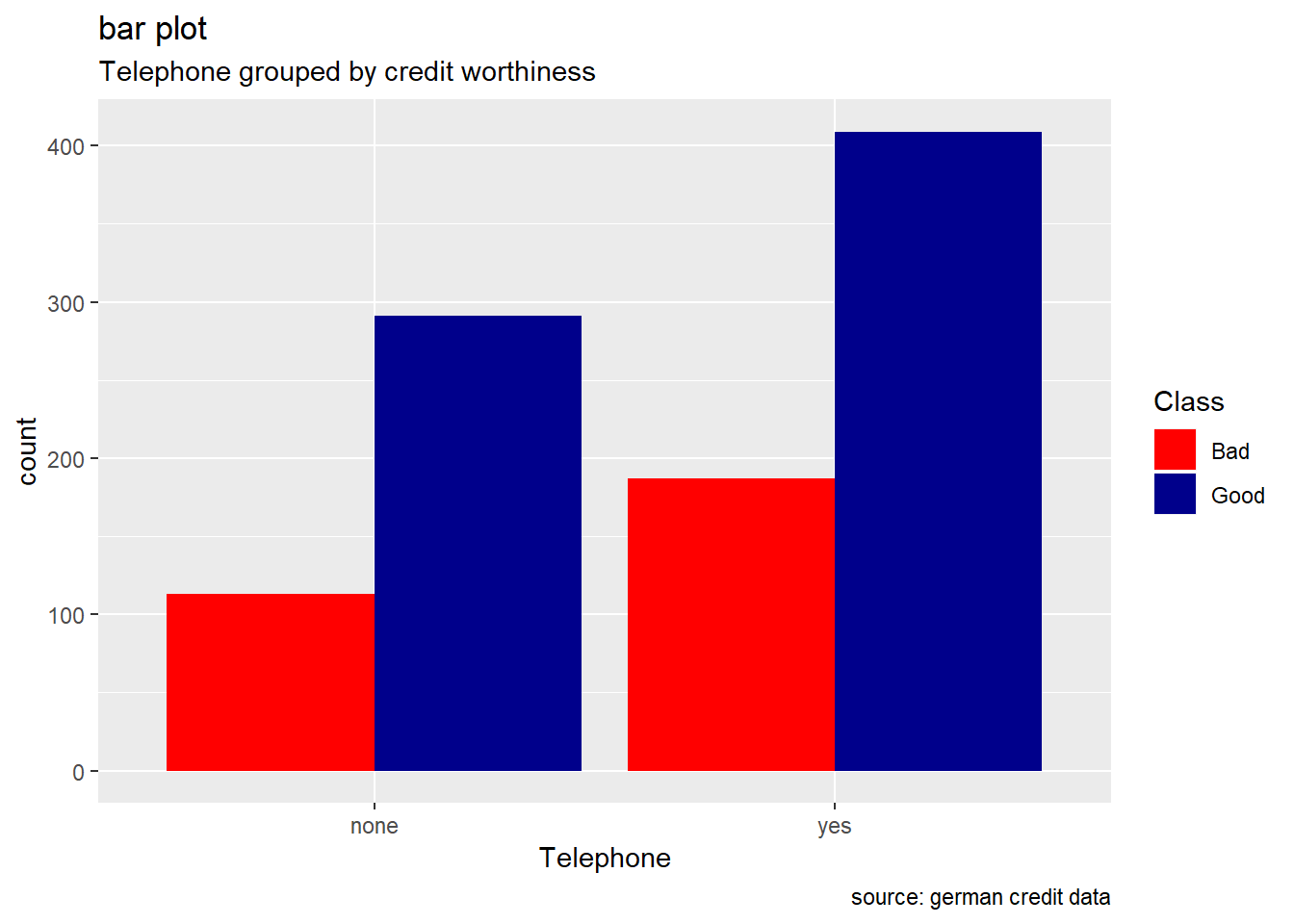

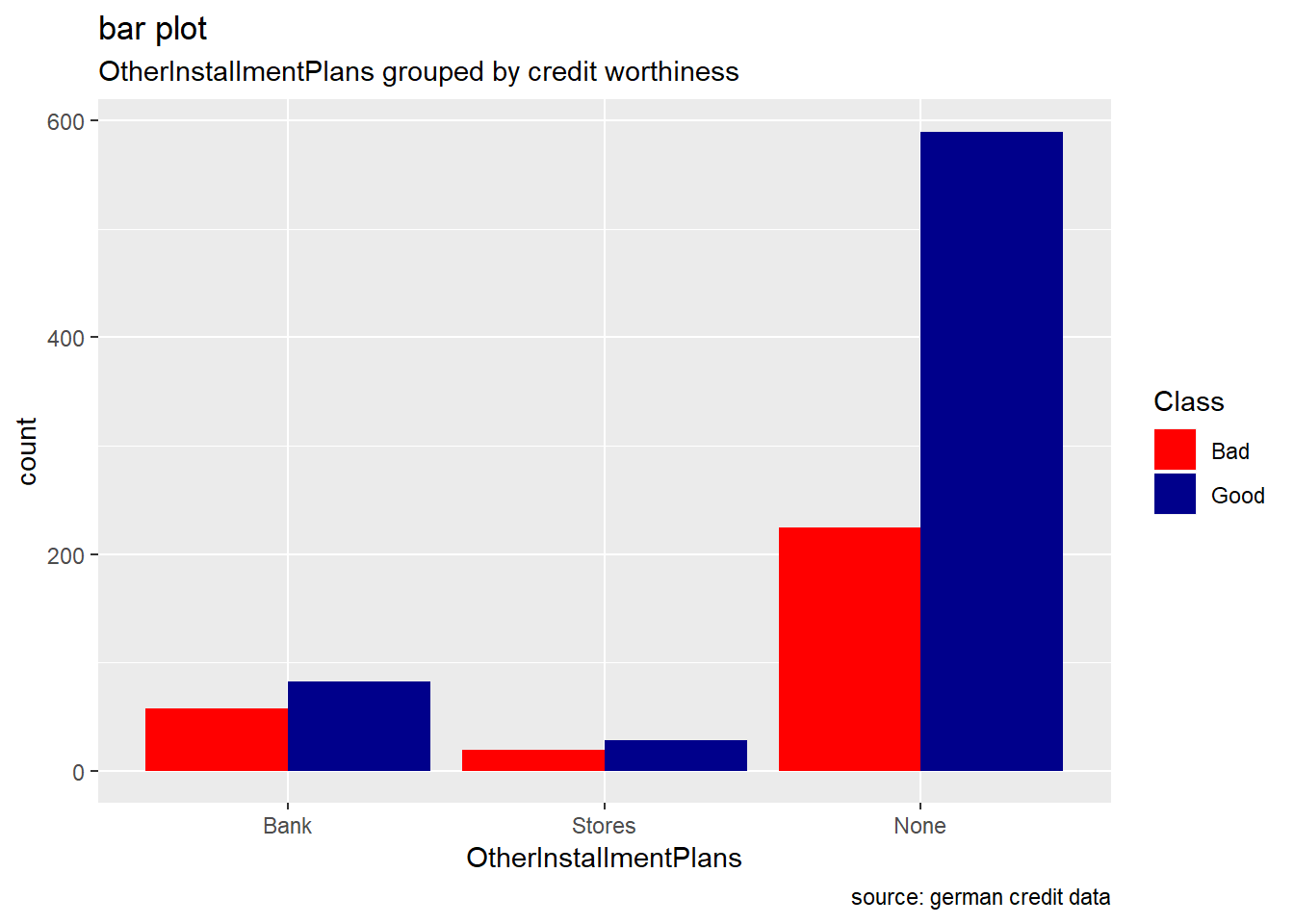

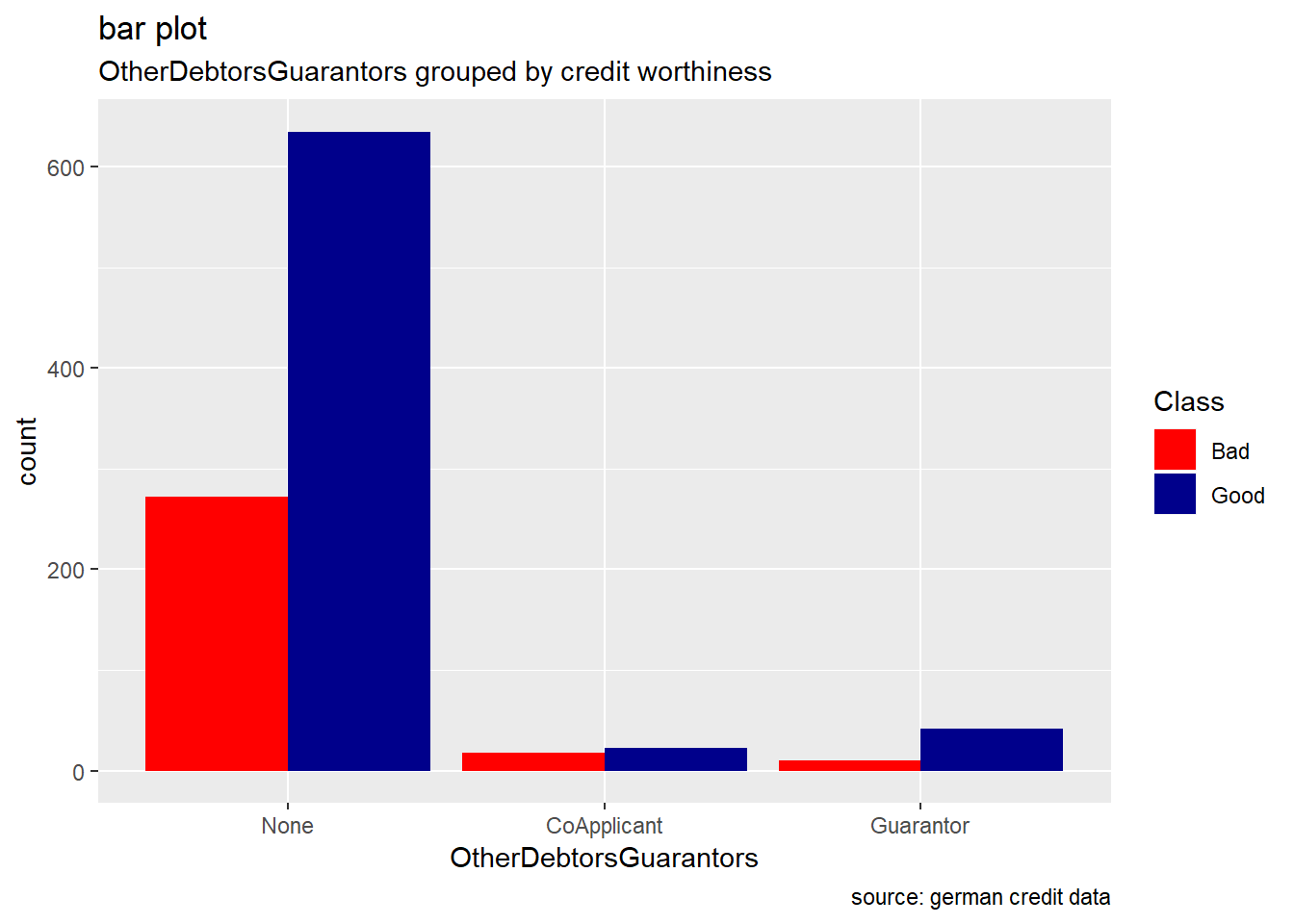

Generally, for the other categorical attributes, we observe a similar trend for which good creditworthiness records are greater than bad ones. Variables like SavingAccountBonds, EmploymentDuration, Personal, ForeignWorker, Housing, Property, or Telephone straightly follow that observation. However, the trend looks more significant for the attributes SavingAccountBonds, telephone,foreign workers, or also EmploymentDuration. Instead, the two features OtherInstallmentPlans and OtherDebtorsGuarantors show more risky records. (see on barplot levels Coapplicant or Guarantor for OtherDebtors , and Stores or Bank for OtherInstall)

CheckingAccountStatus

ggplot(german, aes(CheckingAccountStatus, ..count..)) +

geom_bar(aes(fill = Class), position = "dodge") +

labs(title="bar plot", subtitle="AccountStatus grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

CreditHistory

german %>%

ggplot(aes(CreditHistory, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge") +

labs(title="bar plot", subtitle="Credit history grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

Purpose

german %>%

ggplot(aes(Purpose)) +

geom_bar(aes(fill=Class), width = 0.5) +

theme(axis.text.x = element_text(angle=65, vjust=0.6)) +

labs(title="Histogram",

subtitle="credit purpose across credit worthiness",

caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

purp <- table(german$Purpose,german$Class)

ggballoonplot(as.data.frame(purp), fill = "value",title="Ballon plot",

subtitle="credit purpose across credit worthiness",

caption = "source: german credit data")+

scale_fill_viridis_c(option = "C")

SavingsAccountBonds

german %>%

ggplot(aes(SavingsAccountBonds, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge") +

labs(title="bar plot", subtitle="Saving accounts grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

EmploymentDuration

german %>%

ggplot(aes(EmploymentDuration, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge") +

labs(title="bar plot", subtitle="Employment Duration grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

ForeignWorker

german %>%

ggplot(aes(ForeignWorker, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge") +

labs(title="bar plot", subtitle="Foreignworker grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

Telephone

german %>%

ggplot(aes(Telephone, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge")+

labs(title="bar plot", subtitle="Telephone grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

OtherInstallmentPlans

german %>%

ggplot(aes(OtherInstallmentPlans, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge")+

labs(title="bar plot", subtitle="OtherInstallmentPlans grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

OtherDebtorsGuarantor

german %>%

ggplot(aes(OtherDebtorsGuarantors, ..count..)) +

geom_bar(aes(fill=Class), position ="dodge")+

labs(title="bar plot", subtitle="OtherDebtorsGuarantors grouped by credit worthiness", caption = "source: german credit data") +

scale_fill_manual(values = c("Good" = "darkblue", "Bad" = "red"))

In the following DT table, we can interactively visualize all categorical features and our response variable, credit worthiness (Class) . We can search / filter any keywords or specific entries for each variable.

# i create a dataframe "get_cat" which contains all categorical attributes including the response variable. Then, with the function datatable of DT library , i create HTML widget to display get_num.

get_cat <- select_if(german, is.factor)

DT::datatable(get_cat,rownames = FALSE, filter ="top", options =list(pageLength=10,scrollx=T))

Multivariate analysis.

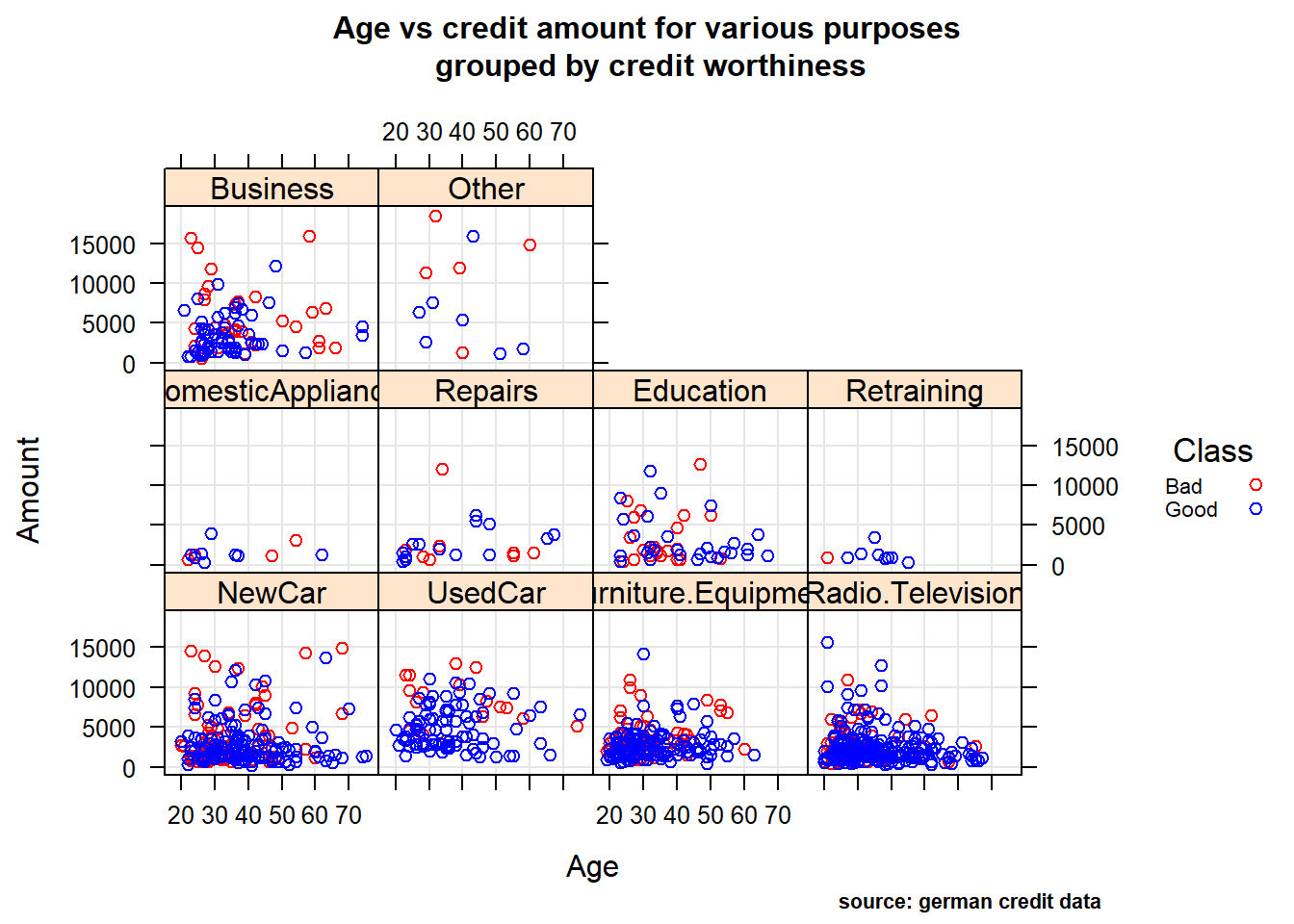

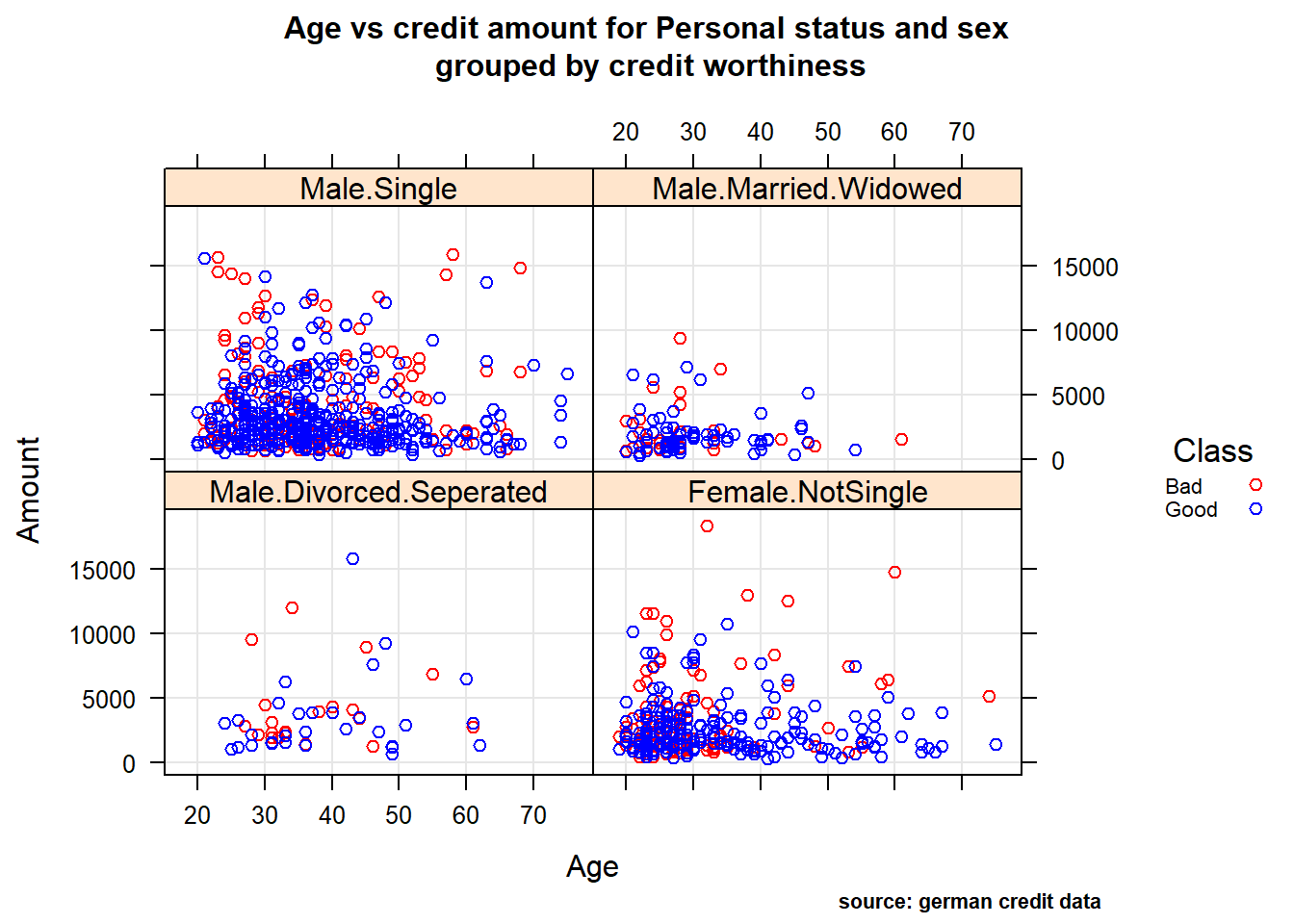

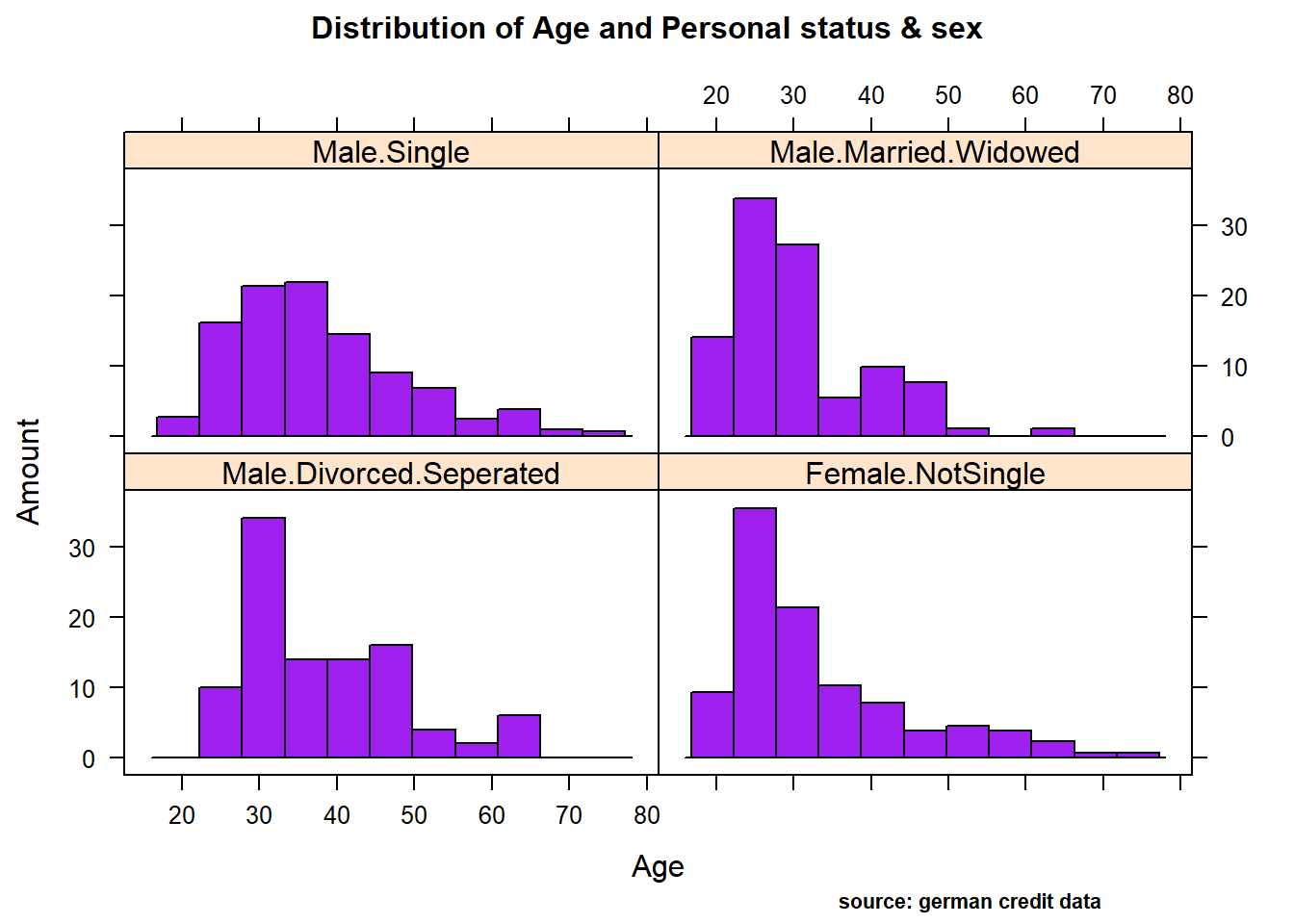

Relationship between quantitative and qualitative features, it’s performed to understand interactions between different fields in the dataset or finding interactions between variables more than 2 (- ex: pair plot and 3D scatter plot). We performed Multi-panel conditioning focusing on the relationship between Age , Amount credit with respect to Purpose and Personal status/sex (i1 , i2) , we also drew a histogram plot of Amount ~ Age conditioned on Personal status/sex variable (i3).

As in Sivakumar(2015), Multivariate data analysis of the latter revealed the following:

(i1), Age vs credit amount for various purpose : As we can see in the first plot ,most of the loans are sought to buy: new car , furniture/equipment, radio/television. It also reveals that surprisingly few people buying used cars have bad rating! And not surprisingly, lower the age of the lonee and higher loan amount correlates to bad credits.

(i2), Age vs credit Amount for Personal status and sex : Male: the second plot reveals that single males tend to borrow more, and as before, younger they are and higher the loan amount corresponds to a bad rating. Instead, married/widowed or divorced/separated Males have shown the least amount of borrowing. Because of this, its difficult to visually observe any trends in these categories. Female: the first obvious observation for females is the absence of data for single women. Its not sure if its lack of data or there were no single women applying for loans- though the second possibility seems unlikely in real life. The next most borrowing after single males category is Female not single :divorced/separated/married. The dominant trend in this category is smaller loan amount, higher the age, better the credit rating.

(i3), Distribution Amount ~ Age conditioned on Personal status/sex :The histogram reveals that there is a right skewed nearly normal trend seen across all Personal Status and Sex categories, with 30 being the age where people in the sample seem to be borrowing the most.

Age vs credit amount for various purpose

mycol <- c("red","blue")

xyplot(Amount ~ Age|Purpose, german,

grid = TRUE,

group= Class,

auto.key = list(points = TRUE, rectangles = FALSE, title="Class",cex=0.7, space = "right"),

main=list(

label="Age vs credit amount for various purposes\n grouped by credit worthiness",

cex=1),

sub= "source: german credit data",

par.settings = c(simpleTheme(col = mycol),list(par.sub.text = list(cex = 0.7, col = "black",x=0.75))))

Age vs credit Amount for Personal status and sex

xyplot(Amount ~ Age |Personal , german,

group = Class,

grid = TRUE,

auto.key = list(points = TRUE, rectangles = FALSE, title="Class",cex=0.7, space = "right"),

main=list(

label="Age vs credit amount for Personal status and sex\n grouped by credit worthiness",

cex=1),

#sub= "source: german credit data"),

sub= "source: german credit data",

par.settings = c(simpleTheme(col = mycol),list(par.sub.text = list(cex = 0.7, col = "black",x=0.75))))

Distribution Amount ~ Age conditioned on Personal status/sex

histogram(Amount ~ Age | Personal,

data = german,

xlab = "Age",

ylab= "Amount",

main= list( label="Distribution of Age and Personal status & sex", cex=1),

col="purple",

sub= "source: german credit data",

par.settings=list(par.sub.text = list(cex = 0.7, col = "black", x=0.75)))

III. Data Preprocessing

Real-life data typically needs to be preprocessed (e.g. cleansed, filtered, transformed) in order to be used by the machine learning techniques in the analysis step.

Credit scoring portfolios are frequently voluminous and they are in the range of several thousand, well over 100000 applicants measured on more than 100 variables are quite common (Hand and Hen ley 1997). These portfolios are characterized by noise, missing values, complexity of distributions and by redundant or irrelevant features (Piramuthu 2006). Clearly, the applicants characteristics will vary from situation to situation: an applicant looking for a small loan will be asked for different information from another who is asking for a big loan. Also, according to Garcia et al(2012), in credit scoring applications the resampling approaches have produced important gains in performance when compared to the use of the imbalanced data sets

Then in this section, we’ll focus mainly on data preprocessing techniques that are of particular importance when performing a credit scoring task. These techniques include Data wrangling( cleansing, filtering, renaming variables, recodifing factors,etc ) , Features selection(filter,wrapper methods) and Data partitioning (stratified random sampling method to correct the imbalanced class).

3.1. Data Wrangling

To perform the different steps of data pre-processing part and successive analysis , we make a copy of the german credit dataset, in order to to keep unchanged our original german credit data .

german.copy <- german3.1.1.

Missing values

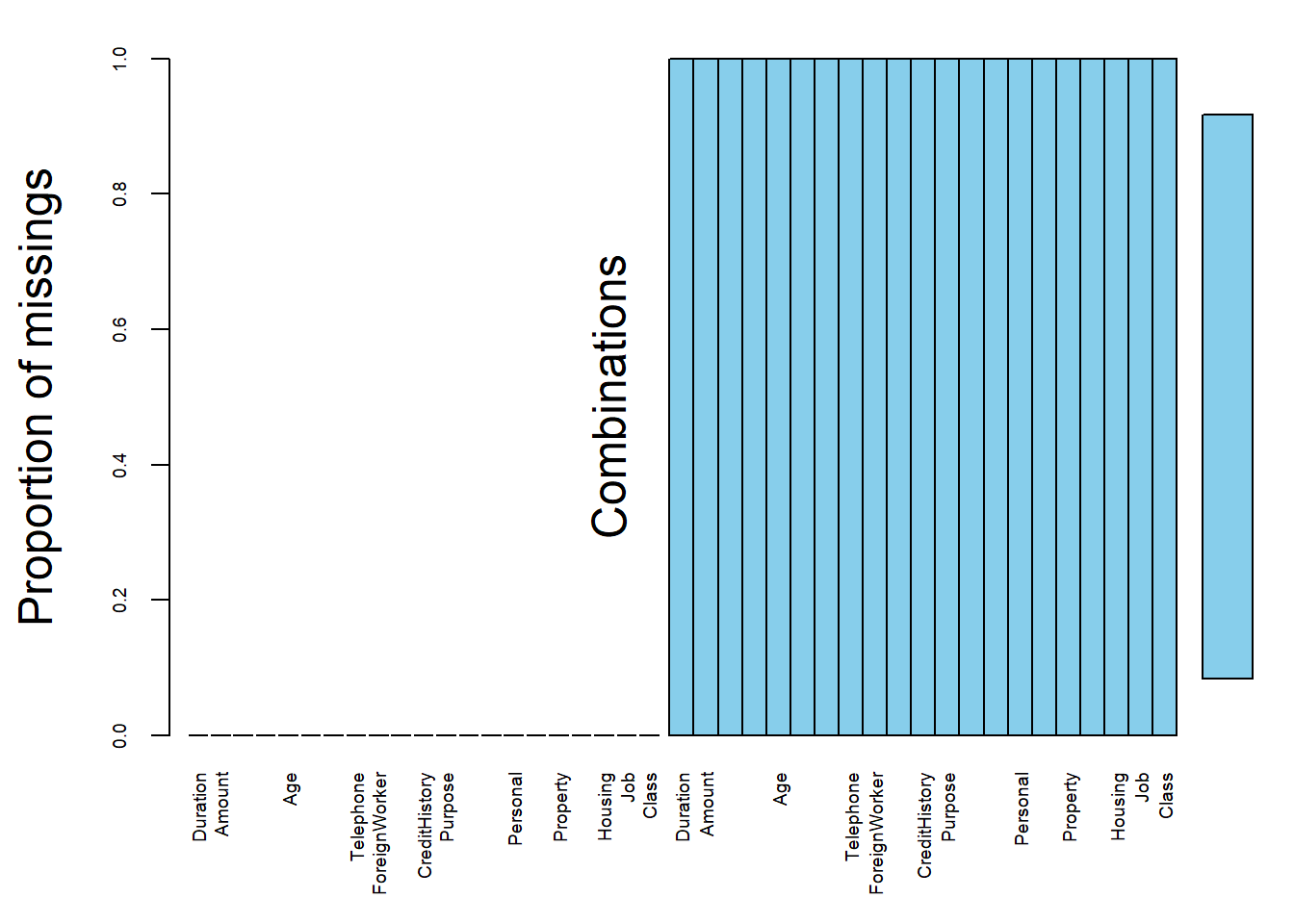

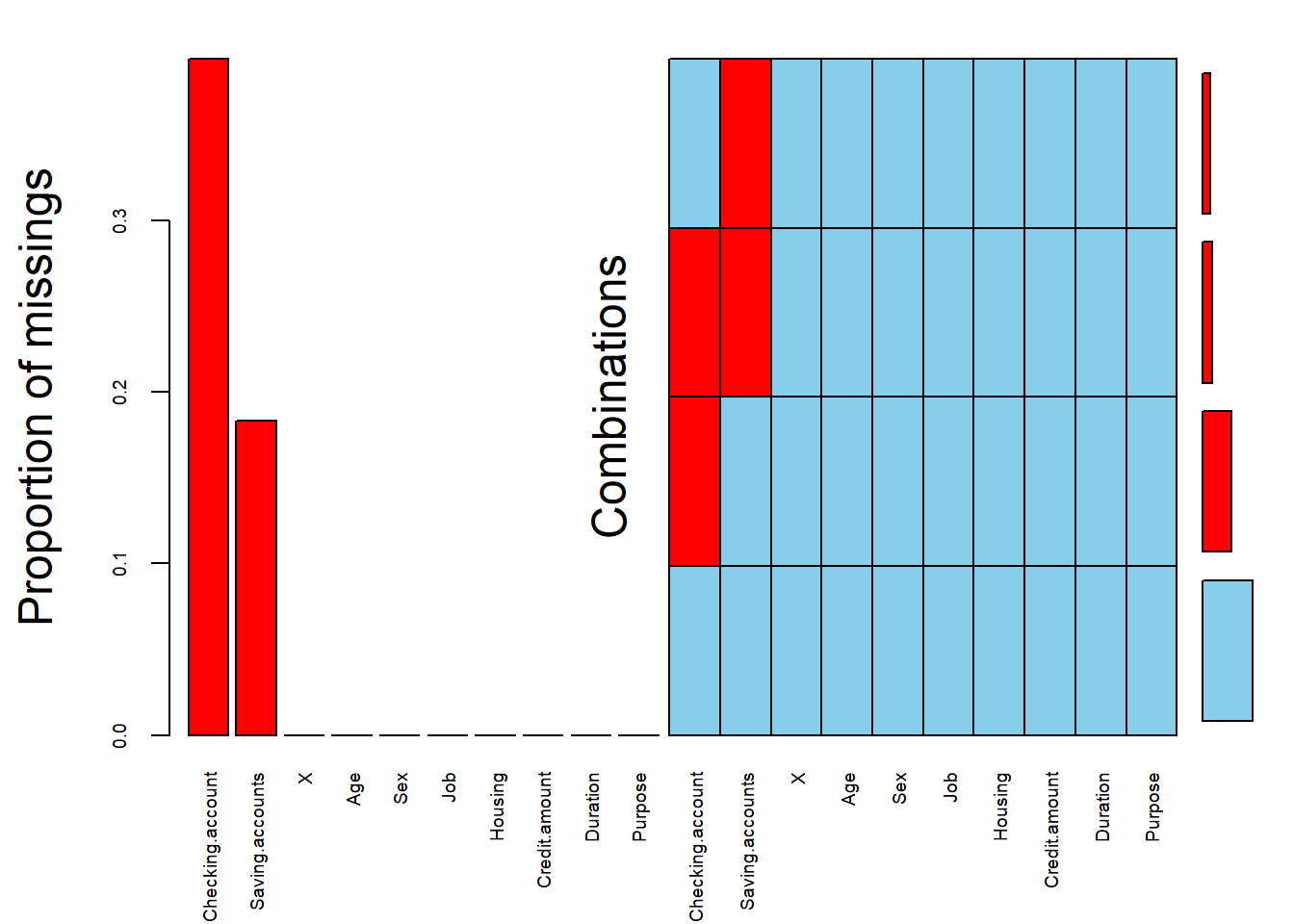

We used the VIM package to explore the data and the structure of the missing or imputed values. Fortunately, We observe that there are no missing values. It’s confirmed not only by the proportion of missings plot , but also by the variable sorted by number of missings output, where each variable have a null count.

If they were missing values, it would be presented with red cells like the case of german credit risk from kaggle downloaded at this link (see second plot)

#aggr function : Calculate or plot the amount of missing/imputed values in each variable and the amount of missing/imputed values in certain combinations of variables.

missing.values <- aggr(german.copy, sortVars = T, prop = T, sortCombs = T,

cex.lab = 1.5, cex.axis = .6, cex.numbers = 5,

combined = F, gap = -.2)

Variables sorted by number of missings:

Variable Count

Duration 0

Amount 0

InstallmentRatePercentage 0

ResidenceDuration 0

Age 0

NumberExistingCredits 0

NumberPeopleMaintenance 0

Telephone 0

ForeignWorker 0

CheckingAccountStatus 0

CreditHistory 0

Purpose 0

SavingsAccountBonds 0

EmploymentDuration 0

Personal 0

OtherDebtorsGuarantors 0

Property 0

OtherInstallmentPlans 0

Housing 0

Job 0

Class 0#german credit data from kaggle

GCD <- read.csv(file="german_credit_data.csv", header=TRUE, sep=",")

germanKaggle_NA <- aggr(GCD, sortVars = T, prop = T, sortCombs = T,

cex.lab = 1.5, cex.axis = .6, cex.numbers = 5,

combined = F, gap = -.2)

Variables sorted by number of missings:

Variable Count

Checking.account 0.394

Saving.accounts 0.183

X 0.000

Age 0.000

Sex 0.000

Job 0.000

Housing 0.000

Credit.amount 0.000

Duration 0.000

Purpose 0.0003.1.2.

Renaming variables

Renaming data columns is a common task in Data pre-processing that can make implementing ML algorithms/ writing code faster by using short, intuitive names. The dplyr function rename() makes this easy.

#rename(data, new_name = `old_name`)

german.copy = rename( german.copy,

Risk = `Class`,

installment_rate = `InstallmentRatePercentage`,

present_resid = `ResidenceDuration`,

n_credits = `NumberExistingCredits`,

n_people = `NumberPeopleMaintenance`,

check_acct = `CheckingAccountStatus`,

credit_hist = `CreditHistory`,

savings_acct = `SavingsAccountBonds`,

present_emp = `EmploymentDuration`,

status_sex = `Personal`,

other_debt = `OtherDebtorsGuarantors`,

other_install = `OtherInstallmentPlans`

)

#get glimpse of data

glimpse(german.copy)Observations: 1,000

Variables: 21

$ Duration <dbl> 6, 48, 12, 42, 24, 36, 24, 36, 12, 30, 12, 48...

$ Amount <dbl> 1169, 5951, 2096, 7882, 4870, 9055, 2835, 694...

$ installment_rate <dbl> 4, 2, 2, 2, 3, 2, 3, 2, 2, 4, 3, 3, 1, 4, 2, ...

$ present_resid <dbl> 4, 2, 3, 4, 4, 4, 4, 2, 4, 2, 1, 4, 1, 4, 4, ...

$ Age <dbl> 67, 22, 49, 45, 53, 35, 53, 35, 61, 28, 25, 2...

$ n_credits <dbl> 2, 1, 1, 1, 2, 1, 1, 1, 1, 2, 1, 1, 1, 2, 1, ...

$ n_people <dbl> 1, 1, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

$ Telephone <fct> none, yes, yes, yes, yes, none, yes, none, ye...

$ ForeignWorker <fct> yes, yes, yes, yes, yes, yes, yes, yes, yes, ...

$ check_acct <fct> lt.0, 0.to.200, none, lt.0, lt.0, none, none,...

$ credit_hist <fct> Critical, PaidDuly, Critical, PaidDuly, Delay...

$ Purpose <fct> Radio.Television, Radio.Television, Education...

$ savings_acct <fct> Unknown, lt.100, lt.100, lt.100, lt.100, Unkn...

$ present_emp <fct> gt.7, 1.to.4, 4.to.7, 4.to.7, 1.to.4, 1.to.4,...

$ status_sex <fct> Male.Single, Female.NotSingle, Male.Single, M...

$ other_debt <fct> None, None, None, Guarantor, None, None, None...

$ Property <fct> RealEstate, RealEstate, RealEstate, Insurance...

$ other_install <fct> None, None, None, None, None, None, None, Non...

$ Housing <fct> Own, Own, Own, ForFree, ForFree, ForFree, Own...

$ Job <fct> SkilledEmployee, SkilledEmployee, UnskilledRe...

$ Risk <fct> Good, Bad, Good, Good, Bad, Good, Good, Good,...3.1.3.

Skewness

Skewness is defined to be the third standardized central moment .You can easily tell if a distribution is skewed by simple visualization. There are different ways may help to remove skewness such as log, square root or inverse. However it is often difficult to determine from plots which transformation is most appropriate for correcting skewness. The Box-Cox procedure automatically identified a transformation from the family of power transformations that are indexed by a parameter \(λ\).

This family includes:

- log transformation \((λ=0)\)

- square transformation \((λ=2)\)

- square root \((λ=0.5)\)

- inverse \((λ=−1)\)

We used preProcess() function in caret to apply this transformation by changing the method argument to BoxCox.

mySkew <- preProcess(german.copy, method = c("BoxCox"))

mySkew## Created from 1000 samples and 20 variables

##

## Pre-processing:

## - Box-Cox transformation (6)

## - ignored (14)

##

## Lambda estimates for Box-Cox transformation:

## 0.1, -0.1, 1.5, 1.1, -0.7, -2mySkew$method## $BoxCox

## [1] "Duration" "Amount" "installment_rate"

## [4] "present_resid" "Age" "n_credits"

##

## $ignore

## [1] "Telephone" "ForeignWorker" "check_acct" "credit_hist"

## [5] "Purpose" "savings_acct" "present_emp" "status_sex"

## [9] "other_debt" "Property" "other_install" "Housing"

## [13] "Job" "Risk"mySkew$bc## $Duration

## Box-Cox Transformation

##

## 1000 data points used to estimate Lambda

##

## Input data summary:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 4.0 12.0 18.0 20.9 24.0 72.0

##

## Largest/Smallest: 18

## Sample Skewness: 1.09

##

## Estimated Lambda: 0.1

## With fudge factor, Lambda = 0 will be used for transformations

##

##

## $Amount

## Box-Cox Transformation

##

## 1000 data points used to estimate Lambda

##

## Input data summary:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 250 1366 2320 3271 3972 18424

##

## Largest/Smallest: 73.7

## Sample Skewness: 1.94

##

## Estimated Lambda: -0.1

## With fudge factor, Lambda = 0 will be used for transformations

##

##

## $installment_rate

## Box-Cox Transformation

##

## 1000 data points used to estimate Lambda

##

## Input data summary:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 1.000 2.000 3.000 2.973 4.000 4.000

##

## Largest/Smallest: 4

## Sample Skewness: -0.53

##

## Estimated Lambda: 1.5

##

##

## $present_resid

## Box-Cox Transformation

##

## 1000 data points used to estimate Lambda

##

## Input data summary:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 1.000 2.000 3.000 2.845 4.000 4.000

##

## Largest/Smallest: 4

## Sample Skewness: -0.272

##

## Estimated Lambda: 1.1

## With fudge factor, no transformation is applied

##

##

## $Age

## Box-Cox Transformation

##

## 1000 data points used to estimate Lambda

##

## Input data summary:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 19.00 27.00 33.00 35.55 42.00 75.00

##

## Largest/Smallest: 3.95

## Sample Skewness: 1.02

##

## Estimated Lambda: -0.7

##

##

## $n_credits

## Box-Cox Transformation

##

## 1000 data points used to estimate Lambda

##

## Input data summary:

## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 1.000 1.000 1.000 1.407 2.000 4.000

##

## Largest/Smallest: 4

## Sample Skewness: 1.27

##

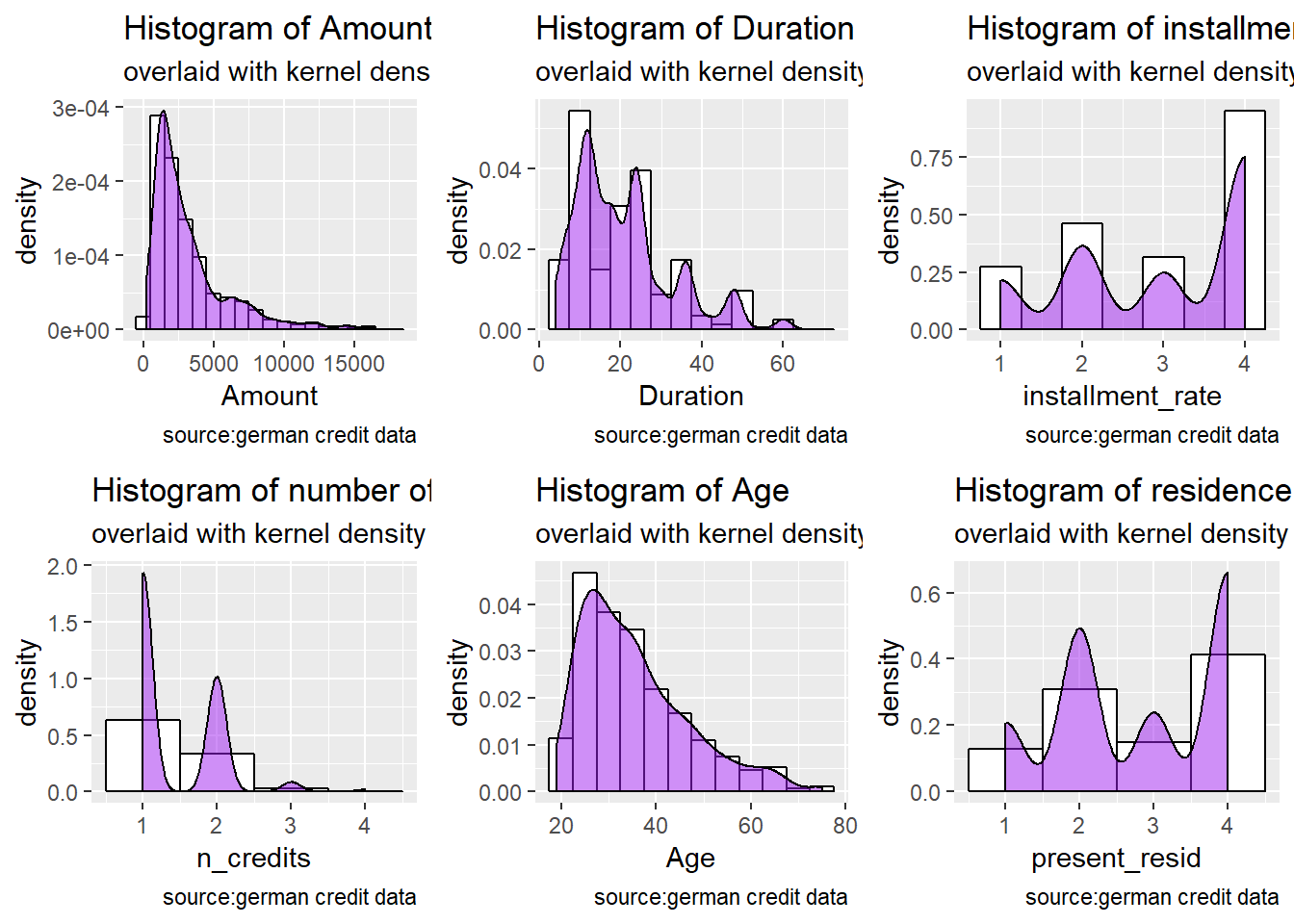

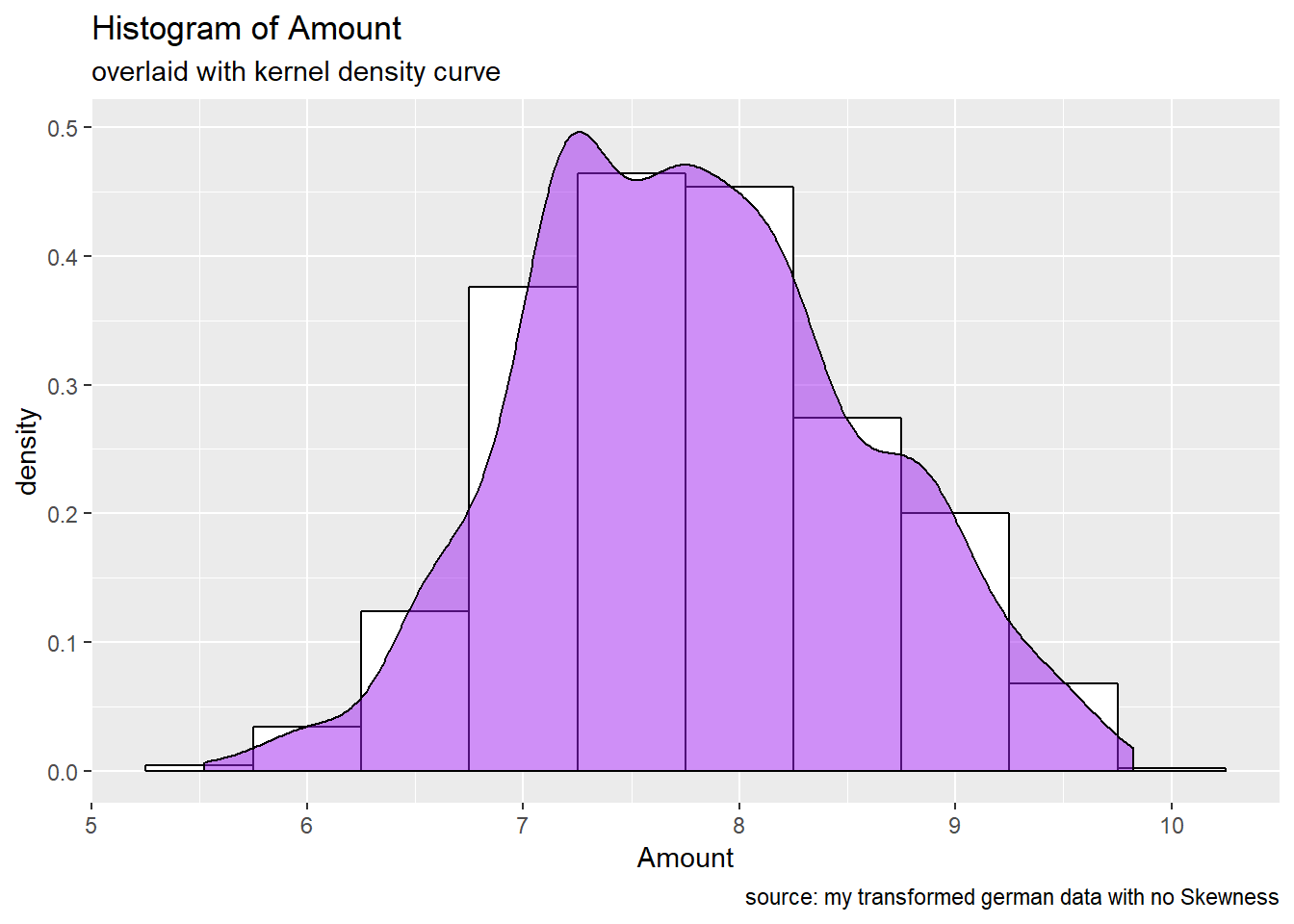

## Estimated Lambda: -2We visually inspected the skewness by plotting histogram of the 6 numerical attributes inclined to a BoxCox transformation ( see mySkew$method)

p1<- german.copy %>%

ggplot(aes(x=Amount)) +

geom_histogram(aes(y=..density..), # Histogram with density

binwidth=1000,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of Amount", subtitle= "overlaid with kernel density curve", caption = "source:german credit data")

p2<- german.copy %>%

ggplot(aes(x=installment_rate)) +

geom_histogram(aes(y=..density..), # Histogram with density

binwidth=.5,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of installment rate percentage", subtitle= "overlaid with kernel density curve", caption = "source:german credit data")

p3 <- german.copy %>%

ggplot(aes(x=Duration)) +

geom_histogram(aes(y=..density..), # Histogram with density instead y-axis count

binwidth=5,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of Duration in months", subtitle= "overlaid with kernel density curve", caption = "source:german credit data")

p4 <- german.copy %>%

ggplot(aes(x=n_credits)) +

geom_histogram(aes(y=..density..),

binwidth=1,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of number of existing credits", subtitle= "overlaid with kernel density curve", caption = "source:german credit data")

p5 <- german.copy %>%

ggplot(aes(x=Age)) +

geom_histogram(aes(y=..density..), # Histogram with density instead of count on y-axis

binwidth=5,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of Age", subtitle= "overlaid with kernel density curve", caption = "source:german credit data")

p6 <- german.copy %>%

ggplot(aes(x=present_resid)) +

geom_histogram(aes(y=..density..),

binwidth=1,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of residence duration", subtitle= "overlaid with kernel density curve", caption = "source:german credit data")

#plot all

grid.arrange(p1, p3, p2, p4, p5, p6, ncol=3)

The previous plots confirm the skweness distributions of 6 numerical attributes inclined to a BoxCox transformation. More over, The output of mySkew, the applied BoxCoxTrans , shows the sample size (1000), number of variables (20) and the \(λ\) estimates for Box-Cox transformation candidate variables (6).

Do we have to apply a transformation/ is it useful to resolve skewness for the purpose of our work? : The output of mySkew$bc reveals that the Amount (1.94) , n_credits (1.27) and Duration (1.09) attributes have the higher values of skweness and precises that Lambda = 0 will be used for transformations for Amount and Duration attributes . Numerical features such as n_credits, present_resid, Age, Duration are seen more as discrete variables. The Amount attribute remains the only good candidate to apply a log transformation.

predict() method applies the results to a data frame myTransformed. In the latter,we can find the transformed Amount variable and other BoxCox transformatons applied to the other numerical attributes. We’ll take it account for our successive analysis only if necessary.

myTransformed <- predict(mySkew, german.copy)

glimpse(myTransformed)Observations: 1,000

Variables: 21

$ Duration <dbl> 1.791759, 3.871201, 2.484907, 3.737670, 3.178...

$ Amount <dbl> 7.063904, 8.691315, 7.647786, 8.972337, 8.490...

$ installment_rate <dbl> 4.666667, 1.218951, 1.218951, 1.218951, 2.797...

$ present_resid <dbl> 4, 2, 3, 4, 4, 4, 4, 2, 4, 2, 1, 4, 1, 4, 4, ...

$ Age <dbl> 1.353297, 1.264435, 1.334866, 1.329110, 1.339...

$ n_credits <dbl> 0.3750000, 0.0000000, 0.0000000, 0.0000000, 0...

$ n_people <dbl> 1, 1, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

$ Telephone <fct> none, yes, yes, yes, yes, none, yes, none, ye...

$ ForeignWorker <fct> yes, yes, yes, yes, yes, yes, yes, yes, yes, ...

$ check_acct <fct> lt.0, 0.to.200, none, lt.0, lt.0, none, none,...

$ credit_hist <fct> Critical, PaidDuly, Critical, PaidDuly, Delay...

$ Purpose <fct> Radio.Television, Radio.Television, Education...

$ savings_acct <fct> Unknown, lt.100, lt.100, lt.100, lt.100, Unkn...

$ present_emp <fct> gt.7, 1.to.4, 4.to.7, 4.to.7, 1.to.4, 1.to.4,...

$ status_sex <fct> Male.Single, Female.NotSingle, Male.Single, M...

$ other_debt <fct> None, None, None, Guarantor, None, None, None...

$ Property <fct> RealEstate, RealEstate, RealEstate, Insurance...

$ other_install <fct> None, None, None, None, None, None, None, Non...

$ Housing <fct> Own, Own, Own, ForFree, ForFree, ForFree, Own...

$ Job <fct> SkilledEmployee, SkilledEmployee, UnskilledRe...

$ Risk <fct> Good, Bad, Good, Good, Bad, Good, Good, Good,...p1_new <- myTransformed %>%

ggplot(aes(x=Amount)) +

geom_histogram(aes(y=..density..),

binwidth=.5,

colour="black", fill="white") +

geom_density(alpha=.5, fill="purple") + # Overlay with transparent density plot

labs(title= "Histogram of Amount", subtitle= "overlaid with kernel density curve",

caption = "source: my transformed german data with no Skewness")

p1_new

Before to continue with the Feature selection step, we are going to recode the response variable Risk, by creating a new variable (numerical) risk_bin where 0 corresponds to a Bad credit worthiness and 1 to a Good credit worthiness. We didn’t eliminate the original Risk outcome , they can be both useful for successive analysis depending of the Machine learning model we’ll test.

german.copy <- german.copy %>%

mutate(risk_bin = ifelse(Risk == "Bad",0,1))3.2. Features selection

According to HA & Nguyen (2016), there are two different categories of feature selection methods: filter and wrapper methods.

3.2.1.

filter methods

The filter approach considers the feature selection process as a precursor stage of learning algorithms. The filter model uses evaluation functions to evaluate the classification performances of subsets of features. There are many evaluation functions such as feature importance, Gini, information gain, filtering, etc. A disadvantage of this approach is that there is no direct relationship between the feature selection process and the performance of learning algorithms.

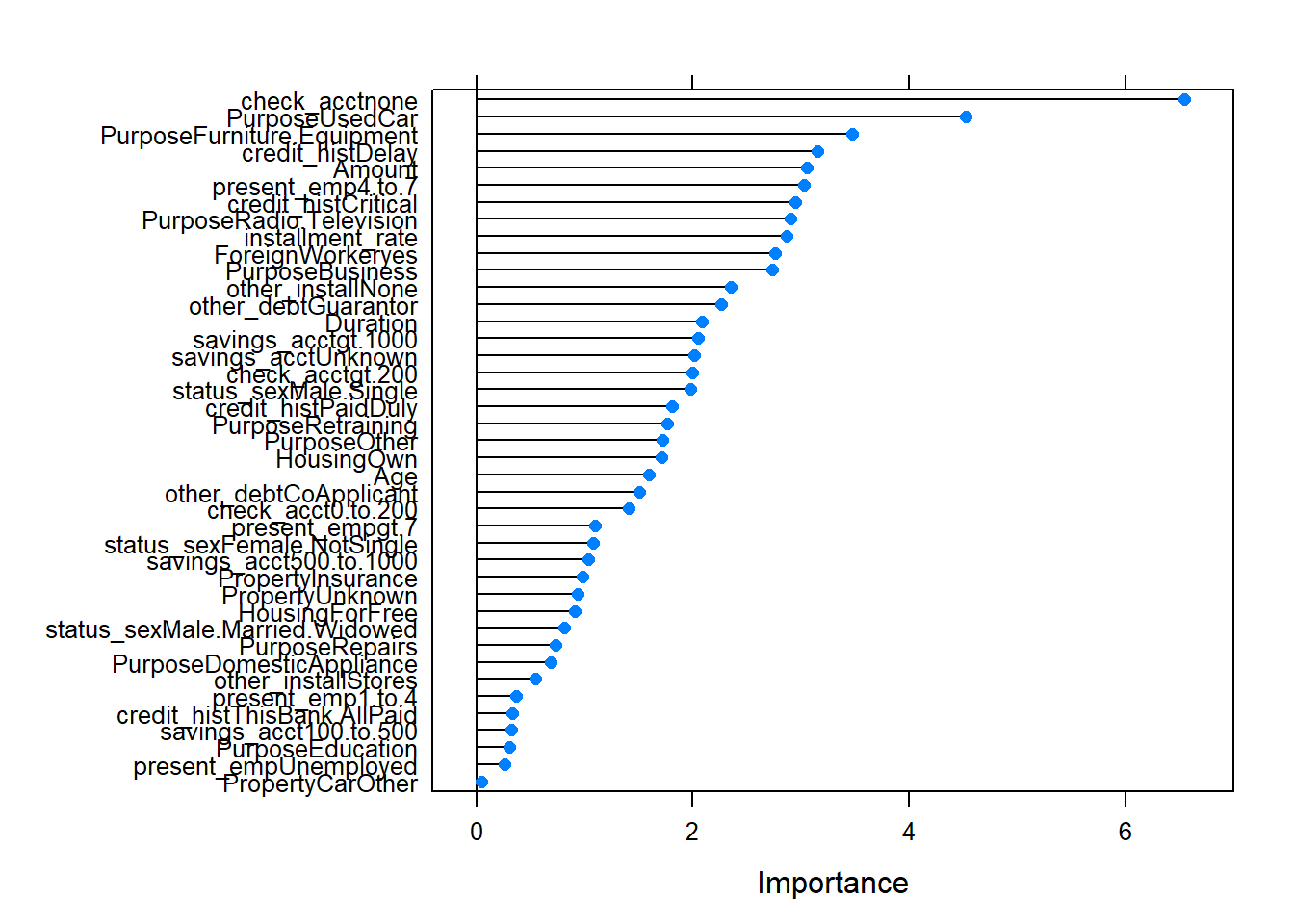

- sbf (selection by filtering, caret package):

The caret function sbf (for selection by filter) can be used to cross-validate such feature selection schemes. It’s a part of Univariate filters .They pre-screen the predictors using simple univariate statistical methods.As an example, it has been suggested for classification models, that predictors can be filtered by conducting some sort of k-sample test (where k is the number of classes) to see if the mean of the predictor is different between the classes. Wilcoxon tests, t-tests and ANOVA models are sometimes used. Predictors that have statistically significant differences between the classes are then used for modeling.For more details, read this

filterCtrl <- sbfControl(functions = rfSBF, method = "repeatedcv", repeats = 5)

set.seed(1)

rfWithFilter <- sbf( Risk ~ ., german.copy[,-22], sbfControl = filterCtrl)

rfWithFilter##

## Selection By Filter

##

## Outer resampling method: Cross-Validated (10 fold, repeated 5 times)

##

## Resampling performance:

##

## Accuracy Kappa AccuracySD KappaSD

## 0.743 0.3189 0.03666 0.09716

##

## Using the training set, 23 variables were selected:

## Duration, Amount, installment_rate, Age, ForeignWorkeryes...

##

## During resampling, the top 5 selected variables (out of a possible 30):

## Age (100%), Amount (100%), check_acct0.to.200 (100%), check_acctnone (100%), credit_histCritical (100%)

##

## On average, 21.8 variables were selected (min = 19, max = 25)The output of Model fitting after applying sbf univariate filters tell us that the top 5 selected variables are : Age (100%), Amount (100%), check_acct0.to.200 (100%), check_acctnone (100%), credit_histCritical (100%).Let’s try another method to assert or rebut the latter findings.

- WOE,IV (Information package) :

Weight of Evidence(WOE): WOE shows predictive power of an independent variable in relation to dependent variable. It evolved with credit scoring to magnify separation power between a good customer and a bad customer, hence it is one of the measures of separation between two classes(good/bad, yes/no, 0/1, A/B, response/no-response). It is defined as: \[WOE = ln(\frac{Distribution.of.nonEvents(Good)}{Distribution.of.Events(Bad)})\] .It is computed from the basic odds ratio: (Distribution of Good Credit Outcomes) / (Distribution of Bad Credit Outcomes)

Some benefits of WOE lie in the fact that it can treat outliers (Suppose you have a continuous variable such as annual salary and extreme values are more than 500 million dollars. These values would be grouped to a class of (let’s say 250-500 million dollars). Later, instead of using the raw values, we would be using WOE scores of each classes.) and handle missing values as missing values can be binned separately. Also, It handles both continuous and categorical variables so there is no need for dummy variables.

Information value(IV): Information value is one of the most useful technique to select important variables in a predictive model. It helps to rank variables on the basis of their importance. The IV is calculated using the following formula : \[IV =\sum(\%nonEvents - \%events)* WOE\]

According to Siddiqi (2006), by convention the values of the \(IV\) statistic in credit scoring can be interpreted as follows.

If the \(IV\) statistic is:

- Less than 0.02, then the predictor is not useful for modeling (separating the Goods from the Bads)

- 0.02 to 0.1, then the predictor has only a weak relationship to the Goods/Bads odds ratio

- 0.1 to 0.3, then the predictor has a medium strength relationship to the Goods/Bads odds ratio

- 0.3 to 0.5, then the predictor has a strong relationship to the Goods/Bads odds ratio.

- greater than 0.5, suspicious relationship (Check once)

IV <- create_infotables(data=german.copy[,-21], NULL, y="risk_bin", bins=10)

IV$Summary$IV <- round(IV$Summary$IV*100,2)

#get tables from the IV statistic

IV$Tables## $Duration

## Duration N Percent WOE IV

## 1 [4,8] 94 0.094 1.28093385 0.1110143

## 2 [9,11] 86 0.086 0.55359530 0.1342125

## 3 [12,14] 187 0.187 0.16066006 0.1388793

## 4 [15,16] 66 0.066 0.55804470 0.1569494

## 5 [18,22] 153 0.153 -0.18342106 0.1622773

## 6 [24,28] 201 0.201 -0.03995831 0.1626008

## 7 [30,33] 43 0.043 -0.11905936 0.1632244

## 8 [36,72] 170 0.170 -0.77668029 0.2778772

##

## $Amount

## Amount N Percent WOE IV

## 1 [250,931] 99 0.099 -0.06177736 0.0003824313

## 2 [932,1258] 99 0.099 -0.01438874 0.0004029866

## 3 [1262,1474] 99 0.099 0.23789141 0.0057272229

## 4 [1478,1901] 102 0.102 0.38665578 0.0197204795

## 5 [1905,2315] 100 0.100 0.04808619 0.0199494614

## 6 [2319,2835] 100 0.100 0.25131443 0.0259331383

## 7 [2848,3578] 100 0.100 0.36101335 0.0379669164

## 8 [3590,4712] 100 0.100 0.04808619 0.0381958983

## 9 [4716,7166] 100 0.100 -0.31508105 0.0486985998

## 10 [7174,18424] 101 0.101 -0.74820696 0.1117617577

##

## $installment_rate

## installment_rate N Percent WOE IV

## 1 [1,1] 136 0.136 0.25131443 0.008137801

## 2 [2,2] 231 0.231 0.15546647 0.013542111

## 3 [3,3] 157 0.157 0.06453852 0.014187496

## 4 [4,4] 476 0.476 -0.15730029 0.026322090

##

## $present_resid

## present_resid N Percent WOE IV

## 1 [1,1] 130 0.130 0.112477983 0.001606828

## 2 [2,2] 308 0.308 -0.070150705 0.003143463

## 3 [3,3] 149 0.149 0.054941118 0.003588224

## 4 [4,4] 413 0.413 -0.001152738 0.003588773

##

## $Age

## Age N Percent WOE IV

## 1 [19,22] 57 0.057 -0.38299225 0.008936486

## 2 [23,25] 133 0.133 -0.59025276 0.059810652

## 3 [26,27] 101 0.101 0.16093037 0.062339558

## 4 [28,29] 80 0.080 -0.33647224 0.071953050

## 5 [30,32] 112 0.112 0.11316409 0.073354130

## 6 [33,35] 105 0.105 0.06899287 0.073846936

## 7 [36,38] 92 0.092 0.56639547 0.099739300

## 8 [39,44] 119 0.119 0.06899287 0.100297814

## 9 [45,51] 96 0.096 0.48770321 0.120734901

## 10 [52,75] 105 0.105 0.06899287 0.121227707

##

## $n_credits

## n_credits N Percent WOE IV

## 1 [1,1] 633 0.633 -0.0748775 0.003601251

## 2 [2,4] 367 0.367 0.1347806 0.010083557

##

## $n_people

## n_people N Percent WOE IV

## 1 [1,1] 845 0.845 -0.00281611 6.705024e-06

## 2 [2,2] 155 0.155 0.01540863 4.339223e-05

##

## $Telephone

## Telephone N Percent WOE IV

## 1 none 404 0.404 0.09863759 0.003851563

## 2 yes 596 0.596 -0.06469132 0.006377605

##

## $ForeignWorker

## ForeignWorker N Percent WOE IV

## 1 no 37 0.037 1.26291534 0.04269857

## 2 yes 963 0.963 -0.03486727 0.04387741

##

## $check_acct

## check_acct N Percent WOE IV

## 1 0.to.200 269 0.269 -0.4013918 0.04644676

## 2 gt.200 63 0.063 0.4054651 0.05590762

## 3 lt.0 274 0.274 -0.8180987 0.26160100

## 4 none 394 0.394 1.1762632 0.66601150

##

## $credit_hist

## credit_hist N Percent WOE IV

## 1 Critical 293 0.293 0.73374058 0.1324227

## 2 Delay 88 0.088 -0.08515781 0.1330715

## 3 NoCredit.AllPaid 40 0.040 -1.35812348 0.2171458

## 4 PaidDuly 530 0.530 -0.08831862 0.2213515

## 5 ThisBank.AllPaid 49 0.049 -1.13497993 0.2932335

##

## $Purpose

## Purpose N Percent WOE IV

## 1 Business 97 0.097 -0.23052366 0.005378885

## 2 DomesticAppliance 12 0.012 -0.15415068 0.005672506

## 3 Education 50 0.050 -0.60613580 0.025877032

## 4 Furniture.Equipment 181 0.181 -0.09555652 0.027560647

## 5 NewCar 234 0.234 -0.35920049 0.059717643

## 6 Other 12 0.012 -0.51082562 0.063123147

## 7 Radio.Television 280 0.280 0.41006282 0.106082109

## 8 Repairs 22 0.022 -0.28768207 0.107999990

## 9 Retraining 9 0.009 1.23214368 0.117974486

## 10 UsedCar 103 0.103 0.77383609 0.169195066

##

## $savings_acct

## savings_acct N Percent WOE IV

## 1 100.to.500 103 0.103 -0.1395519 0.002060052

## 2 500.to.1000 63 0.063 0.7060506 0.028621002

## 3 Unknown 183 0.183 0.7042461 0.105417360

## 4 gt.1000 48 0.048 1.0986123 0.149361851

## 5 lt.100 603 0.603 -0.2713578 0.196009557

##

## $present_emp

## present_emp N Percent WOE IV

## 1 1.to.4 339 0.339 -0.03210325 0.000351607

## 2 4.to.7 174 0.174 0.39441527 0.025143424

## 3 Unemployed 62 0.062 -0.31923043 0.031832062

## 4 gt.7 253 0.253 0.23556607 0.045180806

## 5 lt.1 172 0.172 -0.47082029 0.086433631

##

## $status_sex

## status_sex N Percent WOE IV

## 1 Female.NotSingle 310 0.310 -0.2353408 0.01793073

## 2 Male.Divorced.Seperated 50 0.050 -0.4418328 0.02845056

## 3 Male.Married.Widowed 92 0.092 0.1385189 0.03016555

## 4 Male.Single 548 0.548 0.1655476 0.04467068

##

## $other_debt

## other_debt N Percent WOE IV

## 1 CoApplicant 41 0.041 -0.6021754024 0.01634476

## 2 Guarantor 52 0.052 0.5877866649 0.03201907

## 3 None 907 0.907 0.0005250722 0.03201932

##

## $Property

## Property N Percent WOE IV

## 1 CarOther 332 0.332 -0.03419136 0.0003907585

## 2 Insurance 232 0.232 -0.02857337 0.0005812476

## 3 RealEstate 282 0.282 0.46103496 0.0545882000

## 4 Unknown 154 0.154 -0.58608236 0.1126382624

##

## $other_install

## other_install N Percent WOE IV

## 1 Bank 139 0.139 -0.4836299 0.03523589

## 2 None 814 0.814 0.1211786 0.04689212

## 3 Stores 47 0.047 -0.4595323 0.05761454

##

## $Housing

## Housing N Percent WOE IV

## 1 ForFree 108 0.108 -0.4726044 0.02610577

## 2 Own 713 0.713 0.1941560 0.05190078

## 3 Rent 179 0.179 -0.4044452 0.08329343

##

## $Job

## Job N Percent WOE IV

## 1 Management.SelfEmp.HighlyQualified 148 0.148 -0.20441251 0.006424393

## 2 SkilledEmployee 630 0.630 0.02278003 0.006749822

## 3 UnemployedUnskilled 22 0.022 -0.08515781 0.006912028

## 4 UnskilledResident 200 0.200 0.09716375 0.008762766#get summary of the IV statistic

IV$Summary Variable IV

10 check_acct 66.60

11 credit_hist 29.32

1 Duration 27.79

13 savings_acct 19.60

12 Purpose 16.92

5 Age 12.12

17 Property 11.26

2 Amount 11.18

14 present_emp 8.64

19 Housing 8.33

18 other_install 5.76

15 status_sex 4.47

9 ForeignWorker 4.39

16 other_debt 3.20

3 installment_rate 2.63

6 n_credits 1.01

20 Job 0.88

8 Telephone 0.64

4 present_resid 0.36

7 n_people 0.00We observed that following variables do not have prediction power - very very weak predictor (IV< 2%), hence we shall exclude them from modeling:

kable(IV$Summary %>%

filter(IV < 2))| Variable | IV |

|---|---|

| n_credits | 1.01 |

| Job | 0.88 |

| Telephone | 0.64 |

| present_resid | 0.36 |

| n_people | 0.00 |

Second group of variables are very weak predictors (2%<=IV< 10%), hence we may or may not include them while modeling

kable(IV$Summary %>%

filter(IV >= 2 & IV < 10 ))| Variable | IV |

|---|---|

| present_emp | 8.64 |

| Housing | 8.33 |

| other_install | 5.76 |

| status_sex | 4.47 |

| ForeignWorker | 4.39 |

| other_debt | 3.20 |

| installment_rate | 2.63 |

Third group of variables have medium prediction power (10%<=IV< 30%), hence we will include them in modeling as we have less number of variables

kable(IV$Summary %>%

filter(IV >= 10 & IV < 30 ))| Variable | IV |

|---|---|

| credit_hist | 29.32 |

| Duration | 27.79 |

| savings_acct | 19.60 |

| Purpose | 16.92 |

| Age | 12.12 |

| Property | 11.26 |

| Amount | 11.18 |

There is no strong predictor with IV between 30% to 50%

kable(IV$Summary %>%

filter(IV >= 30 & IV < 50 ))| Variable | IV |

|---|---|

check_acct has a very high prediction power (IV > 50%), it could be suspicious and require further investigation.

kable(IV$Summary %>%

filter( IV > 50 ))| Variable | IV |

|---|---|

| check_acct | 66.6 |

3.2.2.

wrapper methods

Wrapper methods consider the selection of a set of features as a search problem, where different combinations are prepared, evaluated and compared to other combinations. A predictive model us used to evaluate a combination of features and assign a score based on model accuracy. The search process may be methodical such as a best-first search, it may stochastic such as a random hill-climbing algorithm, or it may use heuristics, like forward and backward passes to add and remove features. One disadvantage of the wrapper approach is highly computational cost.

An example of a wrapper method is the recursive feature elimination algorithm. Another example is the boruta algorithm.

- rfe (recursive feature elimination, caret package):

Recursive feature elimination via the caret package, is a wrapper method which is explained by the algorithm below. For further details follow Recursive Feature Elimination via caret

recursive feature elimitation algorithm

We created a function called “recur.feature.selection” which internally defined : x, the data frame of predictor variables ; y, the vector (numeric or factor) of outcome (y) ; sizes, an integer vector for the specific subset sizes that should be tested, and a list of options that can be used to specify the model and the methods for prediction(using rfecontrol). Then we applied the algorithm using the rfe function.

recur.feature.selection <- function(num.iters=20, features.var, outcome.var){

set.seed(10)

sizes.var <- 1:20

control <- rfeControl(functions = rfFuncs, #pre-defined sets of functions,randomForest(rffuncs)

method = "cv",

number = num.iters,

returnResamp = "all",

verbose = FALSE

)

results.rfe <- rfe(x = features.var,

y = outcome.var,

sizes = sizes.var,

rfeControl = control)

return(results.rfe)

}#before to apply the rfe algorithm, i clear unusued memory

invisible(gc())#To resolve the summary.connection(connection) : invalid connection, i install the doSnow-parallel-DeLuxe R script performed by Tobias Kind (2015); see more here : https://github.com/tobigithub/R-parallel/wiki/R-parallel-Errors

source("Install-doSNOW-parallel-DeLuxe.R") ## 2 cores detected.4 threads detected.[1] "Cluster stopped."#i remove in the features.var (both Risk (21) and risk_bin (22)) / i keep only Risk (factor) as outcome var.

rfe.results <- recur.feature.selection(features.var = german.copy[,c(-21,-22)],

outcome.var = german.copy[,21])

# view results

rfe.results##

## Recursive feature selection

##

## Outer resampling method: Cross-Validated (20 fold)

##

## Resampling performance over subset size:

##

## Variables Accuracy Kappa AccuracySD KappaSD Selected

## 1 0.687 0.01115 0.03197 0.03603

## 2 0.727 0.26590 0.04169 0.13144

## 3 0.737 0.28146 0.04269 0.11063

## 4 0.729 0.30158 0.05524 0.15665

## 5 0.746 0.34942 0.05236 0.14191

## 6 0.772 0.40465 0.05085 0.14150

## 7 0.770 0.39423 0.04026 0.12075

## 8 0.766 0.38039 0.05586 0.16309

## 9 0.770 0.39346 0.05712 0.16442

## 10 0.776 0.40547 0.05295 0.15160

## 11 0.778 0.40704 0.05386 0.15556 *

## 12 0.773 0.39260 0.04953 0.14876

## 13 0.772 0.38039 0.06031 0.17823

## 14 0.772 0.38123 0.05288 0.15897

## 15 0.760 0.34651 0.05351 0.15822

## 16 0.772 0.37718 0.05167 0.15584

## 17 0.769 0.37059 0.05004 0.15479

## 18 0.761 0.35214 0.04564 0.14335

## 19 0.773 0.37491 0.04366 0.13925

## 20 0.771 0.37221 0.04745 0.14784

##

## The top 5 variables (out of 11):

## check_acct, Duration, credit_hist, Amount, savings_acctThe recursive feature selection output (Outer resampling method: Cross-Validated (20 fold) ) shows that the top 5 variables are: check_acct, Duration, credit_hist, Amount and savings_acct. These findings are somehow in line with those of WOE and IV statistics. ( check_acct has the highest power prediction, and features such as Amount,Duration, savings_acct have medium prediction power)

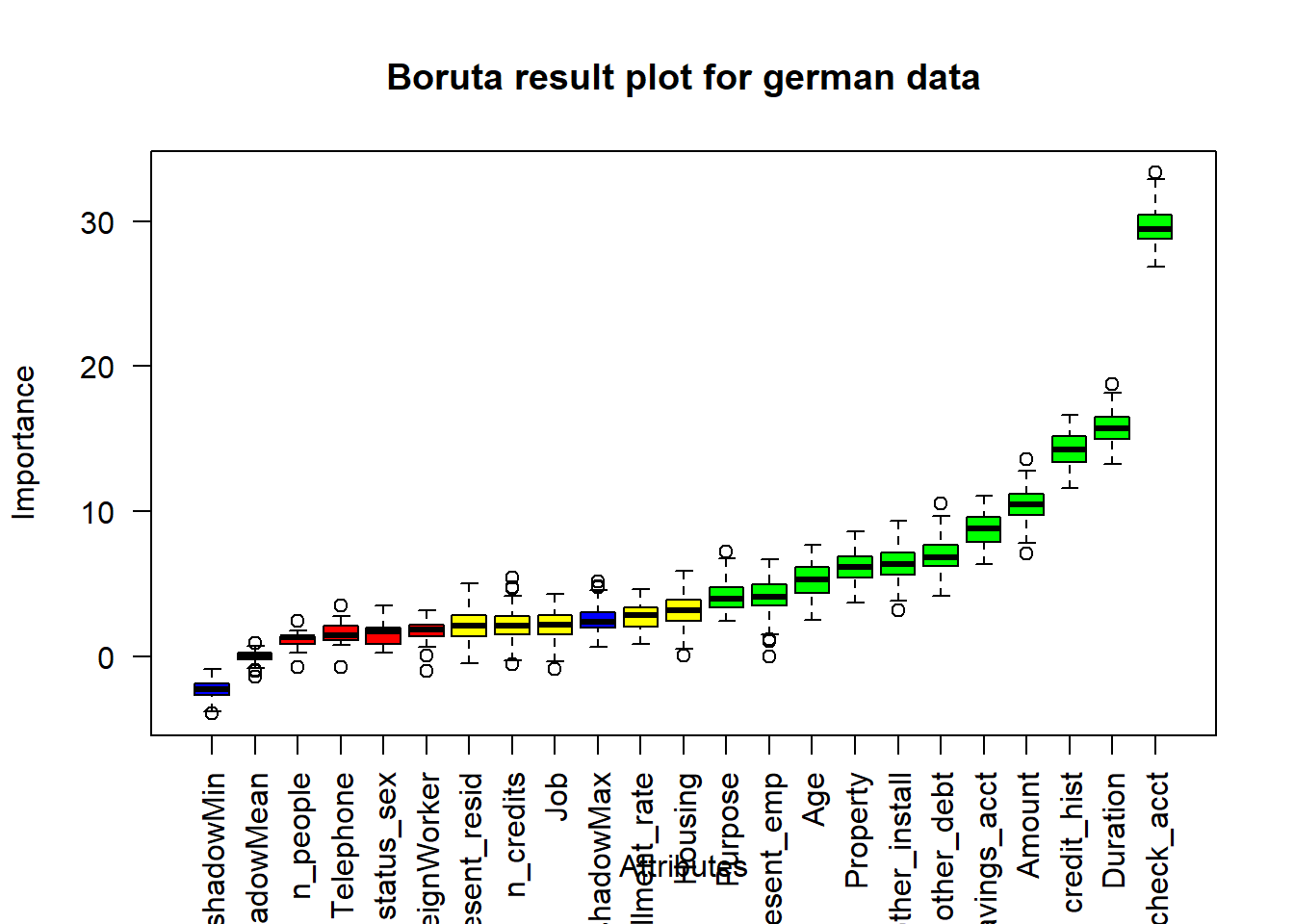

- Boruta algorithm :

Boruta algorithm is a wrapper built around the random forest classification algorithm implemented in the R package randomForest (Liaw and Wiener, 2002). It tries to capture all the important, interesting features you might have in your dataset with respect to an outcome variable. As explained in Kursa & Rudnicki (2010), the steps of the boruta algorithm can be resume as follow:

- First, it duplicates the dataset, and shuffle the values in each column. These values are called shadow features. Then, it trains a classifier, such as a Random Forest Classifier, on the dataset. By doing this, you ensure that you can an idea of the importance -via the Mean Decrease Accuracy or Mean Decrease Impurity- for each of the features of your data set. The higher the score, the better or more important.

- Then, the algorithm checks for each of your real features if they have higher importance. That is, whether the feature has a higher Z-score than the maximum Z-score of its shadow features than the best of the shadow features. If they do, it records this in a vector. These are called a hits. Next,it will continue with another iteration. After a predefined set of iterations, you will end up with a table of these hits.

- At every iteration, the algorithm compares the Z-scores of the shuffled copies of the features and the original features to see if the latter performed better than the former. If it does, the algorithm will mark the feature as important. In essence, the algorithm is trying to validate the importance of the feature by comparing with random shuffled copies, which increases the robustness. This is done by simply comparing the number of times a feature did better with the shadow features using a binomial distribution.

- If a feature hasn’t been recorded as a hit in say 15 iterations, you reject it and also remove it from the original matrix. After a set number of iterations -or if all the features have been either confirmed or rejected- you stop.

set.seed(123)

Boruta.german <- Boruta(Risk ~ . , data = german.copy[,-22], doTrace = 0, ntree = 500)

Boruta.germanBoruta performed 99 iterations in 1.026238 mins.

11 attributes confirmed important: Age, Amount, check_acct,

credit_hist, Duration and 6 more;

4 attributes confirmed unimportant: ForeignWorker, n_people,

status_sex, Telephone;

5 tentative attributes left: Housing, installment_rate, Job,

n_credits, present_resid;#Functions which convert the Boruta selection into a formula which returns only Confirmed attributes.

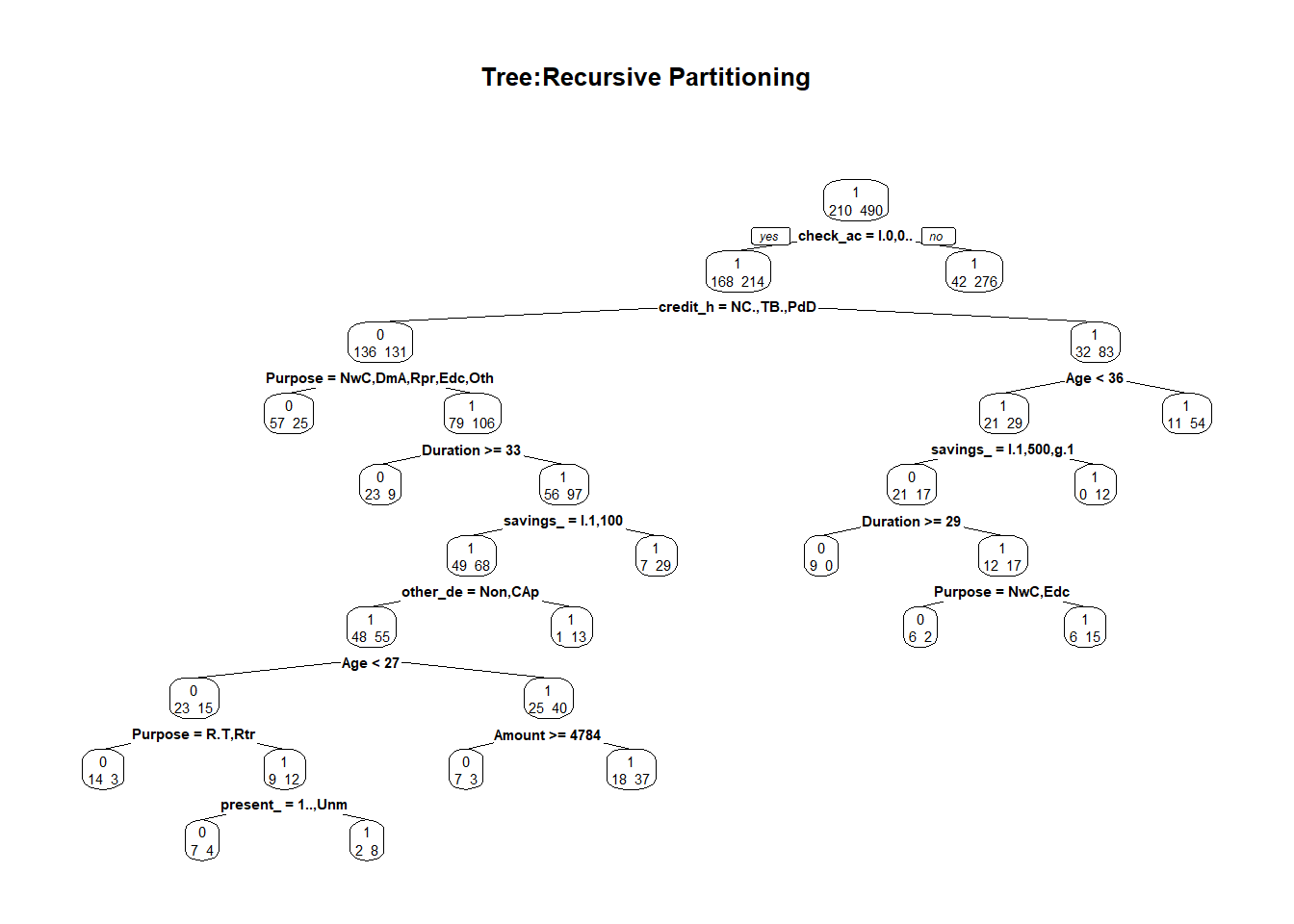

getConfirmedFormula(Boruta.german)Risk ~ Duration + Amount + Age + check_acct + credit_hist + Purpose +

savings_acct + present_emp + other_debt + Property + other_install

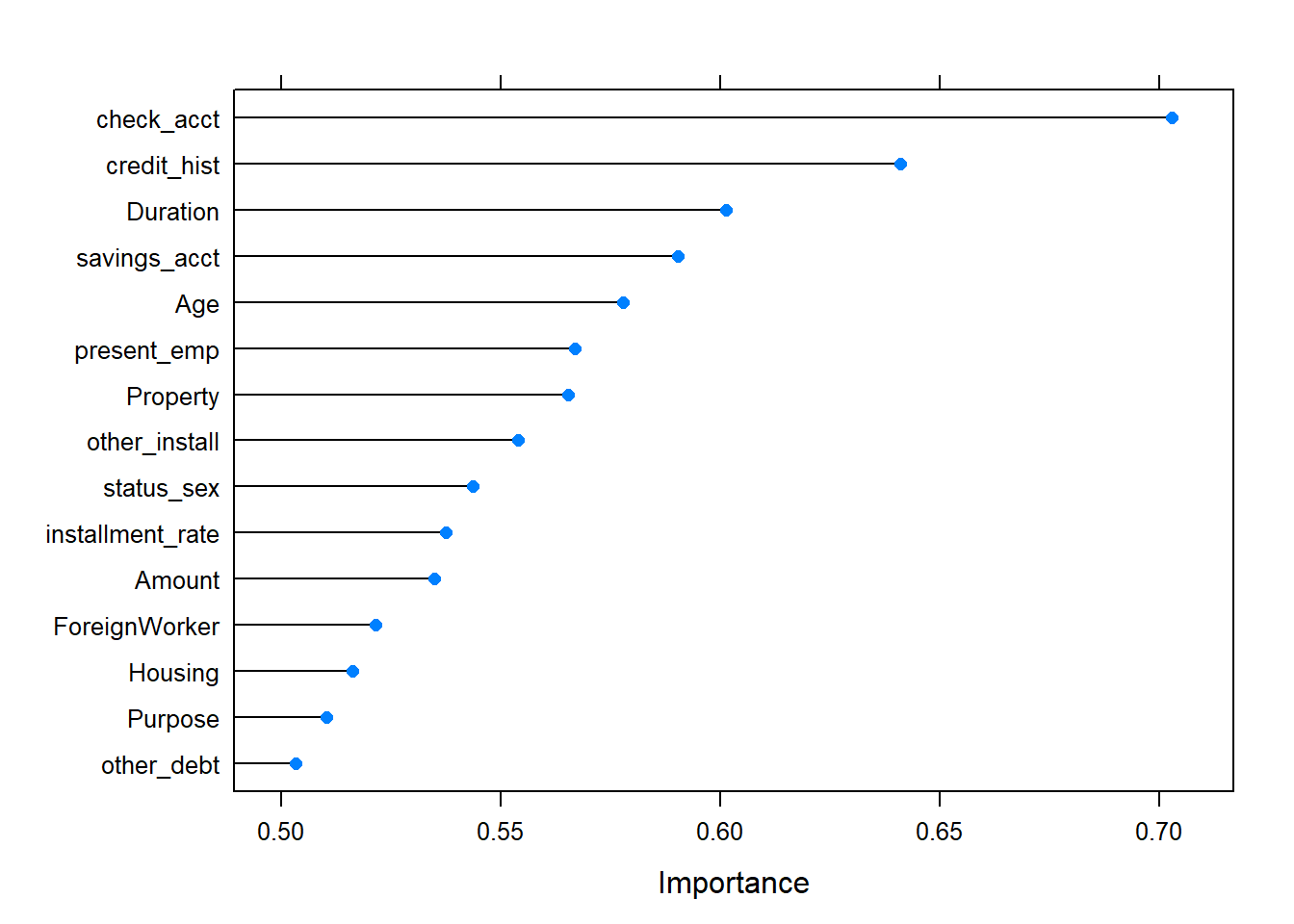

<environment: 0x00000000234f9f30>Boruta function uses y the response vector as factor . Then in the formula, we should consider Risk and not risk_bin. We observed that Boruta performed 99 iterations in 2.055356 mins, and the confirmed important attributes are 11 : Duration , Amount , Age , check_acct , credit_hist , Purpose , savings_acct , present_emp , other_debt , Property and other_install (results that go in accordance with WOE and IV statistics, from weak to highest predictors) . See the plot below, where Blue boxplots correspond to minimal, average and maximum Z score of a shadow attribute. Red and green boxplots represent Z scores of respectively rejected and confirmed attributes. Yellow boxplots represent Z scores of 5 tentative attributes left.

#plot boruta object

plot(Boruta.german,las=2, main="Boruta result plot for german data")

3.2.3.

Embedded methods

A third feature selection method, embedded methods , is detailed in Aggarwal (2014). This class of methods embedding feature selection with classifier construction, have the advantages of wrapper models — they include the interaction with the classification model and filter models—and they are far less computationally intensive than wrapper methods. There are of 3 types : The first are pruning techniques that first utilize all features to train a model and then attempt to eliminate some features by setting the corresponding coefficients to 0 , while maintaining model performance such as recursive feature elimination using a support vector machine . The second are models with a built-in mechanism for feature selection such as C4.5 algorithm. The third are regularization models with objective functions that minimize fitting errors and in the meantime force the coefficients to be small or to be exactly zero ( Elastic Net or Ridge Regression)

However in our study, we are not going to developp these models as features selectors . Here, we just give an overview of this third feature selection approach.

At the end, Based on the Weight of Evidence and Information Value statistics results, we will keep attributes which \(IV > 2\). More over, the wrapper methods , recursive feature elimination and Boruta algorithm confirm this choice since they helped to investigate better on the suspicious character of the highest power predictor, check_acct. The important attributes validated by these algorithms corroborate with our choice.

#attributes to keep (IV>2)

keep <- IV$Summary %>%

filter( IV > 2)

#german.copy data with attributes(IV>2) and response variable

german.copy2 <- german.copy[,c(keep$Variable,"Risk")]

#for convenience, we change the levels Good = 1, Bad = 0

german.copy2$Risk <- as.factor(ifelse(german.copy2$Risk == "Bad", '0', '1'))

#get a glimpse of german copies , see the differences./ german.copy2 is our subsetted data after pre-processing

glimpse(german.copy2)Observations: 1,000

Variables: 16

$ check_acct <fct> lt.0, 0.to.200, none, lt.0, lt.0, none, none,...

$ credit_hist <fct> Critical, PaidDuly, Critical, PaidDuly, Delay...

$ Duration <dbl> 6, 48, 12, 42, 24, 36, 24, 36, 12, 30, 12, 48...

$ savings_acct <fct> Unknown, lt.100, lt.100, lt.100, lt.100, Unkn...

$ Purpose <fct> Radio.Television, Radio.Television, Education...

$ Age <dbl> 67, 22, 49, 45, 53, 35, 53, 35, 61, 28, 25, 2...

$ Property <fct> RealEstate, RealEstate, RealEstate, Insurance...

$ Amount <dbl> 1169, 5951, 2096, 7882, 4870, 9055, 2835, 694...

$ present_emp <fct> gt.7, 1.to.4, 4.to.7, 4.to.7, 1.to.4, 1.to.4,...

$ Housing <fct> Own, Own, Own, ForFree, ForFree, ForFree, Own...

$ other_install <fct> None, None, None, None, None, None, None, Non...

$ status_sex <fct> Male.Single, Female.NotSingle, Male.Single, M...

$ ForeignWorker <fct> yes, yes, yes, yes, yes, yes, yes, yes, yes, ...

$ other_debt <fct> None, None, None, Guarantor, None, None, None...

$ installment_rate <dbl> 4, 2, 2, 2, 3, 2, 3, 2, 2, 4, 3, 3, 1, 4, 2, ...

$ Risk <fct> 1, 0, 1, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, ...3.3. Data partitioning

Stratified random sampling is a method of sampling that involves the division of a population into smaller groups known as strata. This is useful for imbalanced datasets, and can be used to give more weight to a minority class. In stratified random sampling, the strata are formed based on members’ shared attributes or characteristics. See more here or read Garcia et al(2012) that is already mentioned at the introduction of Data pre-processing paragraph.

In our case we will use Good/Bad as strata and partition data into 70%-30% as train and test sets. The caret function createDataPartition() can be used to create balanced splits of the data

index <- createDataPartition(y = german.copy2$Risk, p = 0.7, list = F)

# i create a function to calculate percent distribution for factors

pct <- function(x){

tbl <- table(x)

tbl_pct <- cbind(tbl,round(prop.table(tbl)*100,2))

colnames(tbl_pct) <- c('Count','Percentage')

kable(tbl_pct)

}

#training set and test set

train <- german.copy2[index,]

pct(train$Risk)| Count | Percentage | |

|---|---|---|

| 0 | 210 | 30 |

| 1 | 490 | 70 |

test <- german.copy2[-index,]

pct(test$Risk)| Count | Percentage | |

|---|---|---|

| 0 | 90 | 30 |

| 1 | 210 | 70 |

The data preprocessing phase is usually not definitive because it requires a lot of attention and subsequent various explorations on the variables. It must be aimed at obtaining better predictive results and in this sense, the further phases of model evaluations can help us to understand which particular preprocessing approaches are actually indispensable or useful for a specific model purpose.

IV. Methods and Analysis

In machine learning, classification is considered an instance of the supervised learning methods,i.e., inferring a function from labeled training data. The training data consist of a set of training examples, where each example is a pair consisting of an input object (typically a vector of features) \(x =(x_1,x_2, ...,x_d)\) and a desired output value (typically a class label) \(y ∈ \{ C_1,C_2, ...,C_K \}\) . Given such a set of training data, the task of a classification algorithm is to analyze the training data and produce an inferred function, which can be used to classify new (so far unseen) examples by assigning a correct class label to each of them. An example would be assigning an applicant credit scoring into “good†or “bad†classes(then consider the outcome Y as a boolean variable, as in our study). A general process of data classification usually consists of two phases— the training phase and the prediction phase.

There are many classification methods in the literature. These methods can be categorized broadly into probabilistic classification algorithms or linear classifiers (Naive bayes classifiers, logistic regression, Hidden markov models, etc ), support vector machines, decision trees, and Neural networks. (Aggarwal, 2014).

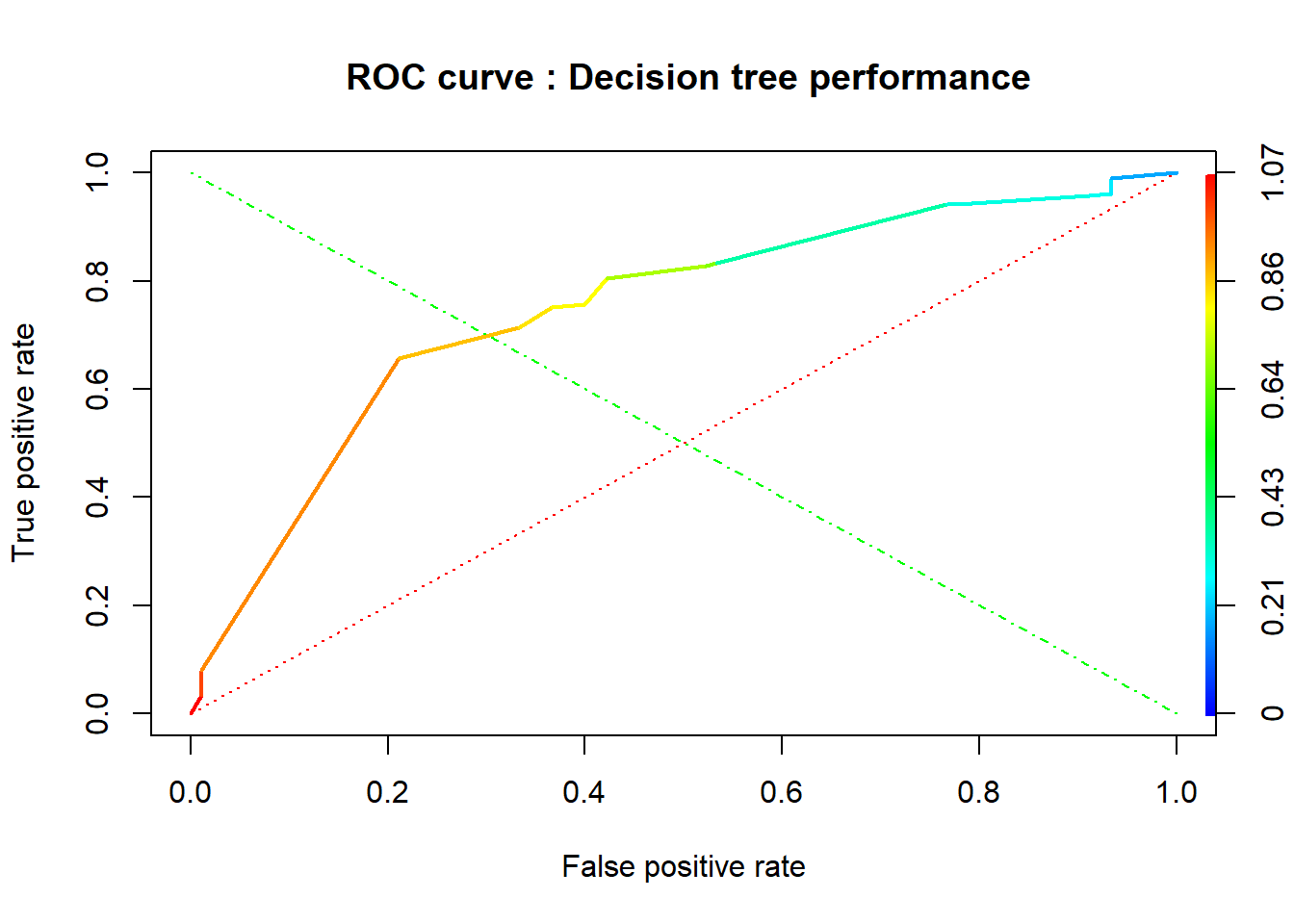

In this section, we are going to explain the methodology over differents Machine Learning algorithms we used taking our principal references from Aggarwal(2014), and to present the metrics for the model performance evaluation.

4.1. Evaluated Algorithms

4.1.1.

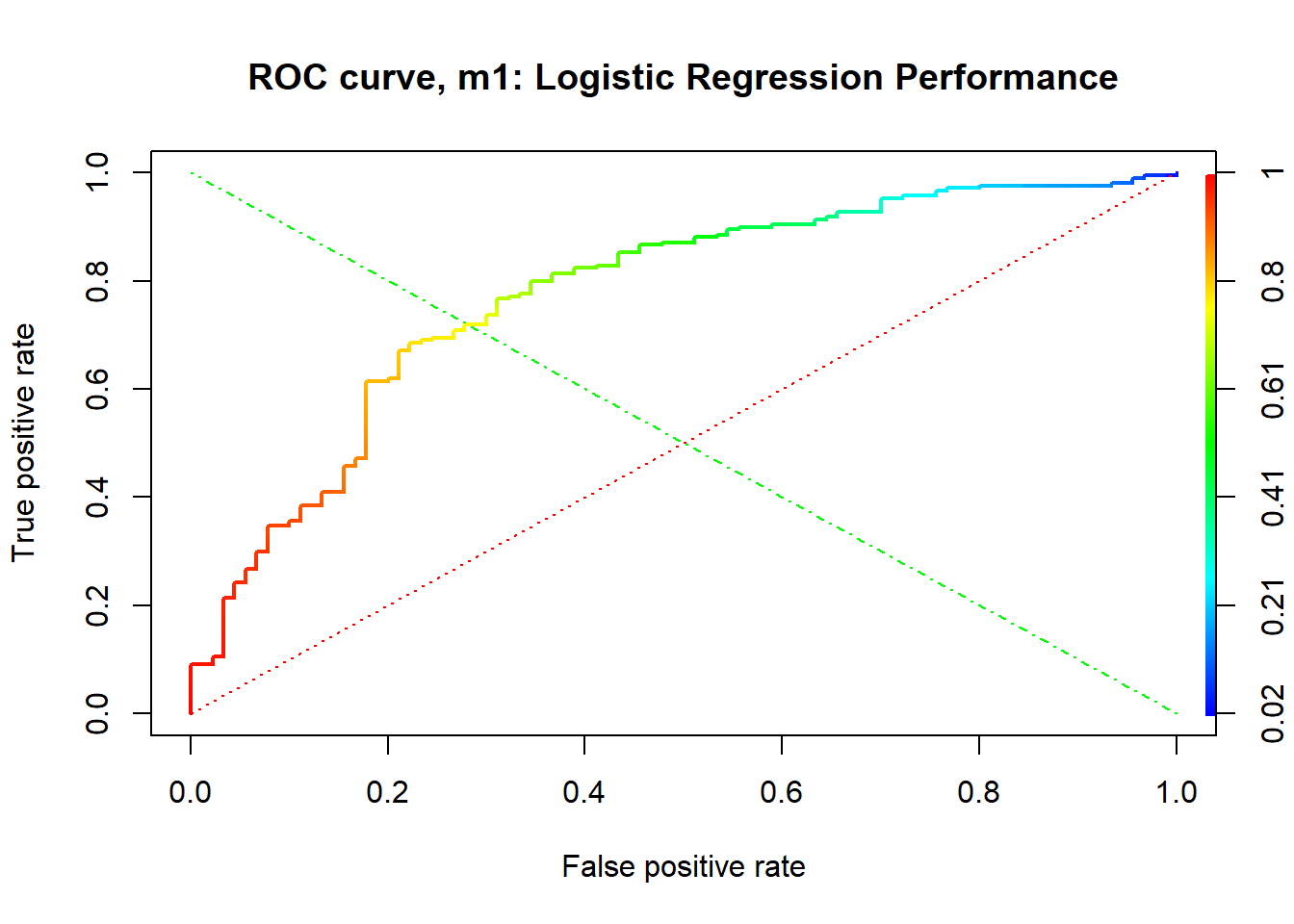

Logistic regression

Logistic regression is an approach for predicting the outcome of a categorialdependent variable based on one or more observed features. The probabilities describing the possible outcomes are modeled as a function of the observed variables using a logistic function.

The goal of logistic regression is to directly estimate the distribution P(Y|X) from the training data. Formally, the logistic regression model is defined as \[p(Y = 1|X) = g(θ^TX) = \frac{1}{\ 1 + e^−θ^T X} \tag{1} ,\] where \(g(z) = \frac{1}{\ 1 + e−z}\) is called the logistic function or the sigmoid function, and \(θ^TX = θ_0 + \sum_{i=1}^{d} θiXi\). As the sum of the probabilities must equal 1, p(Y = 0|X) can be estimated using the following equation \[p(Y = 0|X) = 1−g(θ^TX) = \frac{e−θ^TX}{\ 1 + e−θ^TX}. \tag{2}\]

Because logistic regression predicts probabilities, rather than just classes, we can fit it using likelihood. For each training data point, we have a vector of features, \(X =(X_0,X_1,...,X_d) (X_0 = 1)\)*, and an observed class, \(Y = y_k\). The probability of that class was either \(g(θ^TX)\) if \(yk = 1\), or \(1−g(θ^TX)\) if \(y_k = 0\) . Note that we can combine both Equation (1) and Equation (2) as a more compact form \[ \begin{aligned} p(Y = y_k|X;θ) &= (p(Y = 1|X)) ^{y_k} (p(Y = 0|X))^{1−y_k} \\ &= g(θ^TX)^{y_k} (1−g(θTX))^{1−y_k} \end{aligned}\]

Assuming that the N training examples were generated independently, the likelihood of the parameters can be written as

\[ \begin{aligned} L(θ) &= p(\overline{Y} |X;θ) \\ &= \prod_{n = 1}^{N} p(Y^{(n)} = y_k|X^{(n)};θ) ) \\ &= \prod_{n = 1}^{N} \left( p(Y^{(n)} = 1|X^{(n)})\right)^{Y^{(n)}} \left( p(Y^{(n)} = 0|X^{(n)})\right)^{1-Y^{(n)}} \\ &= \prod_{n = 1}^{N} \left( g( θ^T X^{(n)} )\right)^{Y^{(n)}} \left( 1 - g( θ^T X^{(n)} )\right)^{1-Y^{(n)}} \end{aligned} \tag{3}\]

where θ is the vector of parameters to be estimated, \(Y(n)\) denotes the observed value of Y in the nth training example, and \(X(n)\) denotes the observed value of X in the nth training example. To classify any given X, we generally want to assign the value \(y_k\) to Y that maximizes the likelihood. Maximizing the likelihood is equivalent to maximizing the log likelihood.

In our study, using the glm() function of the stats package, we fit our logistic regression model on the training set. Then, in the testing phase, we evaluated our fitted model on the test set with the predict() function of the same package.

4.1.2.

Decision trees

Decision trees create a hierarchical partitioning of the data, which relates the different partitions at the leaf level to the different classes. The hierarchical partitioning at each level is created with the use of a split criterion. The split criterion may either use a condition (or predicate) on a single attribute, or it may contain a condition on multiple attributes. The former is referred to as a univariate split, whereas the latter is referred to as a multivariate split. The overall approach is to try to recursively split the training data so as to maximize the discrimination among the different classes over different nodes. The discrimination among the different classes is maximized, when the level of skew among the different classes in a given node is maximized. A measure such as the gini-index or entropy is used in order to quantify this skew. For example, if \(p_1 . . . p_k\) is the fraction of the records belonging to the \(k\) different classes in a node \(N\), then the gini-index \(G(N)\) of the node \(N\) is defined as follows: \[G(N) = 1− \sum_{i=1}^k =p^2_i \tag{4}\]

The value of \(G(N)\) lies between \(0\) and \(1−1/k\). The smaller the value of G(N), the greater the skew. In the cases where the classes are evenly balanced, the value is \(1−1/k\) . An alternative measure is the entropy \(E(N)\): \[E(N) = - \sum_{i=1}^k pi · log(pi) \tag{5}\]

The value of the entropy lies between \(0\) and \(log(k)\). The value is \(log(k)\), when the records are perfectly balanced among the different classes. This corresponds to the scenario with maximum entropy. The smaller the entropy, the greater the skew in the data. Thus, the gini-index and entropy provide an effective way to evaluate the quality of a node in terms of its level of discrimination between the different classes.

Note that with decision tree, we refer here to classification tree and not regression tree, since the outcome we want to predict is a categorical variable.

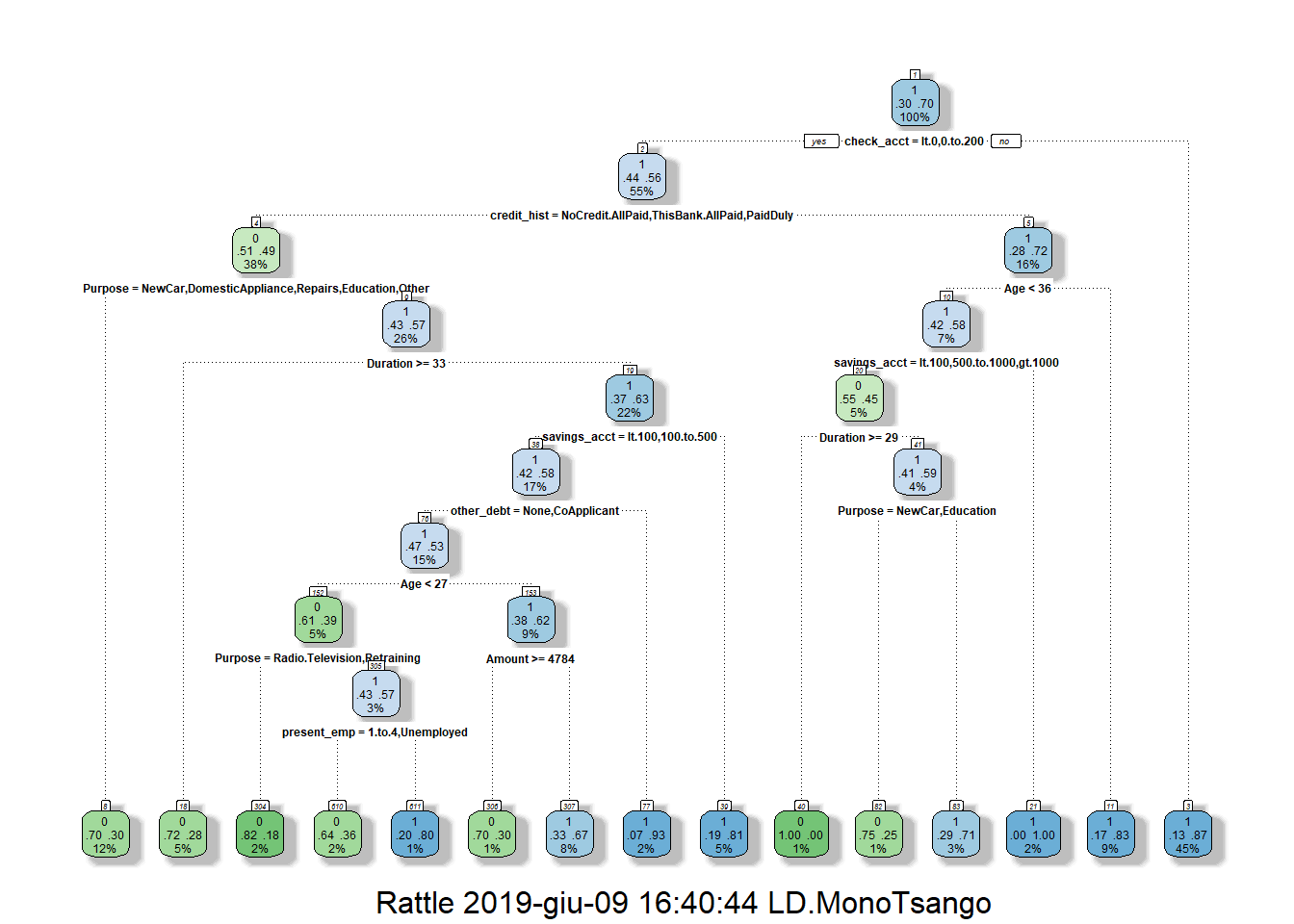

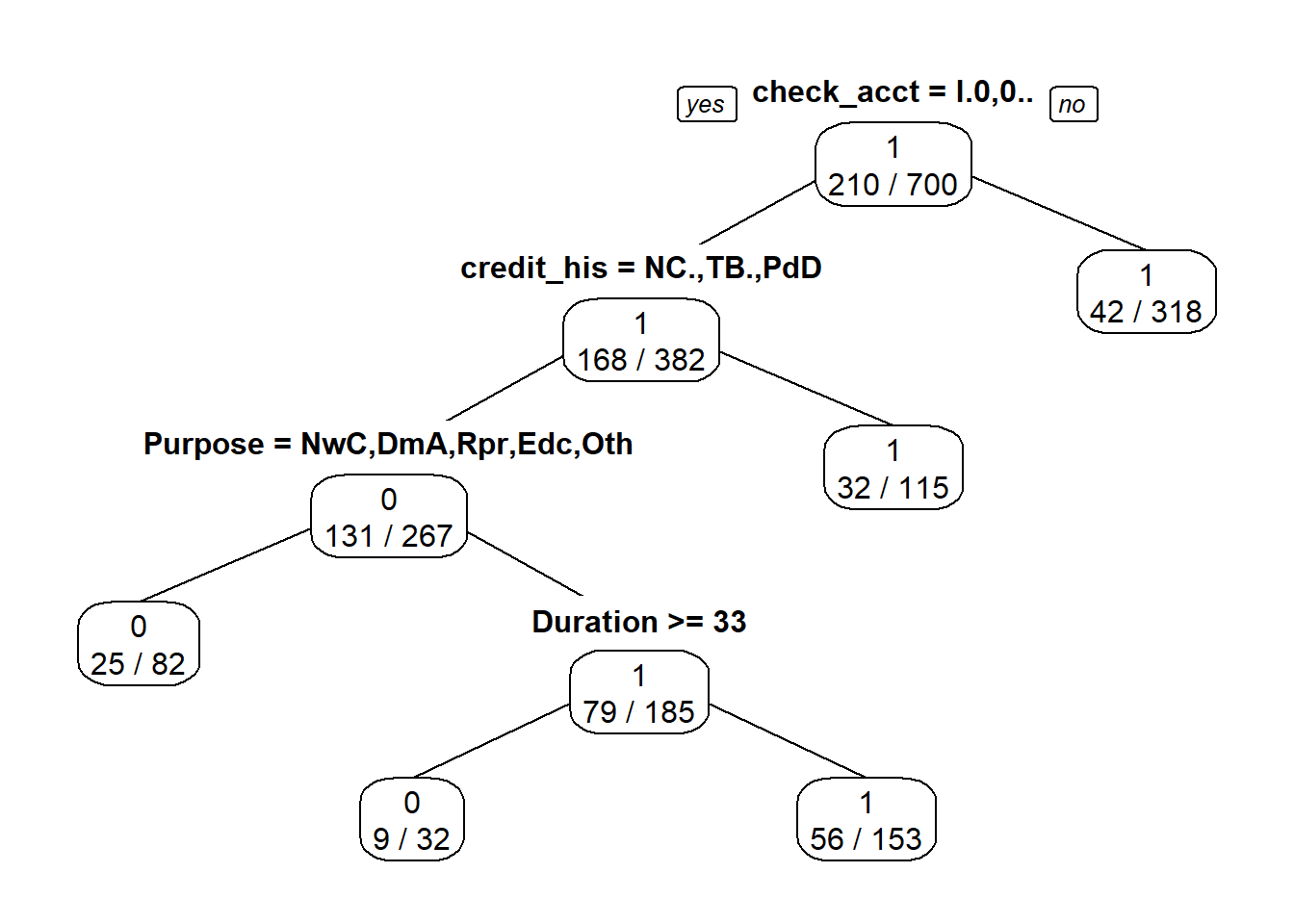

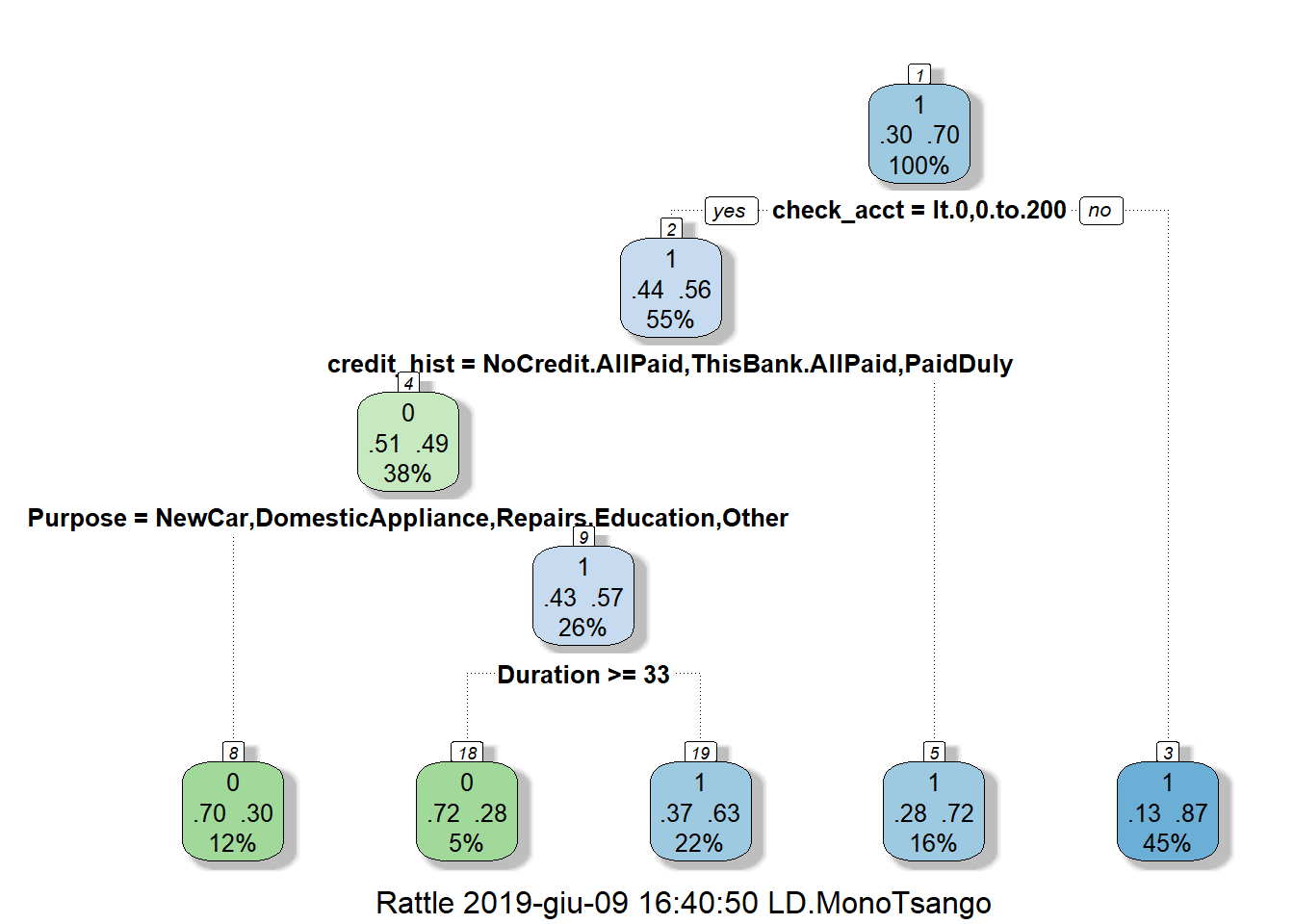

In our study, we built our classification tree model on the training set using the rpart function of the rpart package. By default, rpart() function uses the Gini impurity measure to split the node. The higher the Gini coefficient, the more different instances within the node. We predicted the fitted tree on the test set with the predict() function. Major explanations of Recursive Partitioning Using the rpart Routines are given in Therneau & Atkinson (2018).

4.1.3.

Random forests

Random Forest can be regarded as a variant of Bagging approach. It follows the major steps of Bagging and uses decision tree algorithm to build base classifiers. Besides Bootstrap sampling and majority voting used in Bagging, Random Forest further incorporates random feature space selection into training set construction to promote base classifiers’ diversity.

Random Forest algorithm builds multiple decision trees following the summarized algorithm below and takes majority voting of the prediction results of these trees. To incorporate diversity into the trees, Random Forest approach differs from the traditional decision tree algorithm in the following aspects when building each tree: First, it infers the tree from a Bootstrap sample of the original training set. Second, when selecting the best feature at each node, Random Forest only considers a subset of the feature space. These two modifications of decision tree introduce randomness into the tree learning process, and thus increase the diversity of base classifiers. In practice, the dimensionality of the selected feature subspace \(n'\) controls the randomness. If we set \(n' = n\) where \(n\) is the original dimensionality, then the constructed decision tree is the same as the traditional deterministic one. If we set \(n' = 1\), at each node, only one feature will be randomly selected to split the data, which leads to a completely random decision tree.

Specifically, the following describes the general procedure of Random Forest algorithm:

random forest algorithm

In our study, we used the randomForest() function of the randomForest package which implements Breiman’s random forest algorithm (based on Breiman and Cutler’s original Fortran code), to build our random forest models on training set . Then , we predicted the latter model on test set with the predict() function.

4.1.4.

Support Vector Machines

SVM methods use linear conditions in order to separate out the classes from one another. The idea is to use a linear condition that separates the two classes from each other as well as possible. SVMs were developed by Cortes & Vapnik (1995) for binary classification. Their approach may be roughly sketched with the following tasks : Class separation, Overlapping classes, Nonlinearity and Problem solution which is a quadratic optimization problem.

The principal task, Class separation, lies in looking for the optimal separating hyperplane between the two classes by maximizing the margin between the classes’ closest points (see Figure below)—the points lying on the boundaries are called support vectors, and the middle of the margin is our optimal separating hyperplane. For further details, follow Meyer and Wien(2015) or Karatzoglou et al (2005).

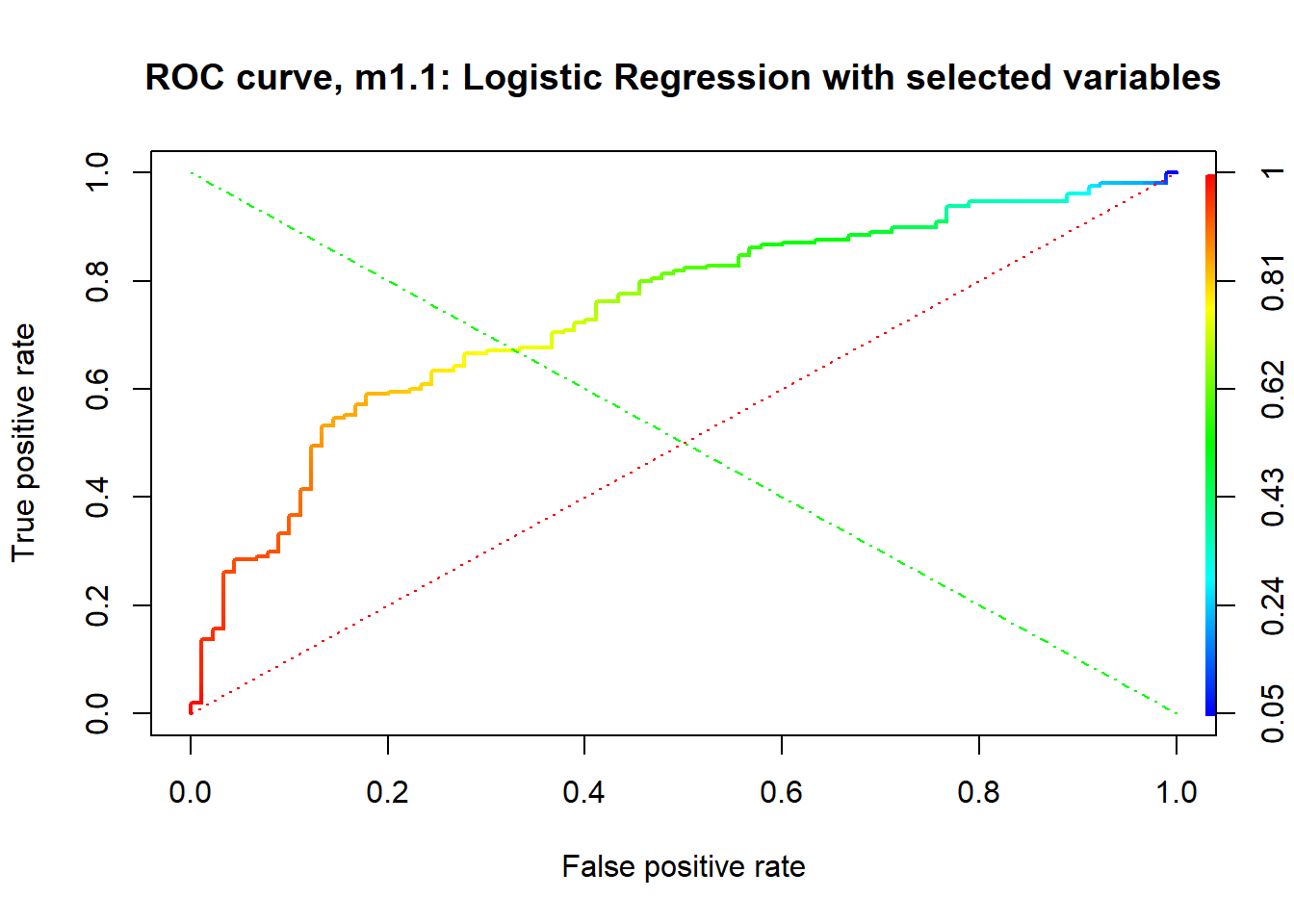

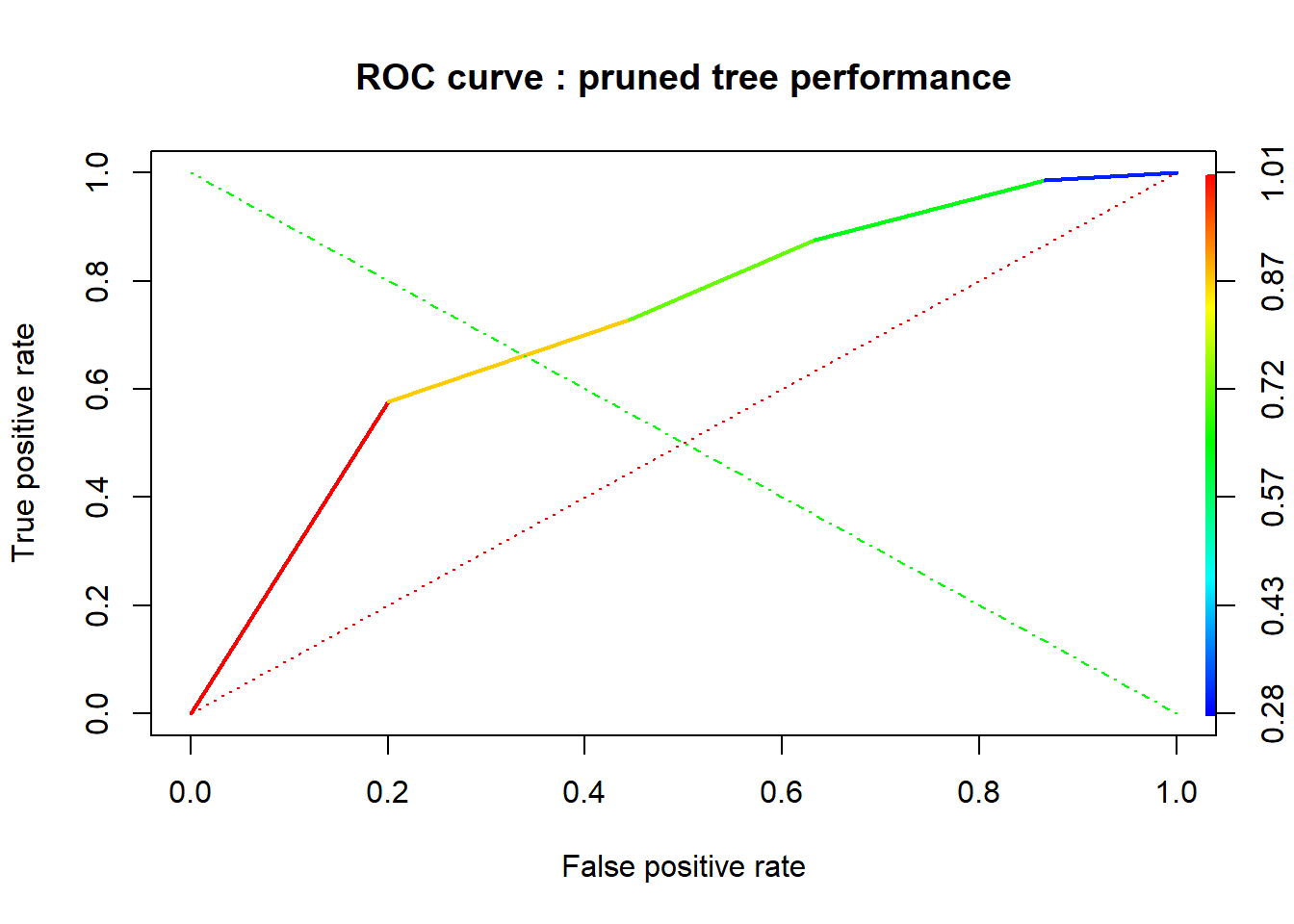

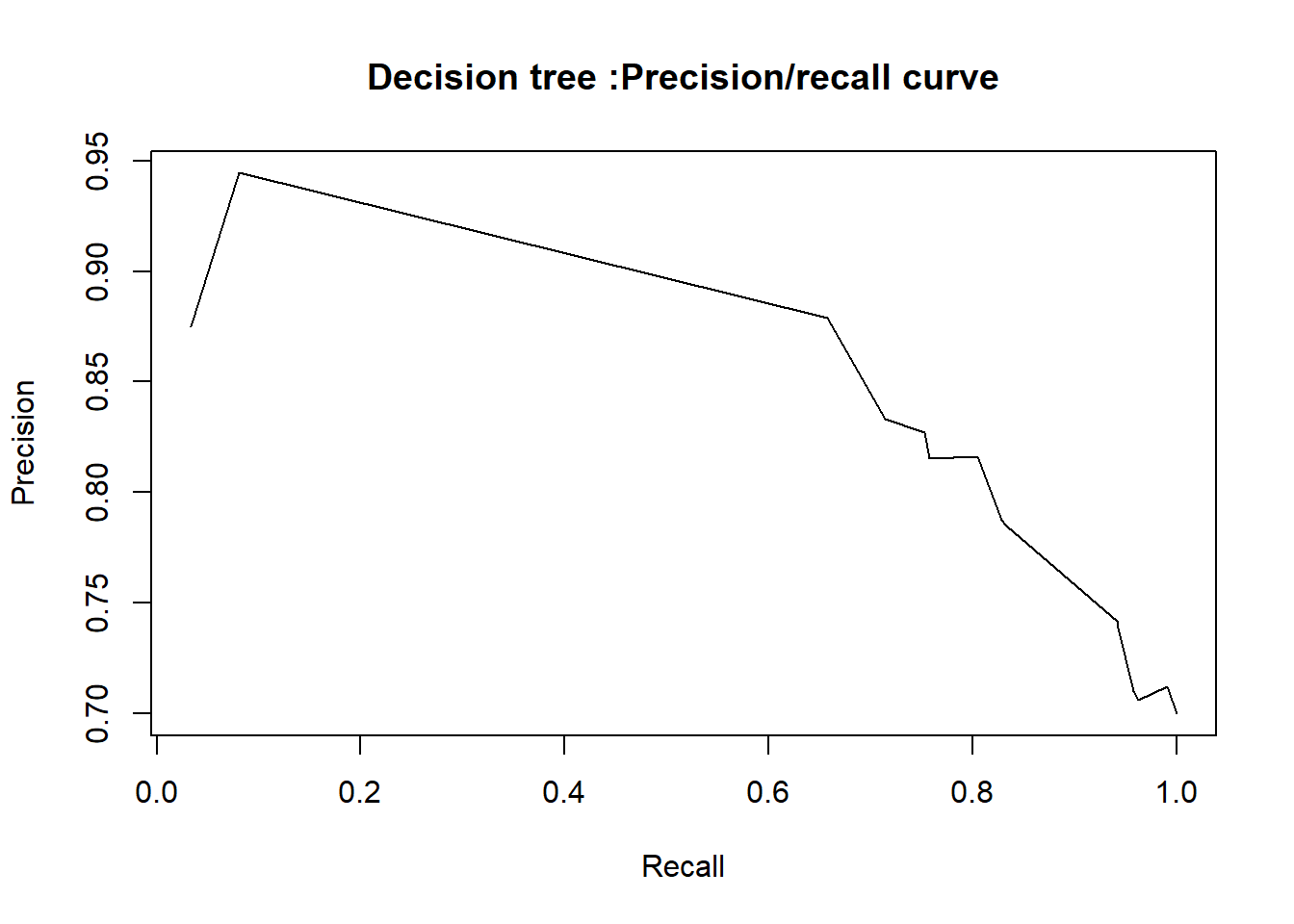

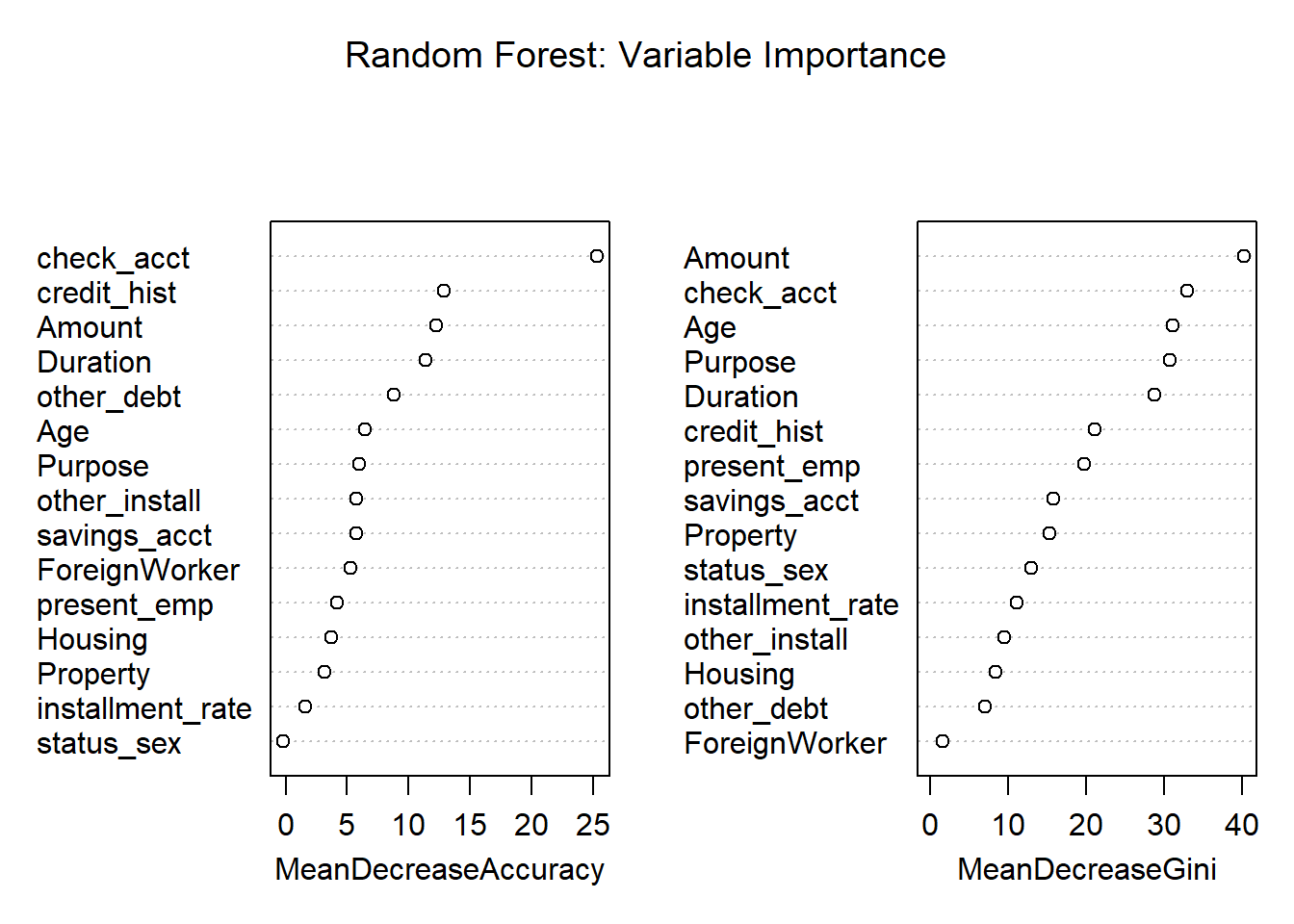

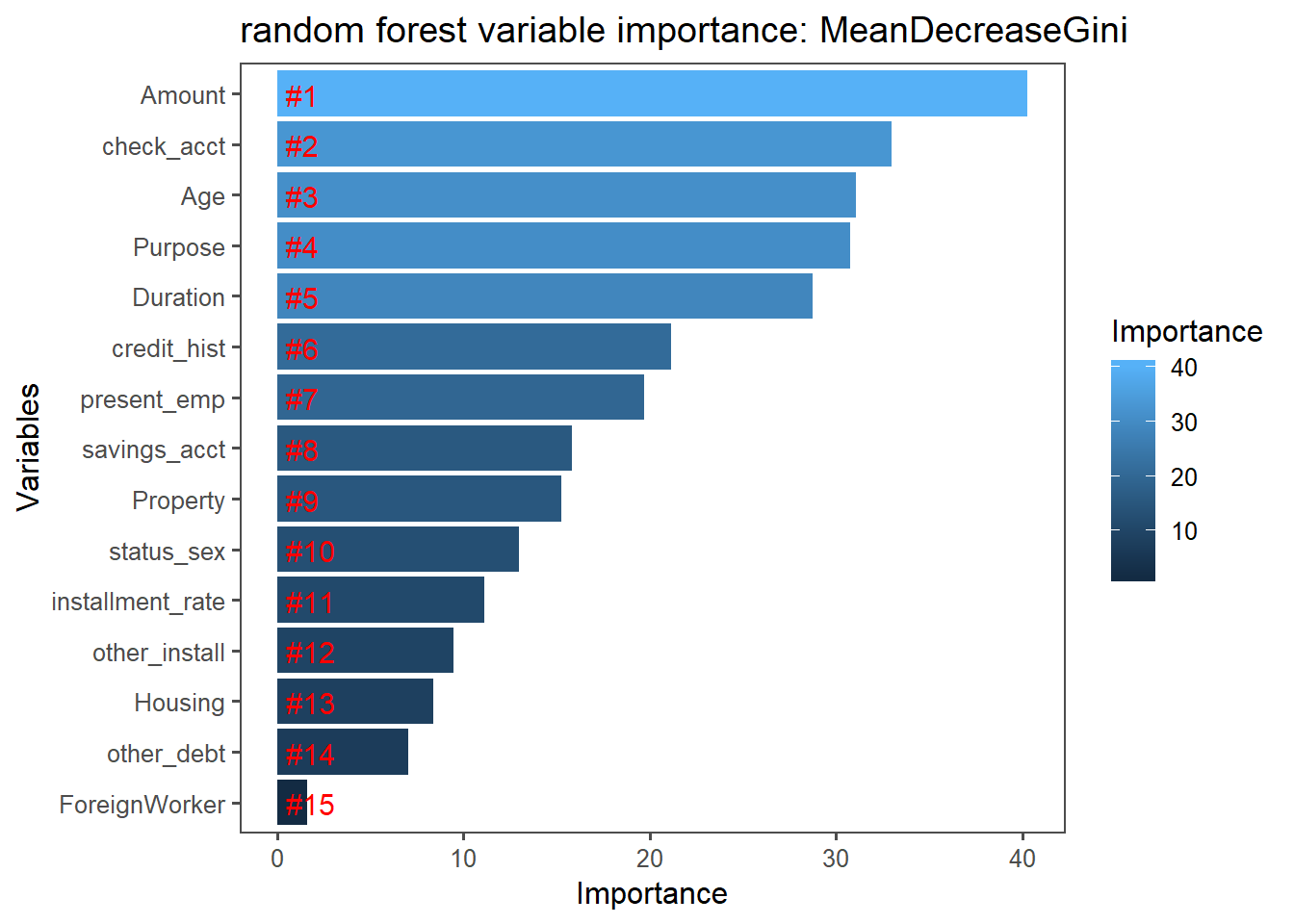

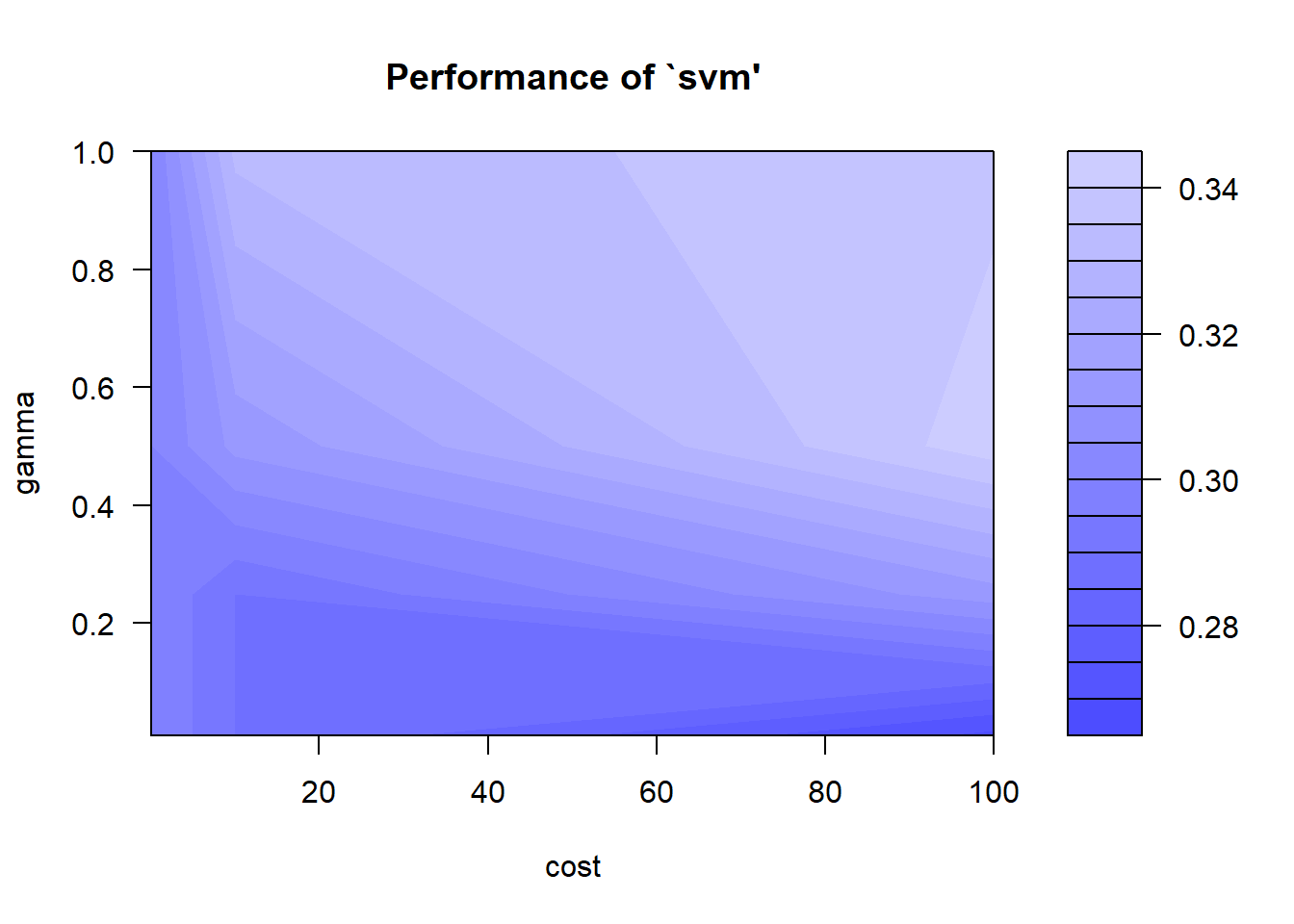

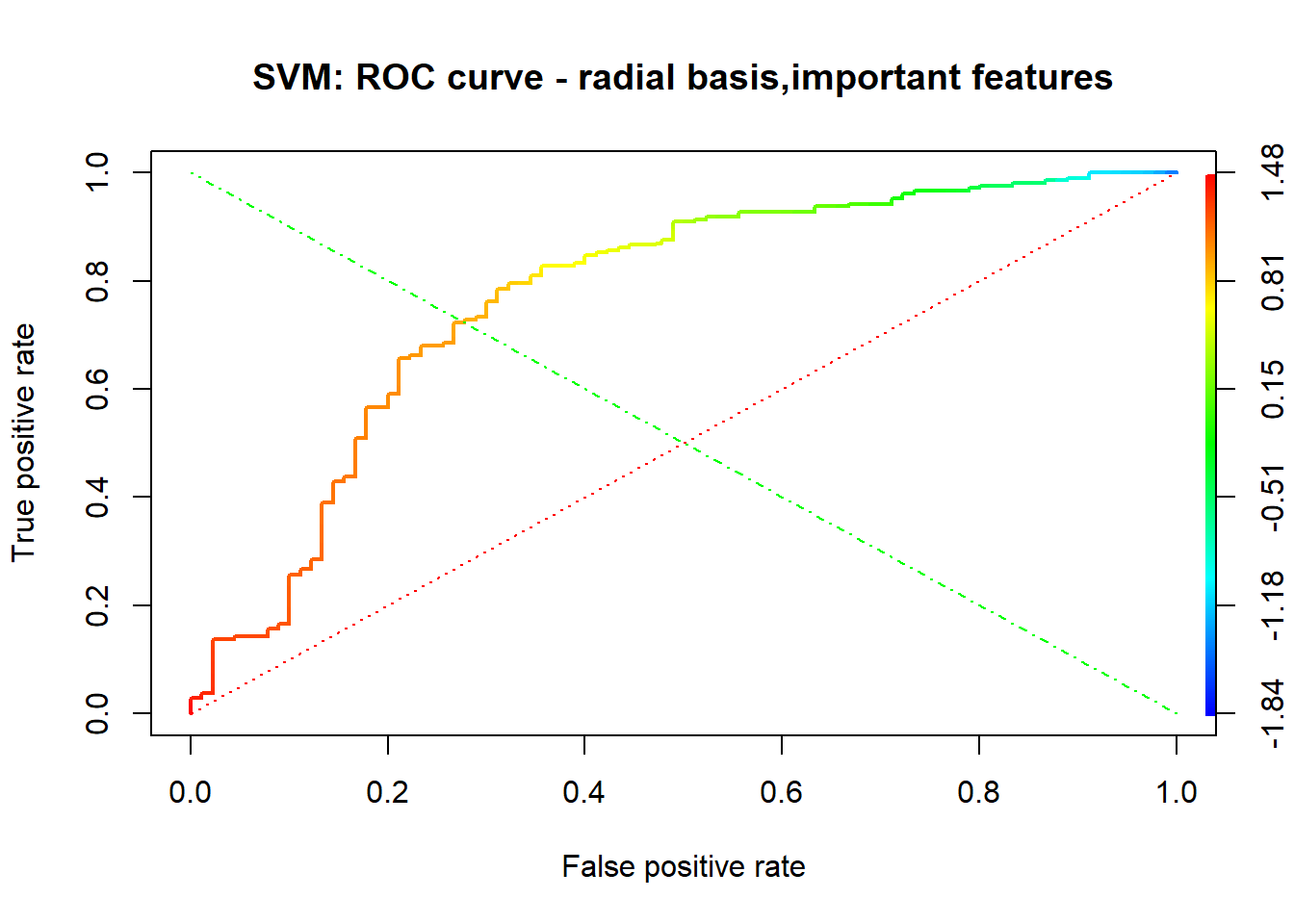

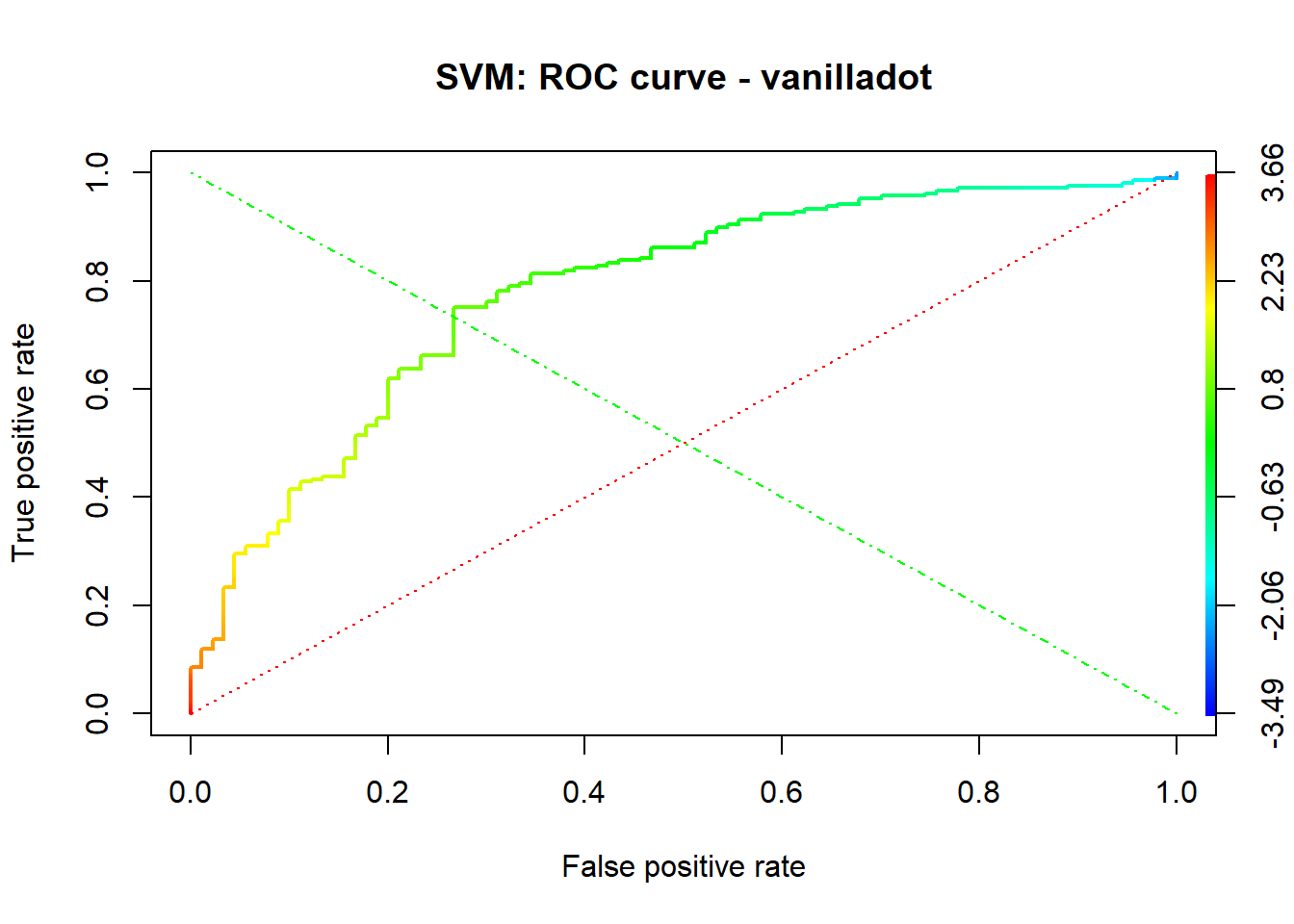

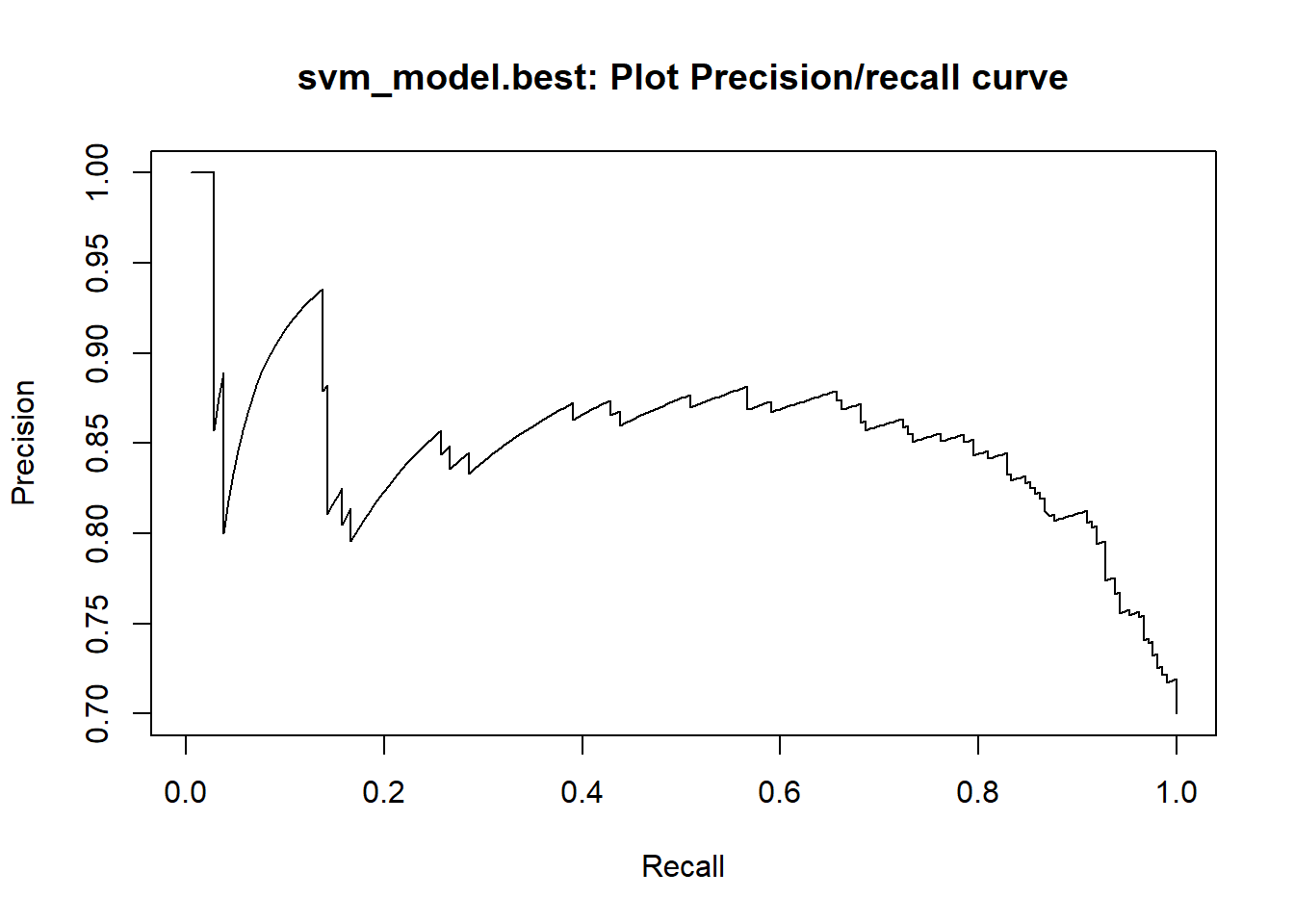

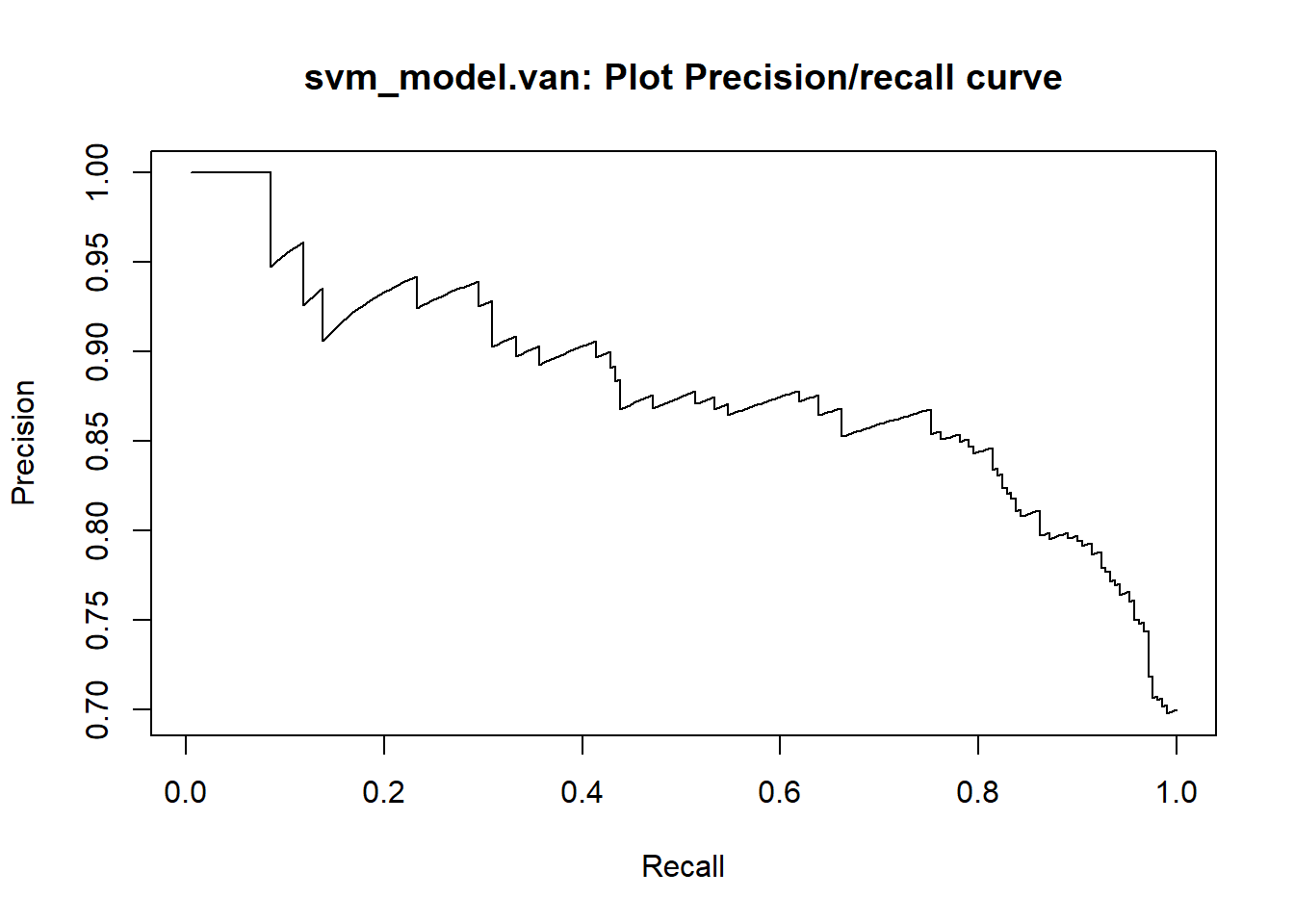

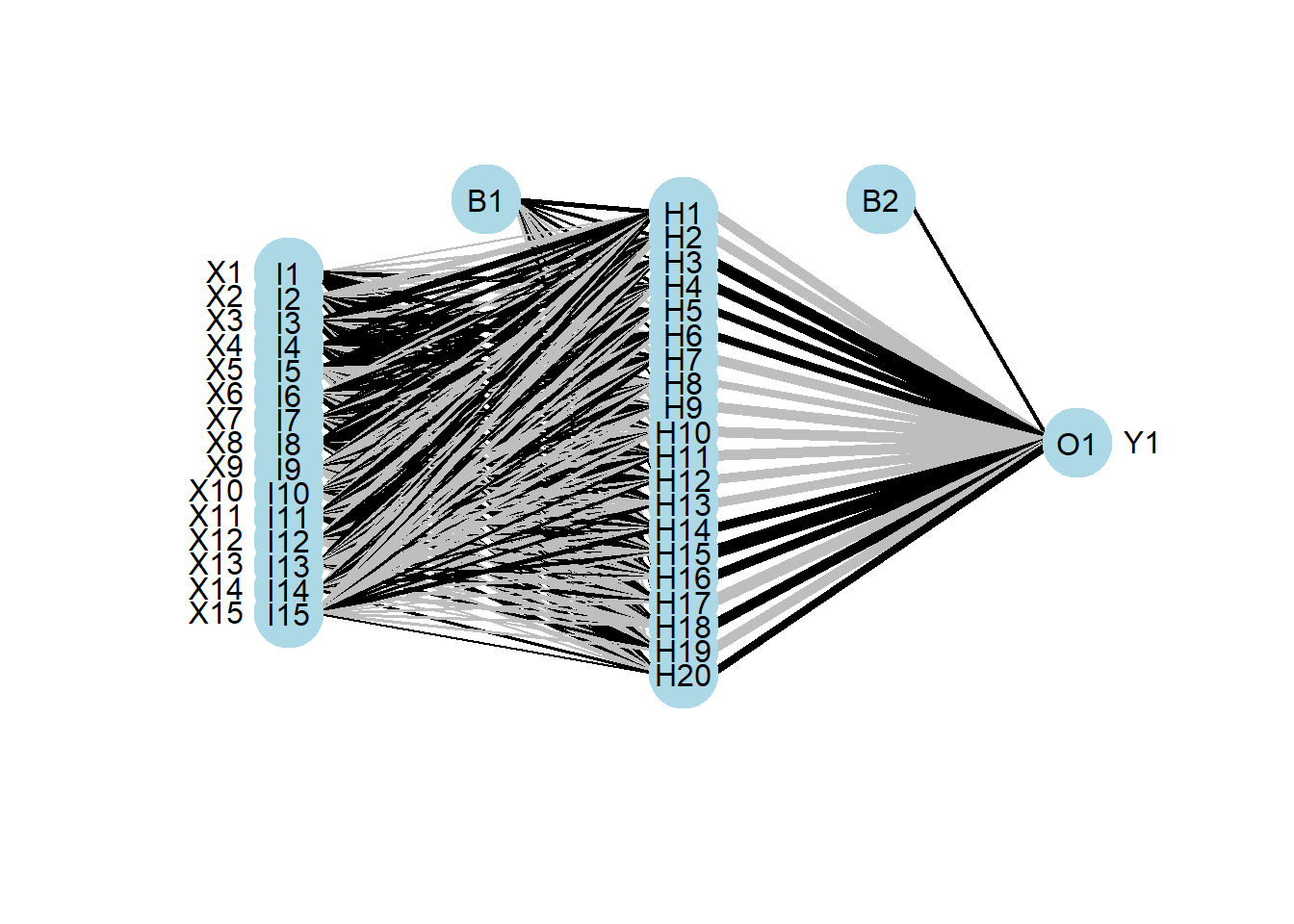

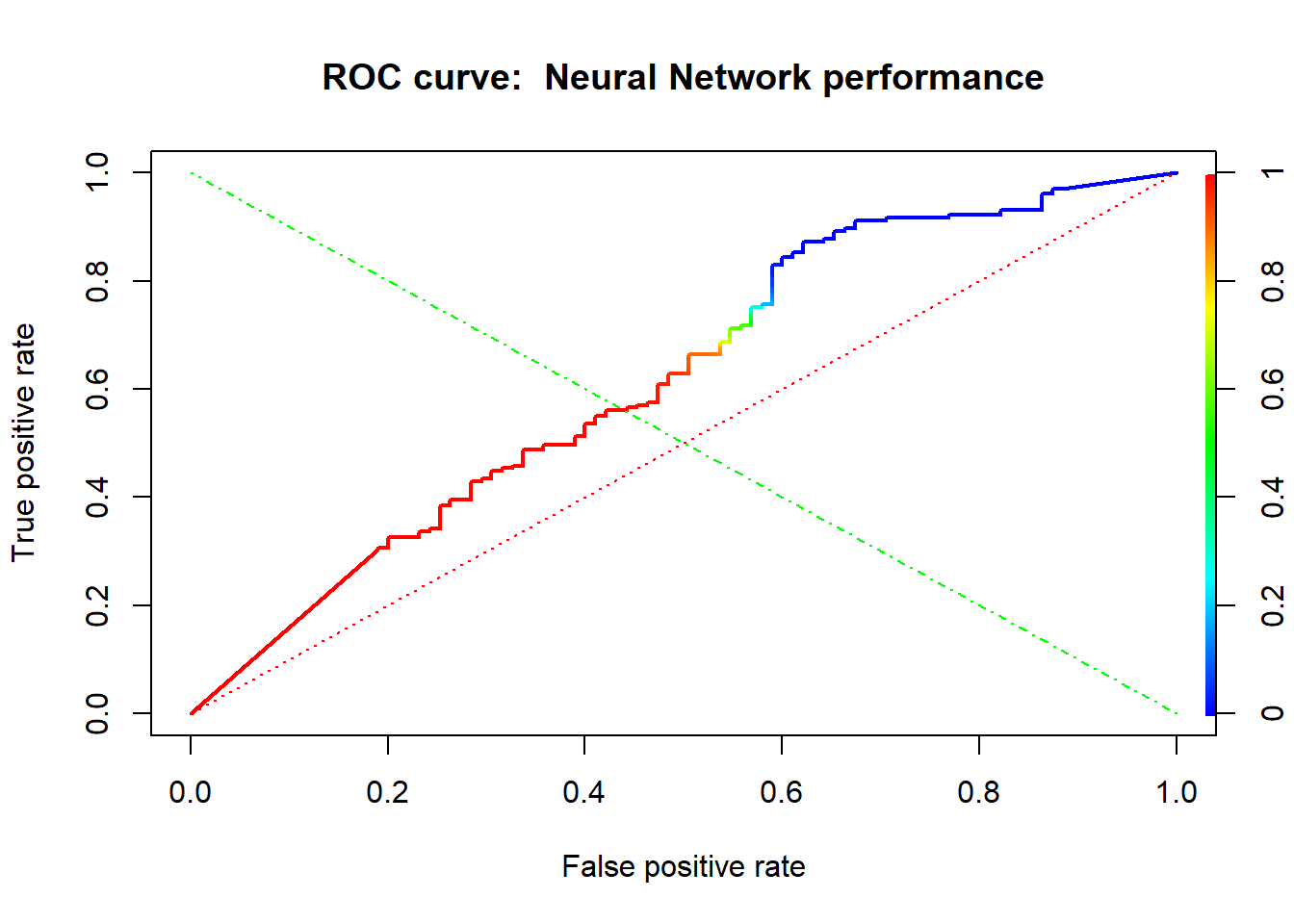

The package e1071 offers an interface to the award-winning C++- implementation by Chang & Lin(2001), libsvm